Similar presentations:

SOF108 - Computer Architecture Week 13: Parallel Processing

1. SOF108 - Computer Architecture Week 13: Parallel Processing

MALAYSIASOF108 - Computer Architecture

Week 13: Parallel Processing

1

2. Agenda

• Parallel Processing• Flynn’s taxonomy

• Multiprogramming vs Multiprocessing

• Cache coherence problem

• The MESI protocol

2

3. Parallel Processing

• Traditionally, the computer has been viewed as a sequentialmachine

• This view of the computer has never been entirely true

• At the micro-operation level

• multiple control signals are generated at the same time.

• Instruction pipelining, at least to the extent of overlapping fetch

and execute operations, has been around for a long time.

3

4. Parallel Processing

• Usually to enhance performance and,• In some cases, to increase availability

• Some of the most prominent approaches to parallel organization

• Symmetric multiprocessors (SMPs)

• Multithreaded processors and chip multiprocessors

4

5. Multiple Processor Organizations

• Multiprocessing = Multicores• The use of two or more CPUs within a single computer system

• Core - a unit that reads and executes instructions.

• A taxonomy first introduced by Flynn is still the most common way of

categorizing systems with parallel processing capability.

• Flynn proposed the following categories of computer system:

• Single instruction, single data stream (SISD)

• Single instruction, multiple data stream (SIMD)

• Multiple instruction, single data stream (MISD)

• Multiple instruction, multiple data stream (MIMD)

5

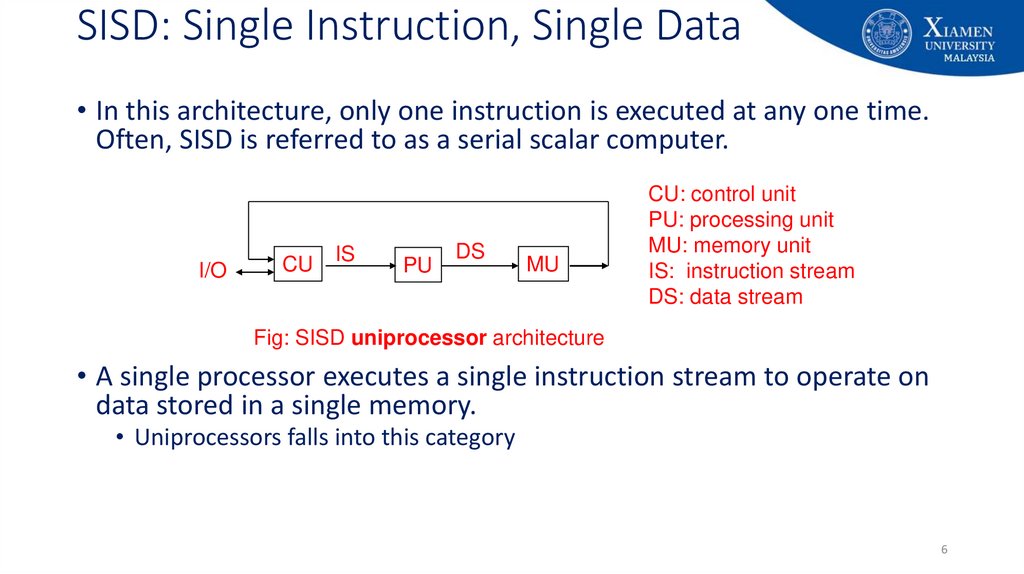

6. SISD: Single Instruction, Single Data

• In this architecture, only one instruction is executed at any one time.Often, SISD is referred to as a serial scalar computer.

I/O

CU

IS

PU

DS

MU

CU: control unit

PU: processing unit

MU: memory unit

IS: instruction stream

DS: data stream

Fig: SISD uniprocessor architecture

• A single processor executes a single instruction stream to operate on

data stored in a single memory.

• Uniprocessors falls into this category

6

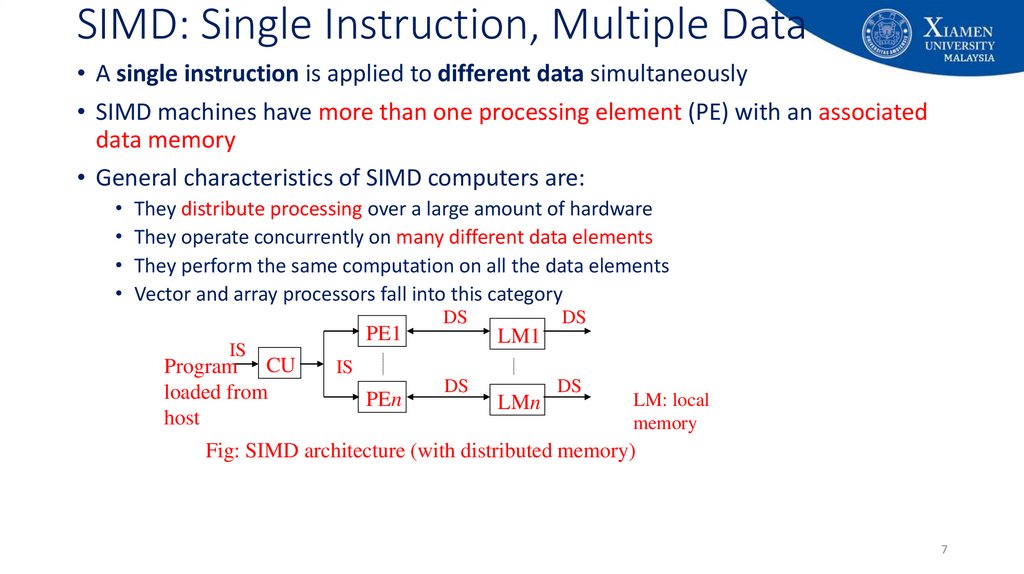

7. SIMD: Single Instruction, Multiple Data

• A single instruction is applied to different data simultaneously• SIMD machines have more than one processing element (PE) with an associated

data memory

• General characteristics of SIMD computers are:

• They distribute processing over a large amount of hardware

• They operate concurrently on many different data elements

• They perform the same computation on all the data elements

• Vector and array processors fall into this category

IS

Program CU

loaded from

host

PE1

DS

DS

LM1

IS

PEn

DS

LMn

DS

LM: local

memory

Fig: SIMD architecture (with distributed memory)

7

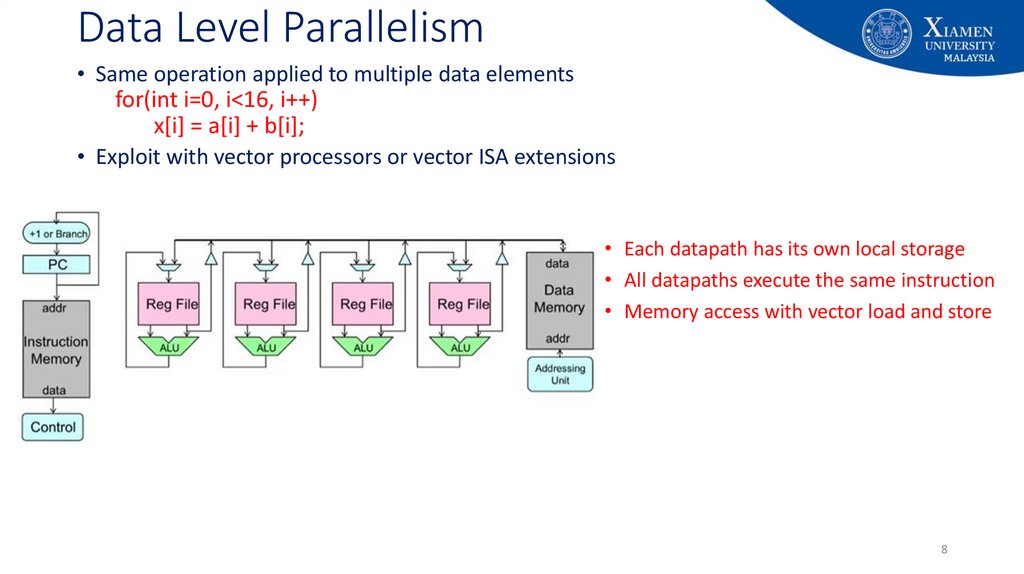

8. Data Level Parallelism

• Same operation applied to multiple data elementsfor(int i=0, i<16, i++)

x[i] = a[i] + b[i];

• Exploit with vector processors or vector ISA extensions

• Each datapath has its own local storage

• All datapaths execute the same instruction

• Memory access with vector load and store

8

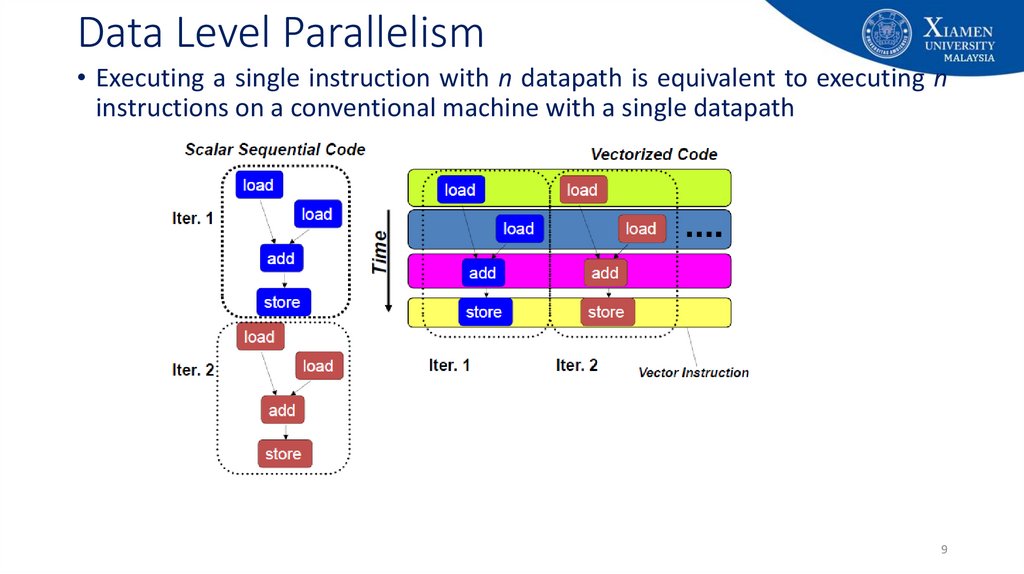

9. Data Level Parallelism

• Executing a single instruction with n datapath is equivalent to executing ninstructions on a conventional machine with a single datapath

9

10. MISD: Multiple Instruction, Single Data

• This implies that several instructions are operating on a single piece of data.• The same data flows through a linear array of processors executing different

instruction streams.

• This architecture is also known as systolic array for pipelined execution of specific

algorithms.

• Not much used in practice.

10

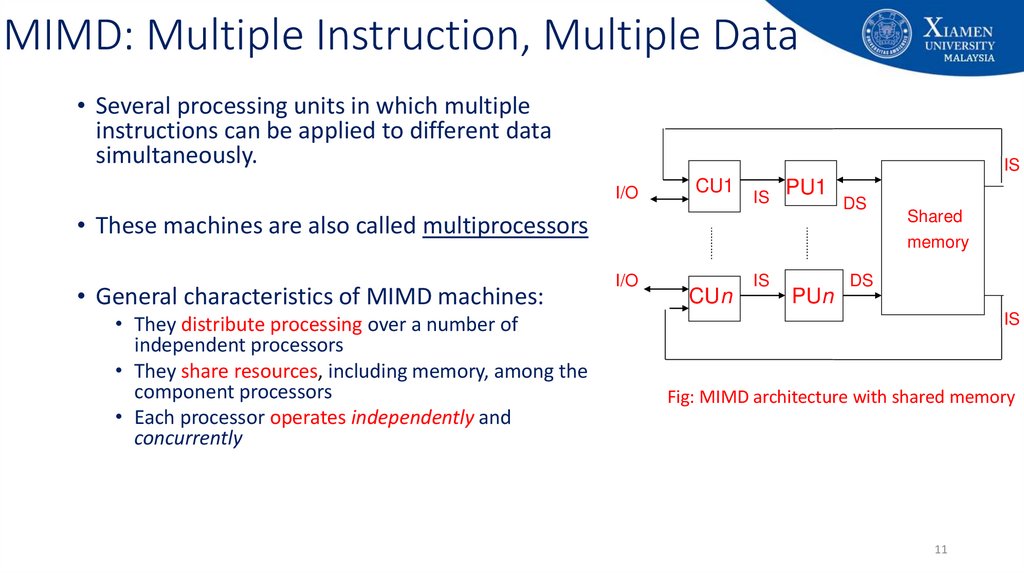

11. MIMD: Multiple Instruction, Multiple Data

• Several processing units in which multipleinstructions can be applied to different data

simultaneously.

IS

I/O

CU1

IS

PU1

• These machines are also called multiprocessors

• General characteristics of MIMD machines:

• They distribute processing over a number of

independent processors

• They share resources, including memory, among the

component processors

• Each processor operates independently and

concurrently

DS

Shared

memory

I/O

CUn

IS

PUn

DS

IS

Fig: MIMD architecture with shared memory

11

12. MIMD: Multiple Instruction, Multiple Data

• Multiple autonomous processors simultaneously executing differentinstructions on different data.

• Example:

• Symmetric shared-memory multiprocessors

• Distributed-memory multiprocessors

• Chip-multiprocessors / Multi-cores

12

13. Symmetric Multiprocessors

• Until fairly recently, virtually all single-user personal computers andmost workstations contained a single general purpose microprocessor

• Demands for performance increase

• Cost of microprocessors continues to drop

• Vendors have introduced systems with SMP organization

13

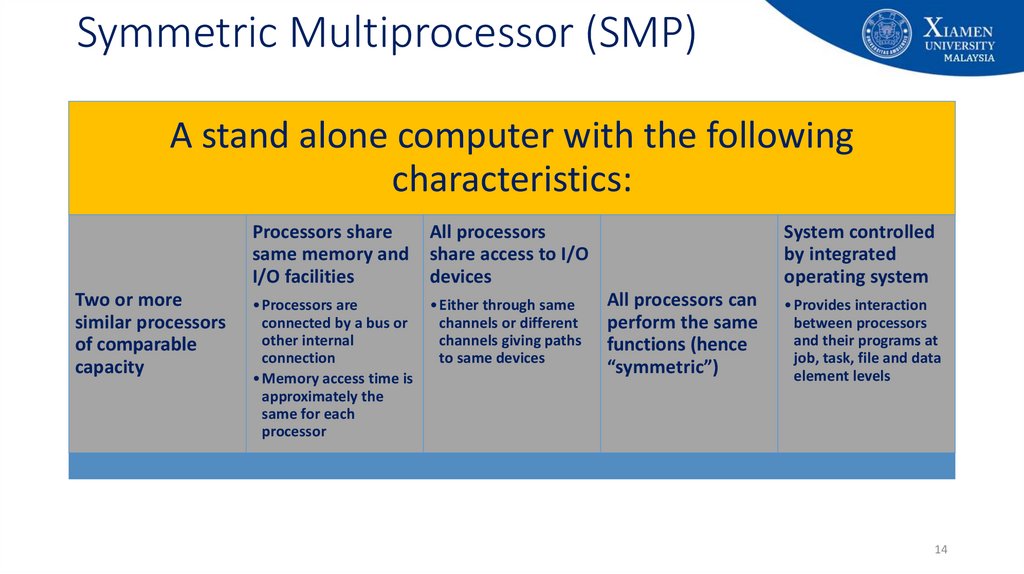

14. Symmetric Multiprocessor (SMP)

A stand alone computer with the followingcharacteristics:

Processors share

All processors

same memory and share access to I/O

I/O facilities

devices

Two or more

similar processors

of comparable

capacity

• Processors are

connected by a bus or

other internal

connection

• Memory access time is

approximately the

same for each

processor

• Either through same

channels or different

channels giving paths

to same devices

System controlled

by integrated

operating system

All processors can

perform the same

functions (hence

“symmetric”)

• Provides interaction

between processors

and their programs at

job, task, file and data

element levels

14

15. Multiprocessor System Organization

• Two or more processors• Self-contained, including a control

unit, ALU, registers, and one or more

levels of cache

• Processors can communicate with

each other through memory

15

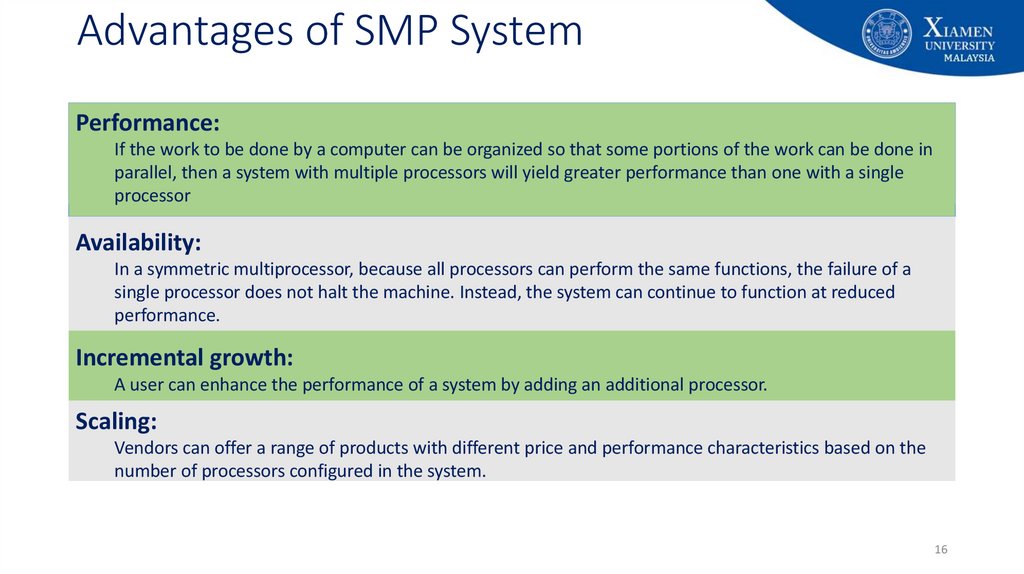

16. Advantages of SMP System

Performance:If the work to be done by a computer can be organized so that some portions of the work can be done in

parallel, then a system with multiple processors will yield greater performance than one with a single

processor

Availability:

In a symmetric multiprocessor, because all processors can perform the same functions, the failure of a

single processor does not halt the machine. Instead, the system can continue to function at reduced

performance.

Incremental growth:

A user can enhance the performance of a system by adding an additional processor.

Scaling:

Vendors can offer a range of products with different price and performance characteristics based on the

number of processors configured in the system.

16

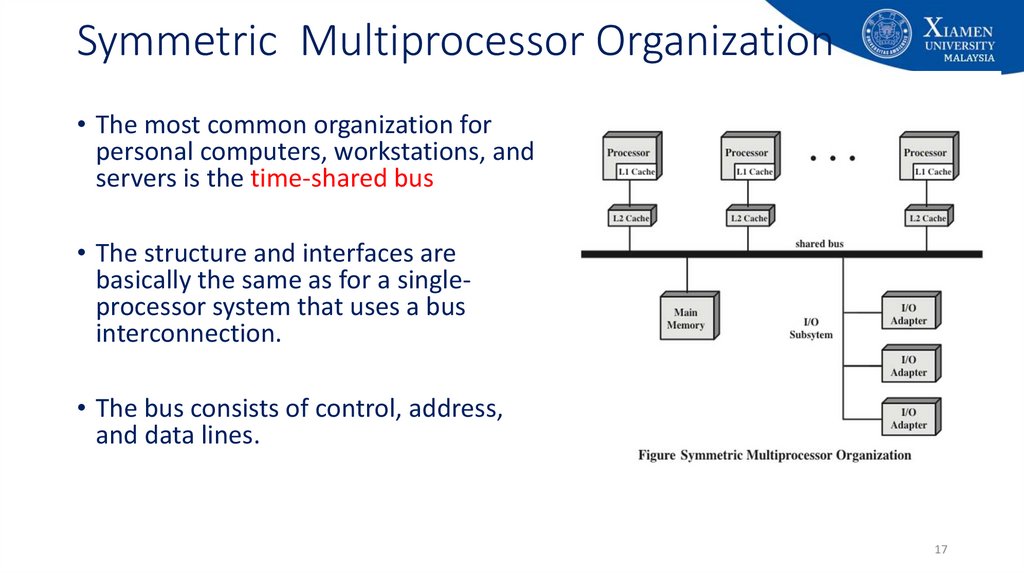

17. Symmetric Multiprocessor Organization

• The most common organization forpersonal computers, workstations, and

servers is the time-shared bus

• The structure and interfaces are

basically the same as for a singleprocessor system that uses a bus

interconnection.

• The bus consists of control, address,

and data lines.

17

18. Shared Bus Organization in SMP

• The bus organization has several attractive features• Simplicity

• Simplest approach to multiprocessor organization

• Flexibility

• Generally easy to expand the system by attaching more processors to the bus

• Reliability

• The bus is essentially a passive medium and the failure of any attached device should

not cause failure of the whole system

18

19. Shared Bus Organization in SMP

• Main drawback is performance• All memory references pass through the common bus

• Performance is limited by bus cycle time

• Each processor should have cache memory

• Reduces the number of bus accesses

• To improve performance, it is desirable to equip each processor with a cache memory

• Typically, workstation and PC SMPs have two levels of cache, with the L1 cache internal (same chip

as the processor) and the L2 cache either internal or external.

• Some processors now employ a L3 cache as well.

• Leads to problems with cache coherence

19

20. Multithreading and Chip Multiprocessors

• An alternative approach, which allows for a high degree of instructionlevel parallelism without increasing circuit complexity or power

consumption

• The instruction stream is divided into smaller streams, known as

threads

20

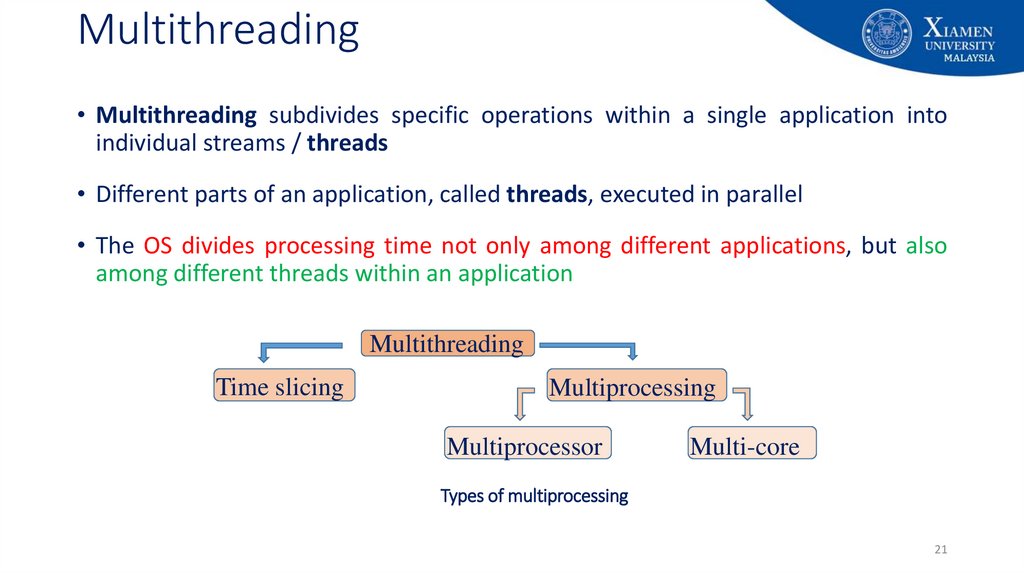

21. Multithreading

• Multithreading subdivides specific operations within a single application intoindividual streams / threads

• Different parts of an application, called threads, executed in parallel

• The OS divides processing time not only among different applications, but also

among different threads within an application

Multithreading

Time slicing

Multiprocessing

Multiprocessor

Multi-core

Types of multiprocessing

21

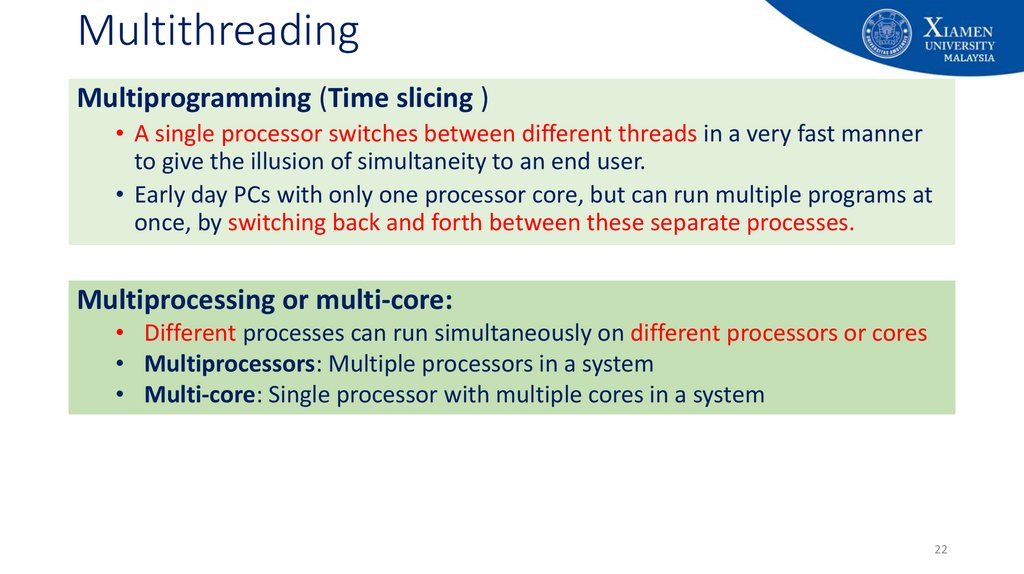

22. Multithreading

Multiprogramming (Time slicing )• A single processor switches between different threads in a very fast manner

to give the illusion of simultaneity to an end user.

• Early day PCs with only one processor core, but can run multiple programs at

once, by switching back and forth between these separate processes.

Multiprocessing or multi-core:

• Different processes can run simultaneously on different processors or cores

• Multiprocessors: Multiple processors in a system

• Multi-core: Single processor with multiple cores in a system

22

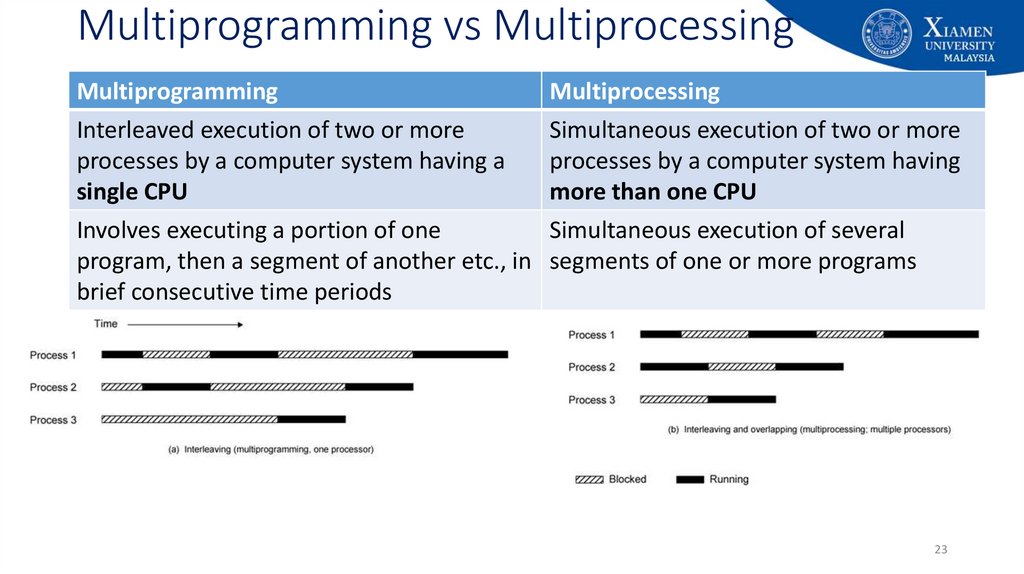

23. Multiprogramming vs Multiprocessing

MultiprogrammingMultiprocessing

Interleaved execution of two or more

Simultaneous execution of two or more

processes by a computer system having a

processes by a computer system having

single CPU

more than one CPU

Involves executing a portion of one

Simultaneous execution of several

program, then a segment of another etc., in segments of one or more programs

brief consecutive time periods

23

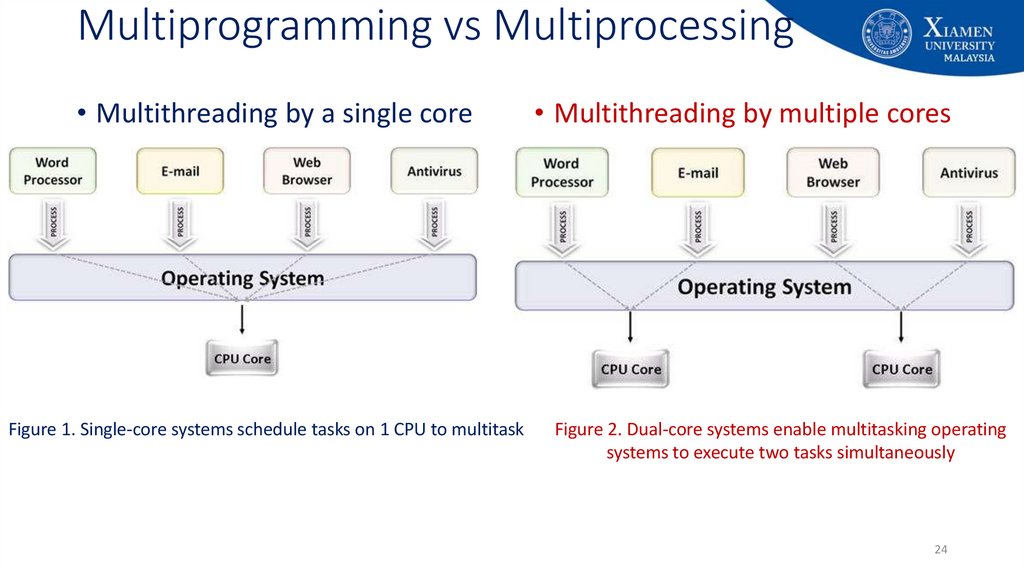

24. Multiprogramming vs Multiprocessing

• Multithreading by a single coreFigure 1. Single-core systems schedule tasks on 1 CPU to multitask

• Multithreading by multiple cores

Figure 2. Dual-core systems enable multitasking operating

systems to execute two tasks simultaneously

24

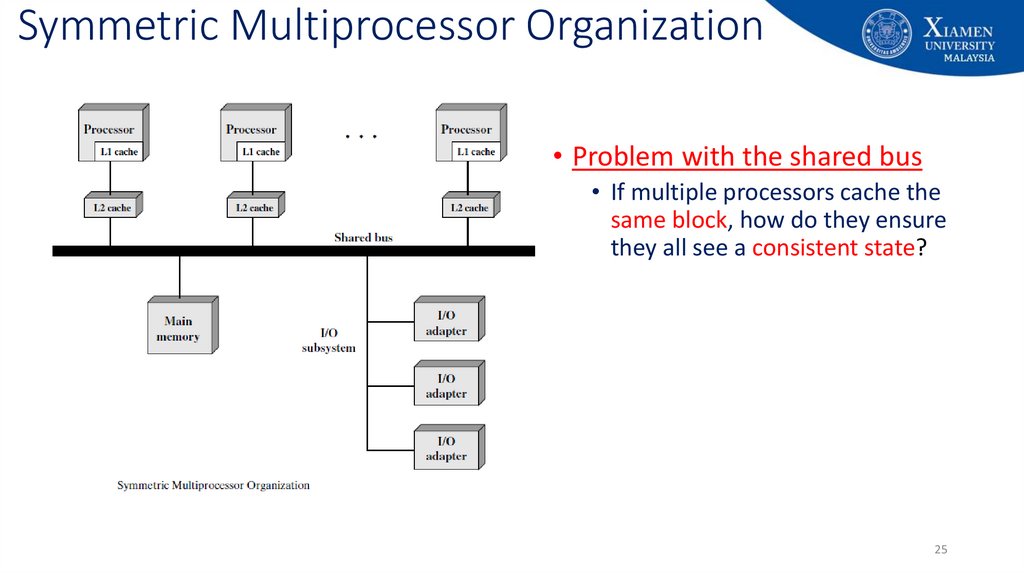

25. Symmetric Multiprocessor Organization

• Problem with the shared bus• If multiple processors cache the

same block, how do they ensure

they all see a consistent state?

25

26. Cache Coherence

• Because each local cache contains an image of a portion of memory, ifa word is altered in one cache, it could conceivably invalidate a word

in another cache.

• To prevent this, the other processors must be alerted that an update

has taken place.

• This problem is known as the cache coherence problem and is

typically addressed in hardware rather than by the operating system.

26

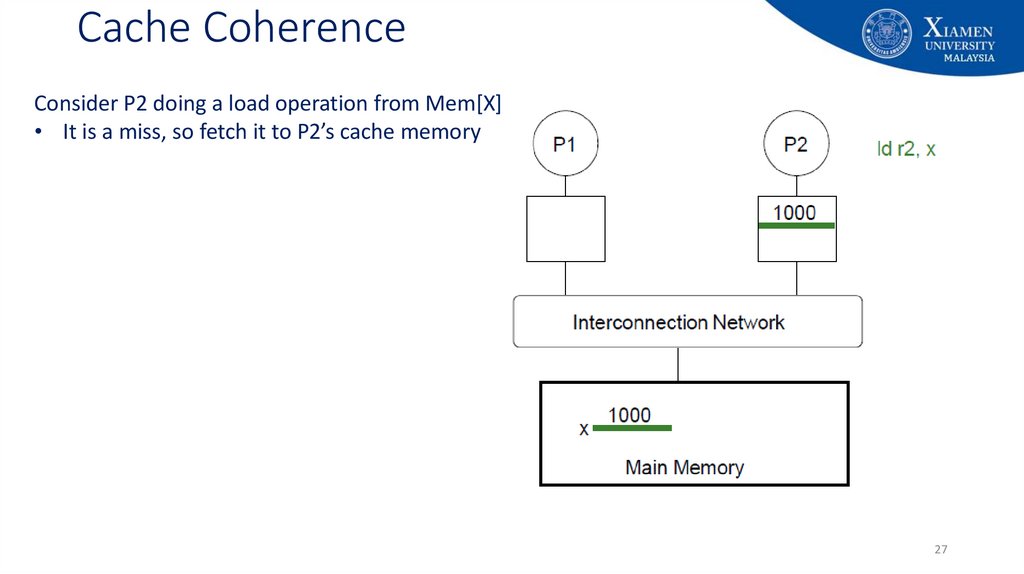

27. Cache Coherence

Consider P2 doing a load operation from Mem[X]• It is a miss, so fetch it to P2’s cache memory

27

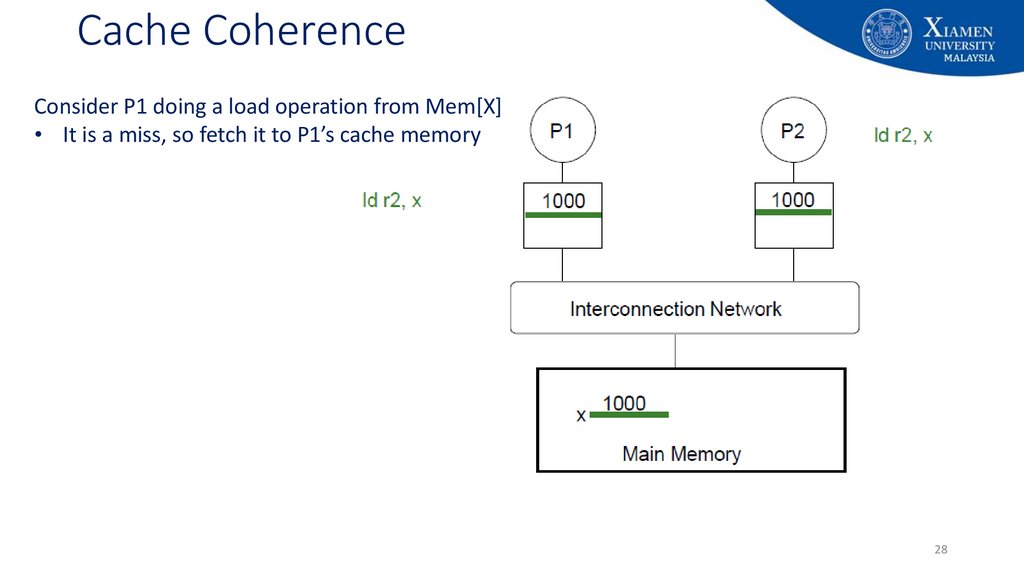

28. Cache Coherence

Consider P1 doing a load operation from Mem[X]• It is a miss, so fetch it to P1’s cache memory

28

29. Cache Coherence

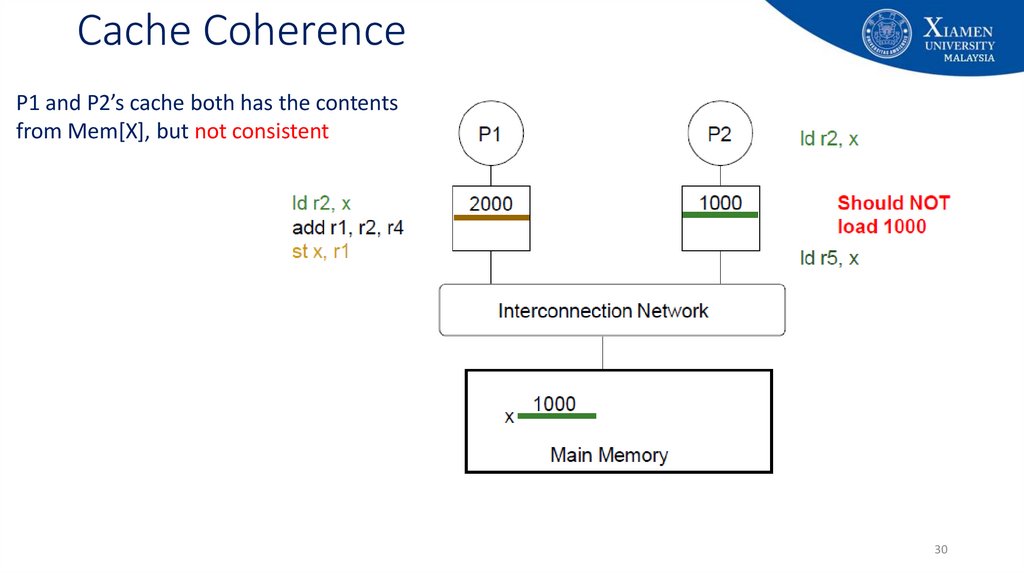

Both P1 and P2’s cache has the contents from Mem[X]P1 add r1, r2, r4

• Assume, the result in r1 is 2000

P1 stores the result in Mem[X]

• Stores in cache (it is a hit), if it is

a write back cache memory

P1 and P2’s cache bot has the contents from Mem[X],

but not consistent

29

30. Cache Coherence

P1 and P2’s cache both has the contentsfrom Mem[X], but not consistent

30

31. Example: Cache coherence problem

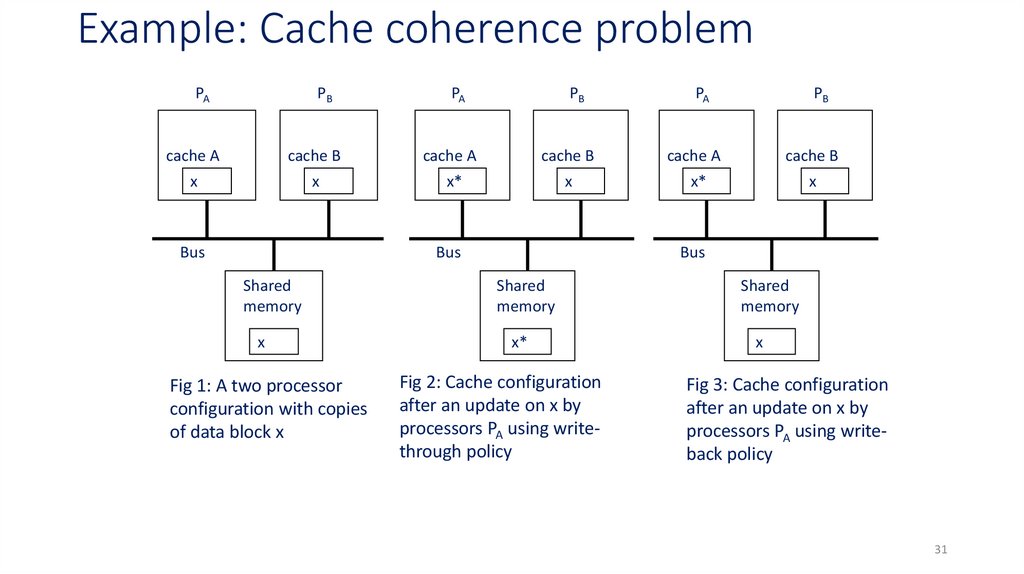

PAPB

PA

PB

PA

PB

cache A

cache B

cache A

cache B

cache A

cache B

x

x

x*

x

x*

x

Bus

Bus

Bus

Shared

memory

Shared

memory

Shared

memory

x

x*

x

Fig 1: A two processor

configuration with copies

of data block x

Fig 2: Cache configuration

after an update on x by

processors PA using writethrough policy

Fig 3: Cache configuration

after an update on x by

processors PA using writeback policy

31

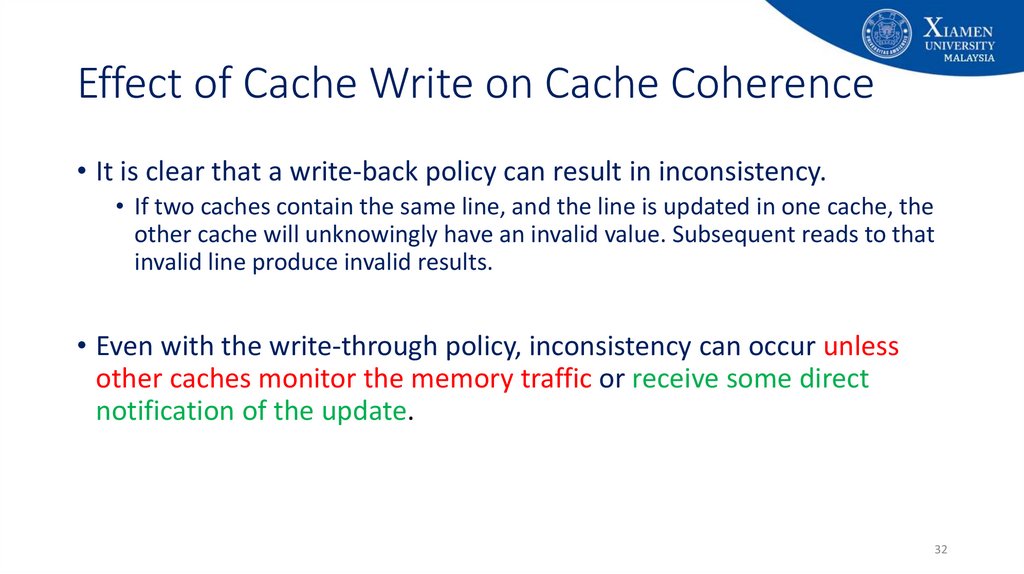

32. Effect of Cache Write on Cache Coherence

• It is clear that a write-back policy can result in inconsistency.• If two caches contain the same line, and the line is updated in one cache, the

other cache will unknowingly have an invalid value. Subsequent reads to that

invalid line produce invalid results.

• Even with the write-through policy, inconsistency can occur unless

other caches monitor the memory traffic or receive some direct

notification of the update.

32

33. Cache Coherence Protocol

• The objective is• to let recently used local variables get into the appropriate cache

• and stay there through numerous reads and write

• while using the protocol to maintain consistency of shared variables that might be in

multiple caches at the same time.

• Cache coherence approaches have generally been divided into software

and hardware approaches.

• Some implementations adopt a strategy that involves both software and

hardware elements.

33

34. Cache Coherence: Software Solutions

• Compiler-based coherence mechanisms perform an analysis on thecode to determine which data items may become unsafe for caching,

and they mark those items accordingly.

• The operating system or hardware then prevents non-cacheable

items from being cached

• The simplest approach is to prevent any shared data variables from

being cached.

34

35. Cache Coherence: Software Solutions

• Software cache coherence schemes attempt to avoid the need foradditional hardware circuitry and logic by relying on the compiler and

operating system to deal with the problem.

• Software approaches are attractive because the overhead of detecting

potential problems is transferred from run time to compile time, and the

design complexity is transferred from hardware to software.

• On the other hand, compile-time software approaches generally must

make conservative decisions, leading to inefficient cache utilization.

35

36. Cache Coherence: Hardware Solutions

• Hardware solutions are generally referred as cache coherence protocols.• These solutions provide dynamic recognition at run time by identifying

potential inconsistency conditions.

• Because the problem is only dealt with when it actually arises, there is

more effective use of caches, leading to improved performance over a

software approach.

• In addition, these approaches are transparent to the programmer and the

compiler, reducing the software development burden.

36

37. Cache Coherence: Hardware Solutions

• Directory Protocols• Snoopy Protocols

37

38. Snoopy Protocols

• Snoopy protocols distribute the responsibility for maintaining cache coherenceamong all of the cache controllers in a multiprocessor.

• A cache must recognize when a line that it holds is shared with other caches.

• When an update action is performed on a shared cache line, it must be

announced to all other caches by a broadcast mechanism.

• Each cache controller is able to “snoop” on the network to observe these

broadcasted notifications, and react accordingly.

38

39. Snoopy Protocols

• Snoopy protocols are ideally suited to a bus-based multiprocessor, because theshared bus provides a simple means for broadcasting and snooping.

• However, because one of the objectives of the use of local caches is to avoid bus

accesses,

• care must be taken that the increased bus traffic required for broadcasting and snooping

does not cancel out the gains from the use of local caches

• Two basic approaches have been explored:

• Write invalidate

• Write update (or write broadcast)

39

40. Snoopy Protocols: Write Invalidate

• Write-invalidate protocol:• there can be multiple readers but only one writer at a time.

• Initially, a line may be shared among several caches for reading purposes.

• When one of the caches wants to perform a write to the line, it first issues a

notice that invalidates that line in the other caches, making the line exclusive

to the writing cache.

• Once the line is exclusive, the owning processor can make cheap local writes

until some other processor requires the same line.

40

41. Snoopy Protocols: Write Update

• With a write-update protocol, there can be multiple writers as well asmultiple readers.

• When a processor wishes to update a shared line, the word to be updated

is distributed to all others, and caches containing that line can update it.

• Some systems use an adaptive mixture of both write-invalidate and writeupdate mechanisms

41

42. Write Invalid vs Write Update

• Neither of these two approaches is superior to the other under allcircumstances.

• Performance depends on the number of local caches and the pattern of

memory reads and writes.

• Some systems implement adaptive protocols that employ both writeinvalidate and write-update mechanisms.

42

43. The MESI Protocol

• To provide cache consistency on an SMP, the data cache oftensupports a protocol known as MESI.

• For MESI, the data cache includes two status bits per tag, so that each

line can be in one of four states:

• Modified

• Exclusive

• Shared

• Invalid

43

44. The MESI Protocol

• Modified: The line in the cache has been modified (different from main memory)and is available only in this cache.

• Exclusive: The line in the cache is the same as that in main memory and is not

present in any other cache.

• Shared: The line in the cache is the same as that in main memory and may be

present in another cache.

• Invalid: The line in the cache does not contain valid data.

44

45. The MESI Protocol: Read MISS

• When a read miss occurs in the local cache• the processor initiates a memory read to read the line of main memory

containing the missing address.

• The processor inserts a signal on the bus that alerts all other processor/cache

units to snoop the transaction. There are a number of possible outcomes

45

46. The MESI Protocol: Read MISS – Outcome 1

• If one other cache has a clean copy of the line in the exclusive state, itreturns a signal indicating that it shares this line.

• Does the responding processor needs to change it’s state?

• The responding processor then transitions the state of its copy from

exclusive to shared

• The initiating processor reads the line from main memory and transitions

the line in its cache from invalid to shared.

46

47. The MESI Protocol: Read MISS – Outcome 2

• If one or more caches have a clean copy of the line in the sharedstate, each of them signals that it shares the line.

• Does the responding processor need to change it’s state?

• The initiating processor reads the line and transitions the line in its

cache from invalid to shared.

47

48. The MESI Protocol: Read MISS – Outcome 3

• If one other cache has a modified copy of the line, then that cache blocksthe memory read and provides the line to the requesting cache over the

shared bus.

• Need to change the state?

• The responding cache then changes its line from modified to shared.

• The line sent to the requesting cache is also received and processed by the

memory controller, which stores the block in memory.

48

49. The MESI Protocol: Read MISS – Outcome 4

• If no other cache has a copy of the line (clean or modified), then nosignals are returned.

• The initiating processor reads the line.

• What state?

• Transitions the line in its cache from invalid to exclusive

49

50. The MESI Protocol: Read HIT

• When a read hit occurs on a line currently in the local cache, theprocessor simply reads the required item.

• Need to change the state?

• There is no state change: The state remains modified, shared, or exclusive.

50

51. The MESI Protocol: Write MISS

• When a write miss occurs in the local cache,• the processor initiates a memory read to read the line of main memory containing the missing

address

• For this purpose, the processor issues a signal on the bus that means read-with-intentto-modify (RWITM)

• When the line is loaded, it is immediately marked modified

• With respect to other caches, two possible scenarios precede the loading of the line of

data.

51

52. The MESI Protocol: Write MISS – Scenario 1

• First, some other cache may have a modified copy of this line (state = modify)• In this case, the alerted processor signals the initiating processor that another processor has a

modified copy of the line.

• The initiating processor surrenders the bus and waits.

• The other processor gains access to the bus,

• writes the modified cache line back to main memory,

• and transitions the state of the cache line to invalid (because the initiating processor is going to modify this

line).

• Subsequently, the initiating processor will again issue a signal to the bus of RWITM

• and then read the line from main memory,

• modify the line in the cache,

• and mark the line in the modified state

52

53. The MESI Protocol: Write MISS – Scenario 2

• The second scenario is that no other cache has a modified copy of therequested line.

• In this case, no signal is returned, and the initiating processor proceeds to

read in the line and modify it.

• Initiating processor state?

• Anything else need to do? What about if another cache has a clean copy of

the line?

• Meanwhile, if one or more caches have a clean copy of the line in the shared state,

each cache invalidates its copy of the line,

• and if one cache has a clean copy of the line in the exclusive state, it invalidates its

copy of the line.

53

54. The MESI Protocol: Write HIT

• Shared: Before performing the update,• The processor must gain exclusive ownership of the line.

• The processor signals its intent on the bus.

• Each processor that has a shared copy of the line in its cache transitions the sector

from shared to invalid.

• The initiating processor then performs the update

• Do we need to change the state of the initiating processor?

• Yes. Initiating processor change its copy of the line from shared to modified

54

55. The MESI Protocol: Write HIT

• Exclusive:• The processor already has exclusive control of this line,

• and so it simply performs the update

• Do we need to change the state?

• Yes. Initiating processor transitions its copy of the line from exclusive to modified

55

56. The MESI Protocol: Write HIT

• Modified:• The processor already has exclusive control of this line

• and so it simply performs the update

• Do we need to change the state?

• No. It’s already marked as modified in the copy of initiating processor’s cache.

56

57. Summary

• Parallelism• Multithreading

• Multiprocessing

• Multiprogramming

• Multi-core

• Cache coherence problem

• Cache coherence protocols

• Snoopy bus: The MESI Protocol

57

58. End of Lecture 10!

End of Lecture 10!• Additional readings:

• Chapter 17 (Parallel Processing): Stallings, W. Computer organization and

architecture: Designing for performance, Tenth Edition Upper Saddle River, N.J:

Prentice Hall.

• Next week lecture: Pipelining

• Questions?

58

59.

Appendix59

60.

Appendix: University's vision and missionVision

Xiamen University Malaysia aspires to become a university with a

distinct global outlook, featuring first-class teaching and research, and

embracing cultural diversity.

Mission

To nurture young talents with dignity and wisdom, turning them into

fine citizens of the region who will contribute to the prosperity of the

people and social progress of Malaysia, China and Southeast Asia.

60

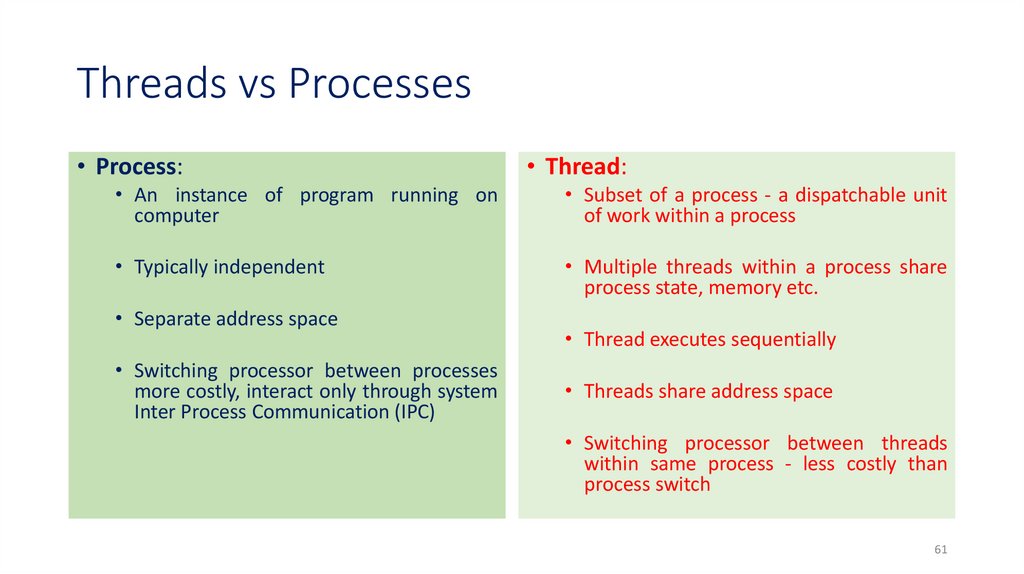

61. Threads vs Processes

• Process:• Thread:

• An instance of program running on

computer

• Subset of a process - a dispatchable unit

of work within a process

• Typically independent

• Multiple threads within a process share

process state, memory etc.

• Separate address space

• Switching processor between processes

more costly, interact only through system

Inter Process Communication (IPC)

• Thread executes sequentially

• Threads share address space

• Switching processor between threads

within same process - less costly than

process switch

61

62. Multi-Core (Single Chip Multiprocessors)

• A multi-core CPU combines two or more independent cores into a single packagecomposed of a single integrated circuit (IC)

• A dual-core processor contains two cores and a quad-core processor contains

four cores.

• Cores in a multicore device may share a single coherent cache (e.g. Intel Core 2)

or may have separate caches (e.g. AMD dual-core processors).

• The processors also share the same interconnection to the rest of the system.

• Each "core" independently implements optimizations such as pipelining.

62

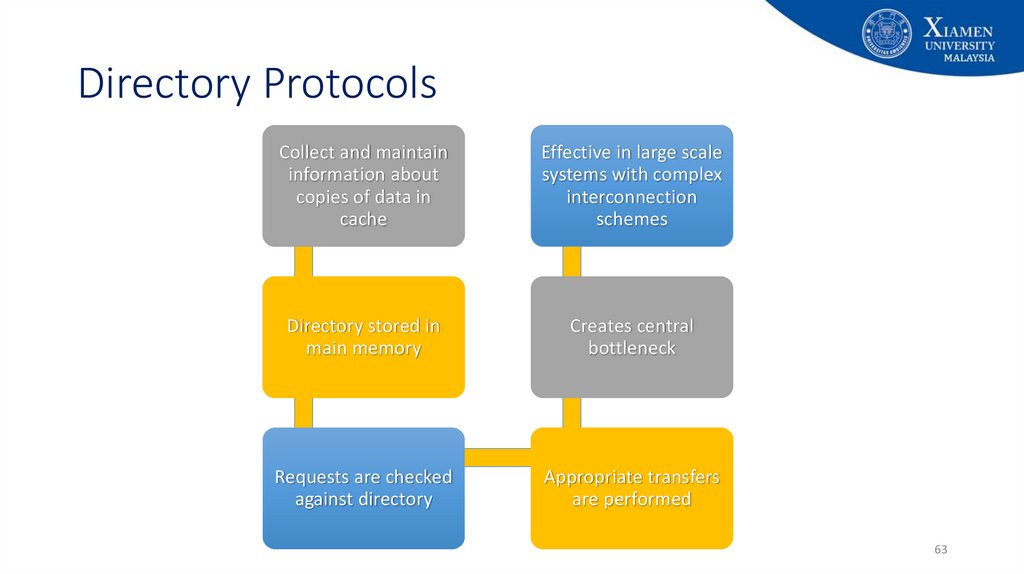

63. Directory Protocols

Collect and maintaininformation about

copies of data in

cache

Effective in large scale

systems with complex

interconnection

schemes

Directory stored in

main memory

Creates central

bottleneck

Requests are checked

against directory

Appropriate transfers

are performed

63

informatics

informatics