
1.

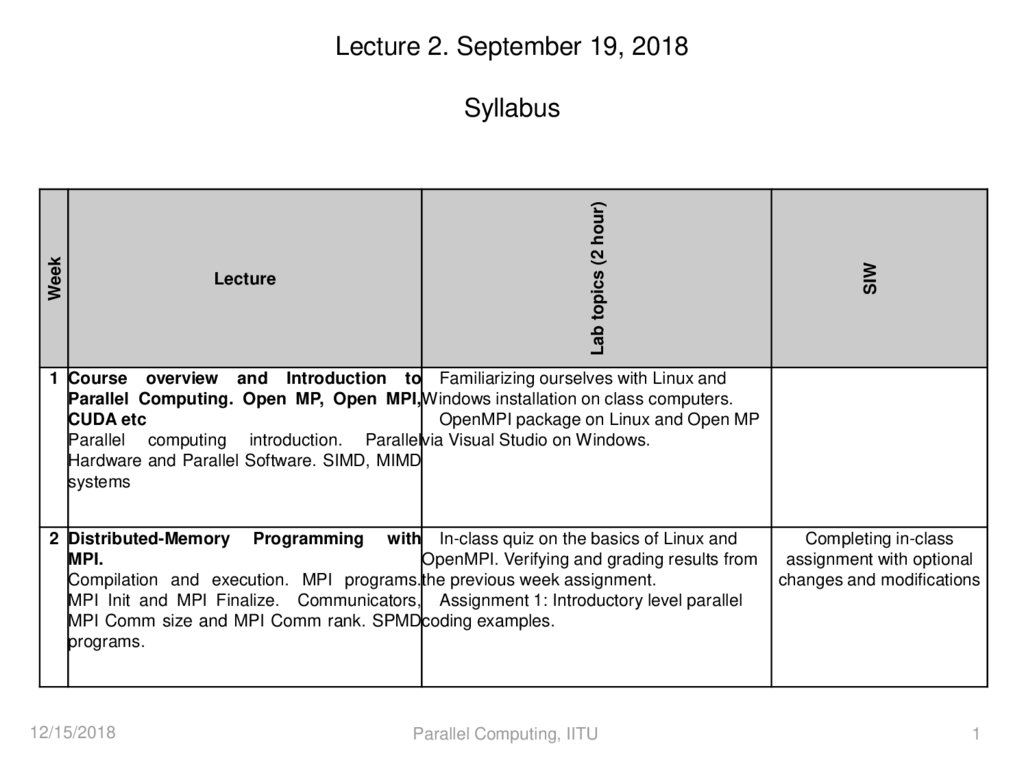

Lecture 2. September 19, 2018

Syllabus

| Week | Lecture | Lab topics (2 hours) | SIW |
|---|---|---|---|
| 1 | Course overview and introduction to parallel computing: OpenMP, Open MPI, CUDA, etc. Parallel computing introduction. Parallel hardware and parallel software. SIMD and MIMD systems. | Familiarizing ourselves with the Linux and Windows installations on the class computers. The Open MPI package on Linux, and OpenMP via Visual Studio on Windows. | |
| 2 | Distributed-memory programming with MPI. Compilation and execution. MPI_Init and MPI_Finalize. Communicators, MPI_Comm_size and MPI_Comm_rank. SPMD programs. | In-class quiz on the basics of Linux and Open MPI. Verifying and grading results from the previous week's assignment. Assignment 1: introductory-level parallel coding examples. | Completing the in-class assignment, with optional changes and modifications. |

12/15/2018

Parallel Computing, IITU

2.

Grading

Intermediate exam (to be discussed)

| Period | Task | Weight / grade scale | Total |
|---|---|---|---|
| 1st period | Attendance | 10 | 100 |
| | Assignments 1-4 | 20 each | |
| | Quizzes | 10 | |
| 2nd period | Attendance | 10 | 100 |
| | Assignments 5-6 | 40 each | |
| | Quizzes | 10 | |
| Final exam | MCQ | 50 | 100 |
| | Open questions | 50 | |
| Total mark | | 100 | 100 |

3. How to compile and run a simple MS-MPI program

MPI is simply a standard that others follow in their implementations. Because of this, there is a wide variety of MPI implementations available.

1. Installing MPICH2 on a Single Machine

http://mpitutorial.com/tutorials/installing-mpich2/

2. How to compile and run a simple MS-MPI program

https://blogs.technet.microsoft.com/windowshpc/2015/02/02/how-to-compile-and-run-a-simple-ms-mpi-program/

3. Install Open MPI: Open Source High Performance Computing

http://lsi.ugr.es/jmantas/pdp/ayuda/datos/instalaciones/Install_OpenMPI_en.pdf


4. How to compile and run a simple MS-MPI program

https://blogs.technet.microsoft.com/windowshpc/2015/02/02/how-to-compile-and-run-a-simple-ms-mpi-program/

1. Download the MS-MPI SDK and Redist installers and install them:

https://docs.microsoft.com/en-us/message-passing-interface/microsoft-mpi

https://www.microsoft.com/en-us/download/details.aspx?id=56727

The installation order does not matter.

2. Make sure that the MS-MPI environment variables exist and note their values; if they do not exist, create them manually.


5.

Continued from the previous slide

3. Open Visual Studio and create a new Visual C++ Win32 Console Application project. Name it MPIHelloWorld (or whatever you like) and use the default settings.

4. Set up the include directories so that the compiler can find the MS-MPI header files. We will build for 64 bits, so point the include directories to $(MSMPI_INC);$(MSMPI_INC)\x64. If you build for 32 bits, use $(MSMPI_INC);$(MSMPI_INC)\x86 instead.


6.

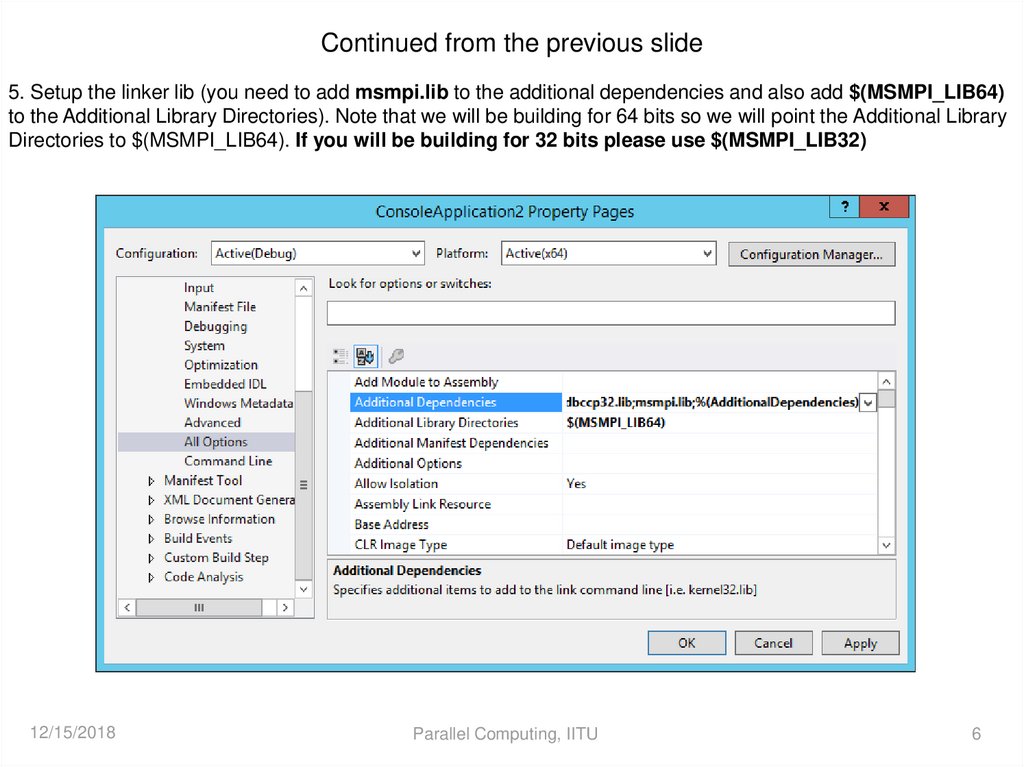

Continued from the previous slide

5. Set up the linker: add msmpi.lib to Additional Dependencies, and add $(MSMPI_LIB64) to Additional Library Directories. We are building for 64 bits, so Additional Library Directories points to $(MSMPI_LIB64). If you build for 32 bits, use $(MSMPI_LIB32) instead.


7.

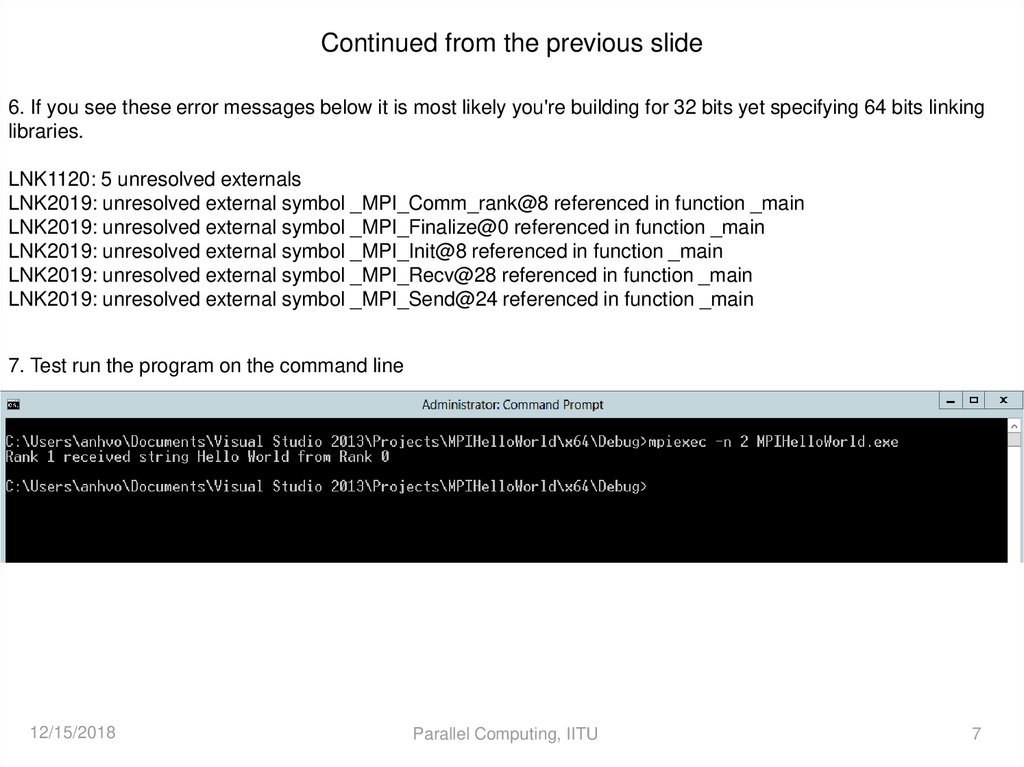

Continued from the previous slide

6. If you see the error messages below, you are most likely building for 32 bits while linking against the 64-bit libraries.

LNK1120: 5 unresolved externals

LNK2019: unresolved external symbol _MPI_Comm_rank@8 referenced in function _main

LNK2019: unresolved external symbol _MPI_Finalize@0 referenced in function _main

LNK2019: unresolved external symbol _MPI_Init@8 referenced in function _main

LNK2019: unresolved external symbol _MPI_Recv@28 referenced in function _main

LNK2019: unresolved external symbol _MPI_Send@24 referenced in function _main

7. Test-run the program from the command line.


8. mpiexec

https://docs.par-tec.com/html/psmpi-userguide/rn01re01.html

The mpiexec command is the typical way to start parallel or serial jobs. It hides from the user the differences in how the various implementations of the Message Passing Interface, version 2, start jobs.

In-class/home assignment: find out, test and demonstrate (using screenshots, etc.) the mpiexec options specific to MS-MPI.


9. _CRT_SECURE_NO_WARNINGS


10. MPI_COMM_WORLD

MPI_Init defines something called MPI_COMM_WORLD for each process that calls it. MPI_COMM_WORLD is a communicator. All MPI communication calls require a communicator argument, and MPI processes can only communicate if they share a communicator.

A communicator is an object describing a group of processes. In many applications all processes work together closely coupled, and the only communicator you need is MPI_COMM_WORLD, the group describing all the processes your job starts with.


11. MPI 3.1
