Introduction to server side performance testing

1.

Introduction to server-side performance testing

~30 slides to make the World more performing

December 4, 2017

CONFIDENTIAL


2.

AGENDA

1. Quality

2. Performance: Why? Who? How?

3. EPAM POG: some facts

4. System under load: general approach

5. Performance testing definitions

6. Types of tests

7. Monitoring

8. Reporting

9. Literature

3.

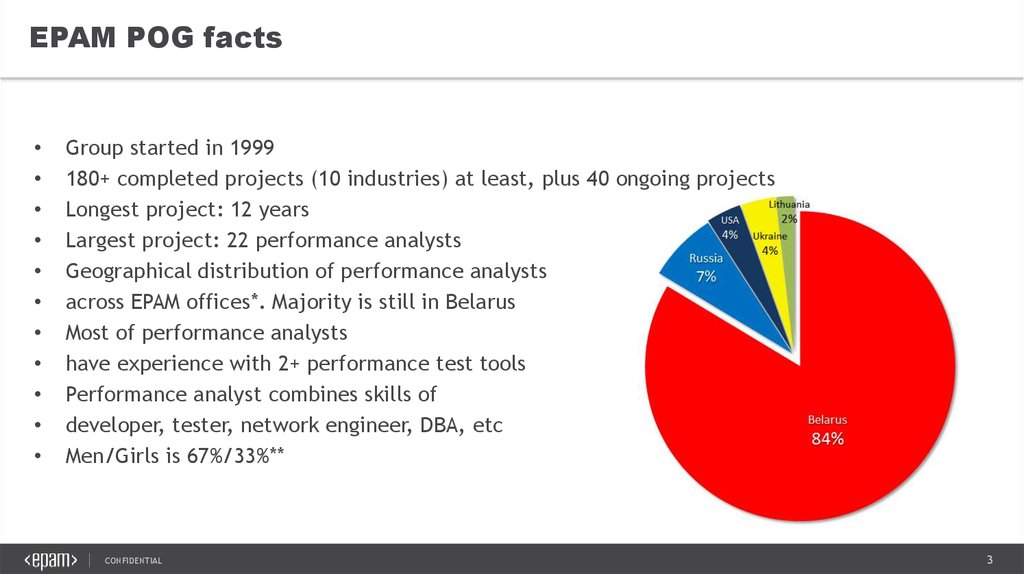

EPAM POG FACTS

Group started in 1999

At least 180 completed projects (10 industries), plus 40 ongoing projects

Longest project: 12 years

Largest project: 22 performance analysts

Geographical distribution of performance analysts across EPAM offices*: the majority are still in Belarus

Most performance analysts have experience with 2+ performance test tools

A performance analyst combines the skills of a developer, tester, network engineer, DBA, etc.

Men/women ratio is 67%/33%**

4.

SOFTWARE QUALITY

Quality is a property which shows whether a product meets its functional requirements (expectations) or not.

Anything wrong?

One small correction makes a difference:

Quality is a property which shows whether a product meets its requirements (expectations) or not.

Performance: the degree to which a system or component accomplishes its designated functions within given constraints* (IEEE).

5.

THE PURPOSE OF PERFORMANCE OPTIMIZATION

As per ISO 9126, software product quality consists of:

• Functionality, Usability: functional testing process

• Reliability, Efficiency: performance optimization process

• Maintainability, Portability: our descendants will do that… hopefully

6.

WHY PERFORMANCE?

No performance → no quality

Poor performance → poor quality

Good performance → good quality

What are the usual consequences of poor performance?

• Unbearably slow product response to user requests

• Unexpected application crashes

• Expected (but still unwanted) application crashes at moments of extreme increase in load

• Software product vulnerability to attacks

• Problems with product scalability in case it gets more popular

• And more

7.

STEPS TO ASSURE GOOD PERFORMANCE

The ultimate goal of the performance optimization process is to assure good product performance.

To do that, the following steps are performed (often iteratively):

1. Define and check requirements (= requirement analysis)

2. Measure performance (= performance testing)

3. Find performance bottlenecks (= performance analysis)

4. Fix the problems found, so performance increases (= tuning)

Requirements → Testing → Analysis → Tuning

8.

REALISTIC PICTURE OF THE PROCESS

Bad input

Good output

9.

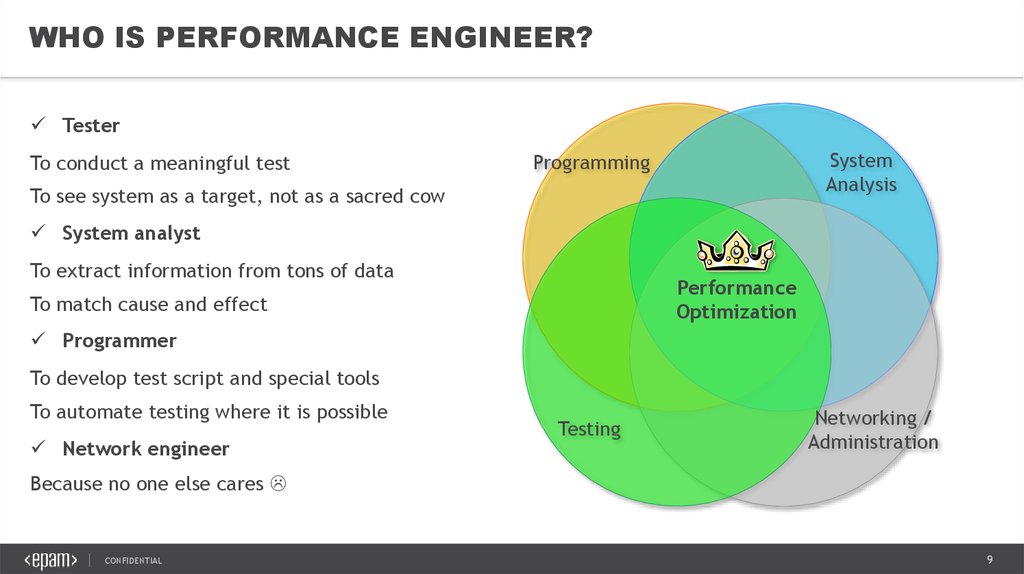

WHO IS PERFORMANCE ENGINEER?

Tester

• To conduct a meaningful test

• To see the system as a target, not as a sacred cow

System analyst

• To extract information from tons of data

• To match cause and effect

Programmer

• To develop test scripts and special tools

• To automate testing where possible

Network engineer

• Because no one else cares

[Diagram: performance optimization at the intersection of system analysis, programming, testing, and networking / administration]

10.

WHEN TO START

Classic approach:

When the code is ready and functionally working.

But that is too late, because you need time for performance fixes.

Better options:

1. Start testing at the same time as the functional team (at least), which means you need to develop scripts and prepare everything even earlier

2. Start after the functional test, but allocate time for performance fixes and functional re-test

Remember!

You are likely to spend most of the time on analysis of test results. It is not scripting and test running that make the work difficult and long; it is the search for performance bottlenecks.

11.

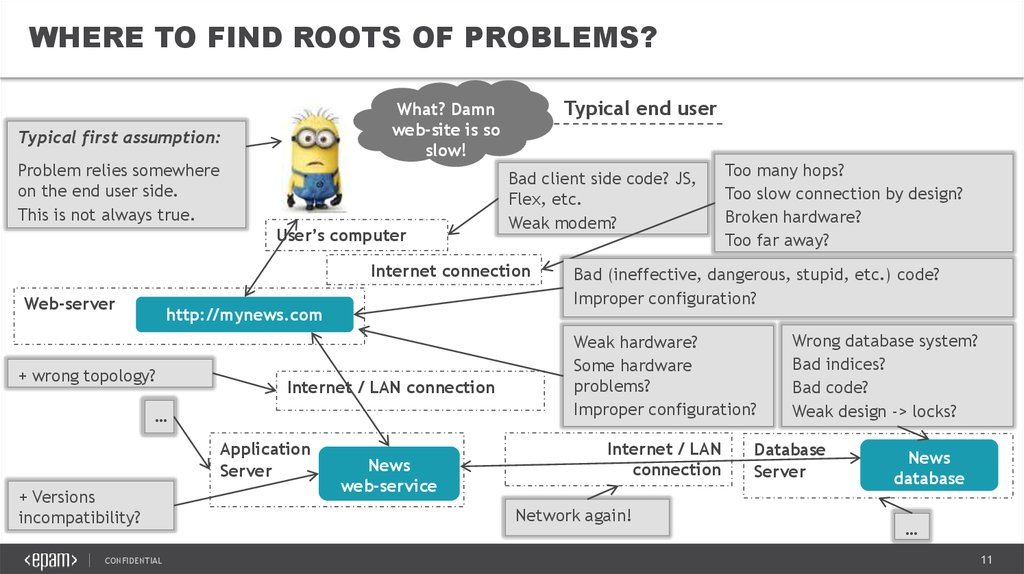

WHERE TO FIND ROOTS OF PROBLEMS?

Typical end user: "What? Damn web-site is so slow!"

Typical first assumption: the problem lies somewhere on the end-user side. This is not always true.

Request path: user's computer → Internet connection → web server (http://mynews.com) → Internet / LAN connection → application server (News web-service) → Internet / LAN connection → database server (News database)

Possible problem sources along the path:

• User's computer: bad client-side code (JS, Flex, etc.)? Weak modem?

• Network connections (Internet / LAN, and network again!): too many hops? Too slow connection by design? Broken hardware? Too far away? Wrong topology?

• Web and application servers: bad (ineffective, dangerous, stupid, etc.) code? Improper configuration? Weak hardware or other hardware problems? Version incompatibility?

• Database server: wrong database system? Bad indices? Bad code? Weak design -> locks?

12.

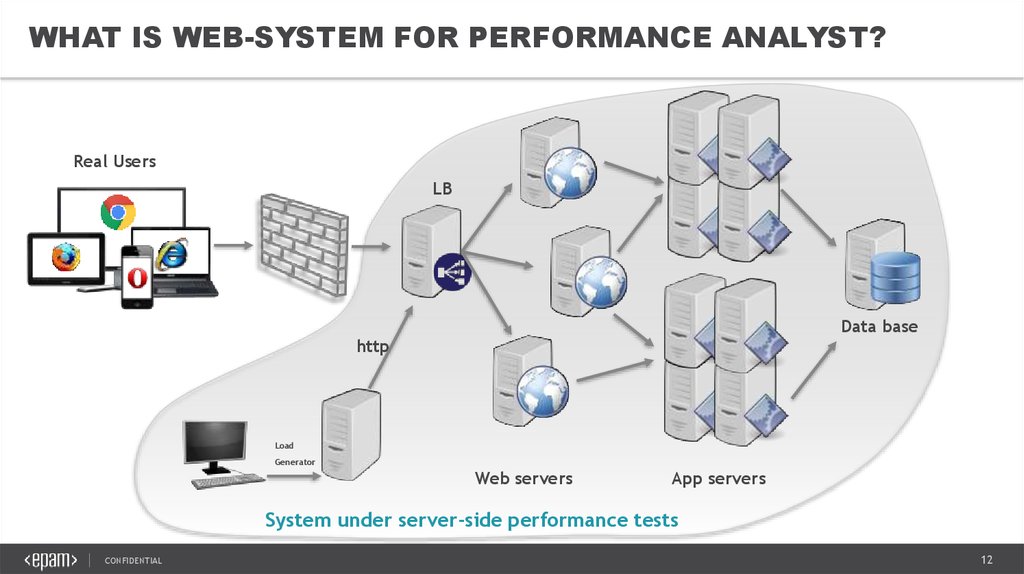

WHAT IS WEB-SYSTEM FOR PERFORMANCE ANALYST?

[Diagram: real users and the load generator send http requests to the LB, which feeds the web servers, app servers, and database]

System under server-side performance tests


14.

SOME DEFINITIONS

Performance = response times + capacity + stability + scalability

Performance of a software system: a property of the system which indicates its ability to be as fast, powerful, stable, and scalable as required.

15.

SOME DEFINITIONS

Performance bottleneck

Performance testing

Load testing

16.

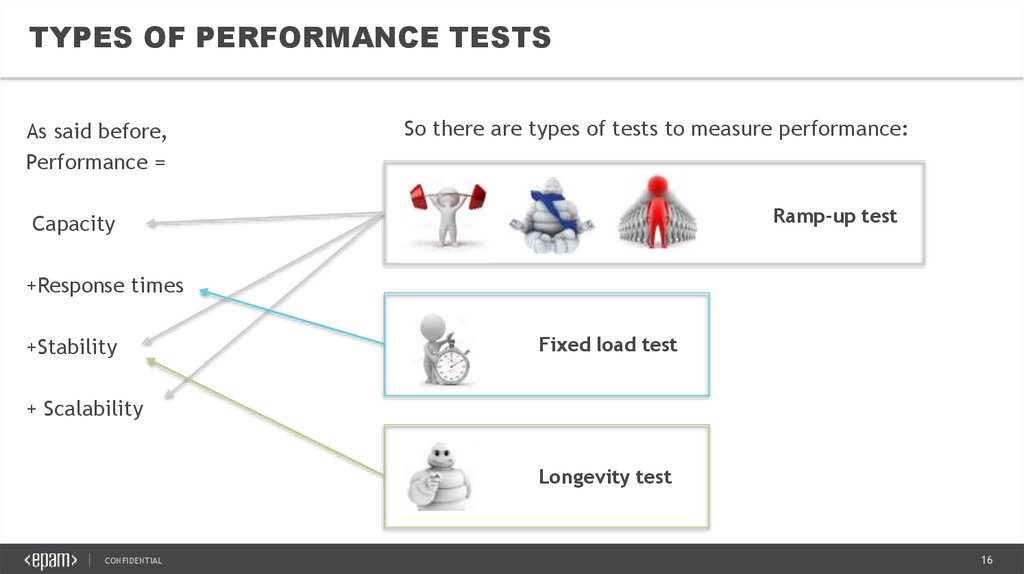

TYPES OF PERFORMANCE TESTS

As said before,

Performance = capacity + response times + stability + scalability

So there are types of tests to measure performance:

• Capacity: ramp-up test

• Response times: fixed load test

• Stability: longevity test

17.

ALSO TESTS TO MENTION

In fact, there are some more tests to mention:

• Rush-hour

• Rendezvous

• Render time

• Production drip

• … And even more to invent!

Ask me how to join the performance optimization group to learn the details

18.

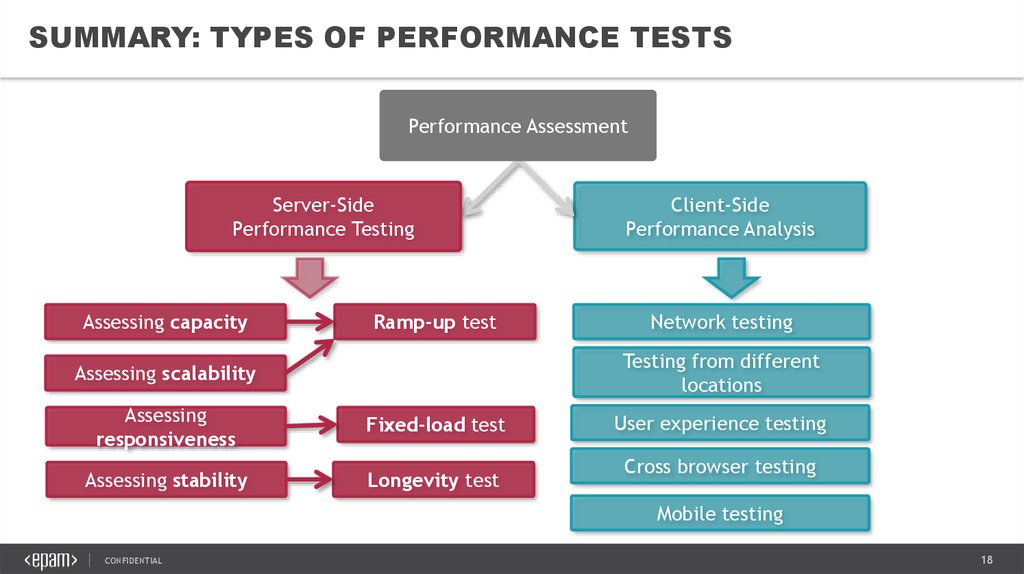

SUMMARY: TYPES OF PERFORMANCE TESTS

Performance assessment

Server-side performance testing:

• Assessing capacity: ramp-up test

• Assessing scalability

• Assessing responsiveness: fixed-load test

• Assessing stability: longevity test

Client-side performance analysis:

• Network testing

• Testing from different locations

• User experience testing

• Cross browser testing

• Mobile testing

19.

MECHANICS OF CAPACITY

Assume there is a web application that can process requests. It takes 1 second to process 1 request, using about 10% of resources.

What happens if 1 user sends 1 request?

What happens if 2 users simultaneously send a request each?

What happens if 10 users simultaneously send a request each?

What happens if 11 users simultaneously send a request each?

Some options to consider:

Option 1: The application will crash.

Option 2: The application will process 10 requests within 1 second and throw the 11th request away (not a good thing, because the 11th user is left completely unhappy).

Option 3: The application will process 10 requests within 1 second and put the 11th request in the queue (much better, but still not a good thing).

Option 4: The application will serve all 11 requests, but it will take more than 1 second to process each of them (still not a good thing, but much better than options 1-3).

What happens if 20 users simultaneously send a request each?

We cannot load this application with more than 10 requests/sec, because that is its natural capacity.
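The arithmetic behind options 3 and 4 can be sketched in a few lines of Python. This is a toy model under the slide's numbers (1 second and about 10% of resources per request), not a description of how any real server behaves; the function names and the even-sharing assumption in option 4 are illustrative.

```python
SERVICE_TIME_S = 1.0   # from the slide: 1 request takes 1 second
CAPACITY = 10          # from the slide: ~10% of resources per request

def queued_response_times(n_requests):
    """Option 3: serve up to CAPACITY requests at once, queue the rest."""
    # Request i waits for all full batches ahead of it, then takes 1 service time.
    return [SERVICE_TIME_S * (i // CAPACITY + 1) for i in range(n_requests)]

def shared_response_time(n_requests):
    """Option 4 (assumed even sharing): all requests run together, only slower."""
    slowdown = max(1.0, n_requests / CAPACITY)
    return SERVICE_TIME_S * slowdown

for n in (1, 2, 10, 11, 20):
    worst_queued = max(queued_response_times(n))
    shared = shared_response_time(n)
    throughput = n / shared  # requests completed per second under option 4
    print(f"{n:>2} users: queued worst case {worst_queued:.1f}s, "
          f"shared {shared:.1f}s, throughput {throughput:.1f} req/s")
```

In this model throughput never exceeds 10 requests per second, however many users arrive; beyond that point only the response time grows.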


20.

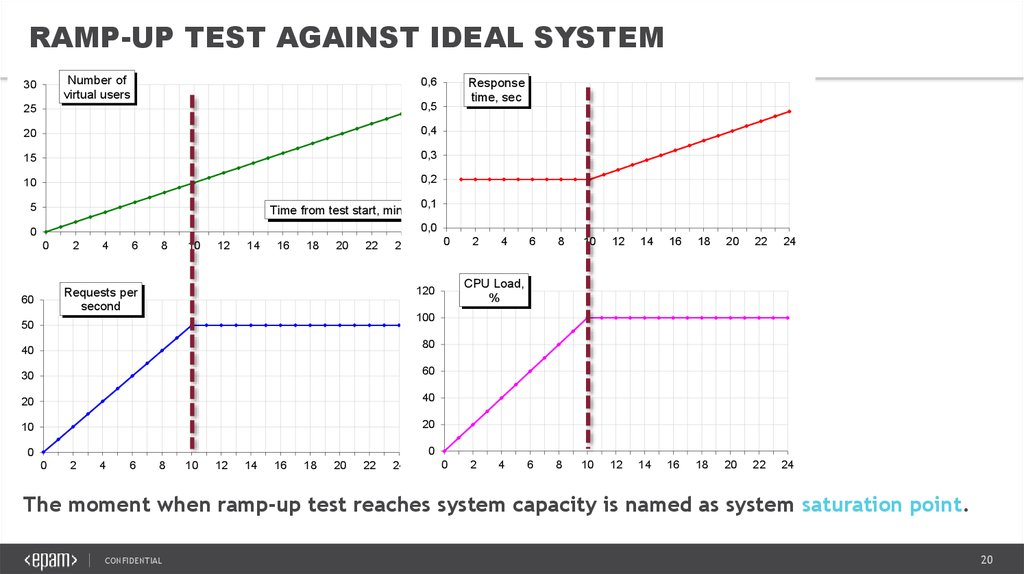

RAMP-UP TEST AGAINST IDEAL SYSTEM

[Charts vs. time from test start (min): number of virtual users, response time (sec), requests per second, CPU load (%)]

The moment when a ramp-up test reaches system capacity is called the system saturation point.
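One way to locate the saturation point in ramp-up results is to look for the load step after which throughput stops growing. The sketch below assumes the results are already available as two parallel lists; `find_saturation_point`, the 5% threshold, and the sample numbers are all illustrative, not taken from the slides.

```python
def find_saturation_point(users, throughput, min_gain=0.05):
    """Return the user count at which throughput stops growing, or None.

    min_gain is the smallest relative throughput gain per step that still
    counts as growth (an assumed threshold; tune it to the noise in your data).
    """
    for i in range(1, len(users)):
        prev, cur = throughput[i - 1], throughput[i]
        if prev > 0 and (cur - prev) / prev < min_gain:
            return users[i - 1]
    return None

# Illustrative ramp-up data: throughput grows linearly, then flattens.
virtual_users = [2, 4, 6, 8, 10, 12, 14]
requests_per_sec = [10, 20, 30, 40, 50, 50, 50]

print(find_saturation_point(virtual_users, requests_per_sec))
```

On real data the curve is noisy, so the series usually needs smoothing or a wider comparison window before a rule like this is applied.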


21.

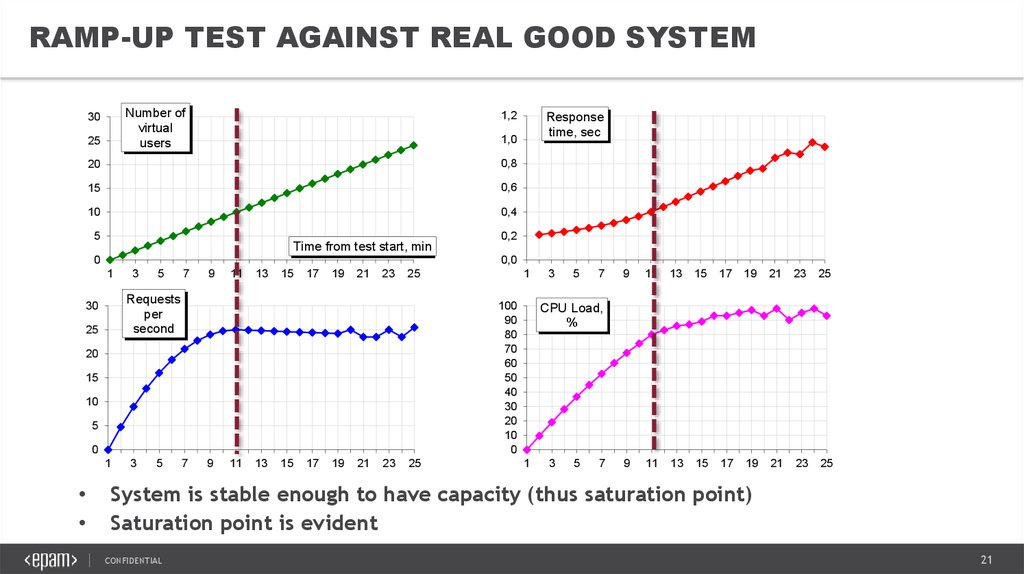

RAMP-UP TEST AGAINST REAL GOOD SYSTEM

[Charts vs. time from test start (min): number of virtual users, response time (sec), requests per second, CPU load (%)]

Good news:

• System is stable enough to have capacity (thus saturation point)

• Saturation point is evident


22.

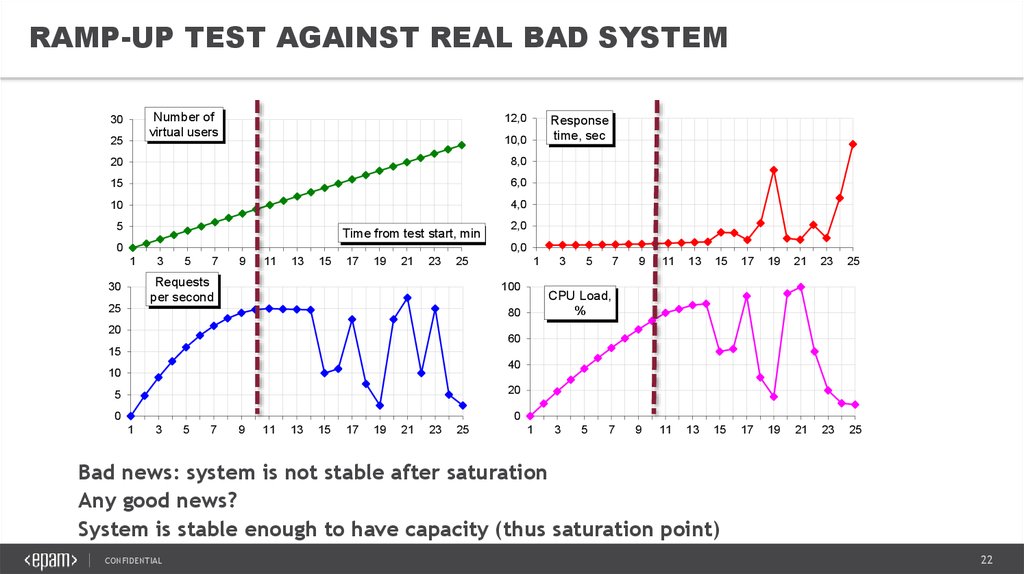

RAMP-UP TEST AGAINST REAL BAD SYSTEM

[Charts vs. time from test start (min): number of virtual users, response time (sec), requests per second, CPU load (%)]

Bad news: system is not stable after saturation

Any good news?

System is stable enough to have capacity (thus saturation point)


23.

RAMP-UP TEST AGAINST REALLY BAD SYSTEM

[Charts vs. time from test start (min): number of virtual users, response time (sec), requests per second, CPU load (%)]

Bad news: plenty of it

Perfect ramp-up test!

Any good news?

It was we who observed this, not real users


24.

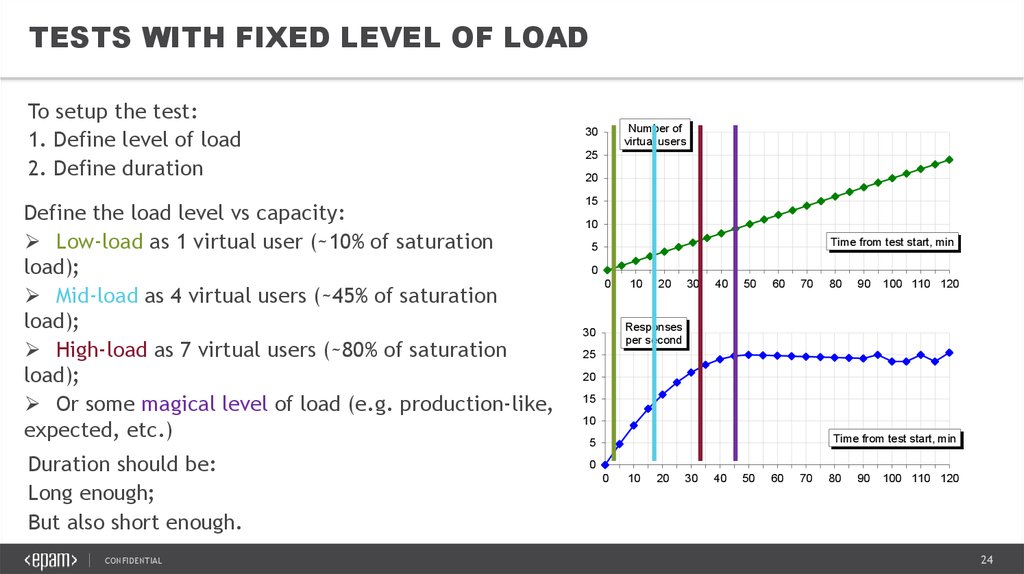

TESTS WITH FIXED LEVEL OF LOAD

To set up the test:

1. Define level of load

2. Define duration

Define the load level vs capacity:

• Low-load as 1 virtual user (~10% of saturation load);

• Mid-load as 4 virtual users (~45% of saturation load);

• High-load as 7 virtual users (~80% of saturation load);

• Or some magical level of load (e.g. production-like, expected, etc.)

Duration should be:

• Long enough;

• But also short enough.
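The load levels above can be derived from a measured saturation point rather than picked by hand. A minimal sketch, assuming a saturation load of 9 virtual users (a value inferred from the slide's 1/4/7 figures, not stated on it); `fixed_load_levels` is a hypothetical helper.

```python
# Fractions of saturation load, as given on the slide.
LEVELS = {"low": 0.10, "mid": 0.45, "high": 0.80}

def fixed_load_levels(saturation_users, fractions=LEVELS):
    """Map each named load level to a whole number of virtual users (at least 1)."""
    return {
        name: max(1, round(saturation_users * fraction))
        for name, fraction in fractions.items()
    }

# Assumed saturation point of 9 virtual users, e.g. from a ramp-up test.
print(fixed_load_levels(9))  # {'low': 1, 'mid': 4, 'high': 7}
```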

[Charts: number of virtual users and responses per second vs. time from test start, min (0 to 120)]

25.

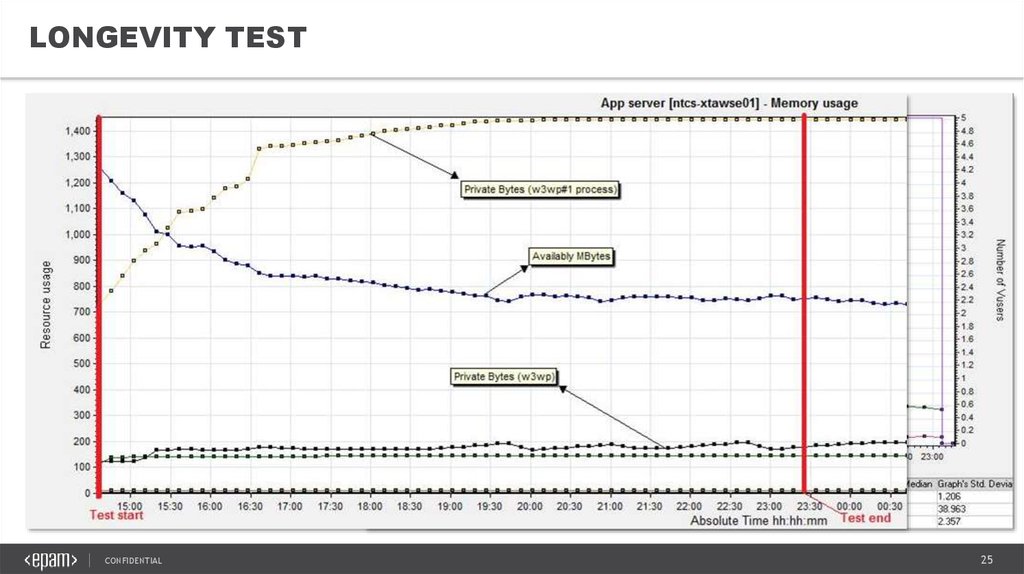

LONGEVITY TEST

Make a high-load test a long one → run a longevity test

Why is it a different test?

Example: graph from an 8-hour-long test

26.

WHY TO MONITOR

Issues and unexpected situations occur quite often during performance testing. To understand the root cause, find the bottlenecks, and analyze results thoroughly, you need to monitor the hardware resources of all system components involved.

Important: the load generator is also a component of the system under test, and a bottleneck may reside there as well!

It's good practice to monitor resources online during a running test (for a ramp-up test it's a must): that way you'll have at least a high-level idea of what is happening inside the system.

Also, you can try using your application manually from time to time during a running test to check that it's doing fine: sometimes it's the only way to catch sophisticated errors that automated tools may not be able to capture.
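Online monitoring boils down to sampling a metric at a fixed interval and recording it for later analysis. The sketch below uses only the Python standard library; `sample_metrics` and `read_load_average` are hypothetical names, and the 1-minute load average (available on Unix-like systems only) stands in for whatever counters your monitoring tool actually exposes.

```python
import csv
import os
import sys
import time

def read_load_average():
    """Return the 1-minute load average, or None where the OS does not provide it."""
    try:
        return os.getloadavg()[0]
    except (AttributeError, OSError):
        return None

def sample_metrics(read_metric, samples, interval_s, out):
    """Poll read_metric() `samples` times and write CSV rows to `out`."""
    writer = csv.writer(out)
    writer.writerow(["elapsed_s", "value"])
    start = time.monotonic()
    for _ in range(samples):
        writer.writerow([round(time.monotonic() - start, 1), read_metric()])
        time.sleep(interval_s)

if __name__ == "__main__":
    # Three samples, one second apart, printed to the console.
    sample_metrics(read_load_average, samples=3, interval_s=1, out=sys.stdout)
```

Running the same sampler on the load generator as well as on the servers makes it possible to check whether the generator itself is the bottleneck.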


27.

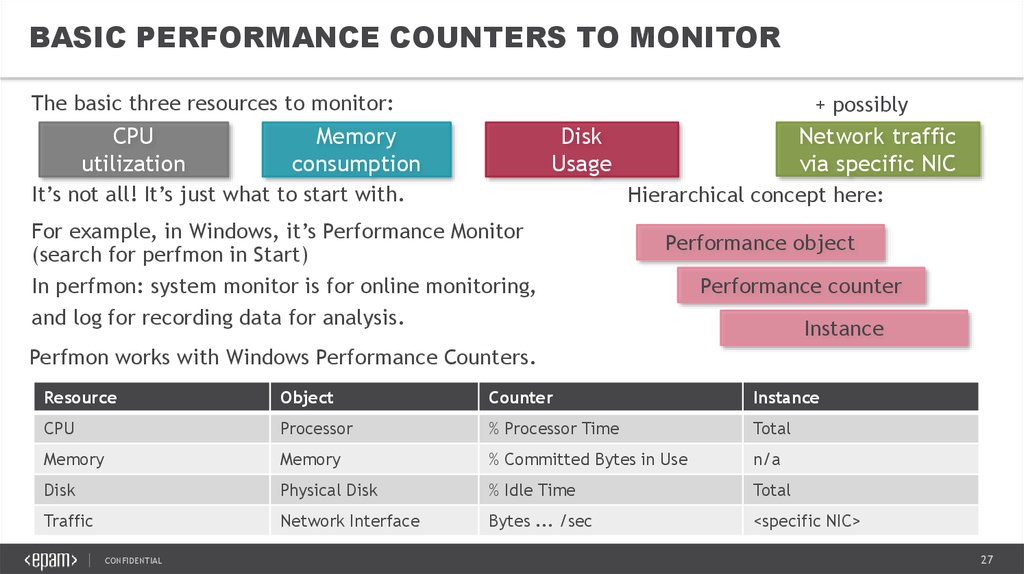

BASIC PERFORMANCE COUNTERS TO MONITOR

The basic three resources to monitor:

• CPU utilization

• Memory consumption

• Network traffic via a specific NIC

• + possibly disk usage

That's not all! It's just what to start with.

For example, in Windows, use Performance Monitor (search for perfmon in Start). In perfmon, the system monitor is for online monitoring, and the log is for recording data for analysis.

Perfmon works with Windows Performance Counters, which follow a hierarchical concept: Performance object → Performance counter → Instance.

| Resource | Object | Counter | Instance |
|---|---|---|---|
| CPU | Processor | % Processor Time | _Total |
| Memory | Memory | % Committed Bytes in Use | n/a |
| Disk | Physical Disk | % Idle Time | _Total |
| Traffic | Network Interface | Bytes ... /sec | <specific NIC> |
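The object → counter → instance hierarchy maps directly onto the counter path strings that Windows performance tools accept, in the form `\Object(Instance)\Counter`. A minimal sketch that builds such paths for three of the table rows; `counter_path` is a hypothetical helper, the object name is spelled `PhysicalDisk` and the instance `_Total` as Windows writes them, and the network row is left out because the slide elides its counter name.

```python
def counter_path(obj, counter, instance=None):
    """Build a local counter path: \\Object(Instance)\\Counter."""
    inst = f"({instance})" if instance else ""
    return f"\\{obj}{inst}\\{counter}"

# (object, counter, instance) triples taken from the table above.
BASIC_COUNTERS = [
    ("Processor", "% Processor Time", "_Total"),
    ("Memory", "% Committed Bytes In Use", None),
    ("PhysicalDisk", "% Idle Time", "_Total"),
]

for obj, counter, instance in BASIC_COUNTERS:
    print(counter_path(obj, counter, instance))
```

Paths in this form can be added to a perfmon data collector or passed to a command-line collector such as `typeperf`.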


28.

WHAT TO REPORT

The rule is very simple:

Report results vs. purpose of the test

So, just remember what the goals of the tests are, and it's clear what to report:

• Ramp-up test: capacity (saturation point)

• Fixed load test: response times

• Longevity test: stability

29.

HOW TO REPORT

Choose the right way of reporting, so your information reaches the addressee

The general rules are simple again:

• Remember you're writing a report for someone who will [make an attempt to] read it

• Although you might have collected tons of data, the report should contain useful information, not data

• Keep information clear, accurate, and consistent

Typical sections of a performance test results report are:

• Title of the report, author, and date

• Test setup configuration (what, when, where, and how was tested; objectives of the test)

• Table with related artifacts (links to any raw data, related test results reports, etc.)

• Test results section describing findings with all necessary details, supporting charts and tables

• Conclusions and recommendations.* The most difficult one!
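As one illustration of "information, not data", raw response times can be condensed into a handful of numbers before they go into the results section. A sketch using Python's `statistics` module; `summarize_response_times` and the choice of metrics (mean, median, 90th percentile, maximum) are assumptions, not a format prescribed by the slides.

```python
import statistics

def summarize_response_times(samples_s):
    """Condense raw response times (seconds) into a few reportable numbers."""
    ordered = sorted(samples_s)
    # 9 cut points (p10..p90); "inclusive" keeps results within the observed range.
    deciles = statistics.quantiles(ordered, n=10, method="inclusive")
    return {
        "count": len(ordered),
        "mean_s": statistics.fmean(ordered),
        "median_s": statistics.median(ordered),
        "p90_s": deciles[-1],
        "max_s": ordered[-1],
    }

# Illustrative measurements, not real test results.
summary = summarize_response_times([0.2, 0.3, 0.25, 0.4, 1.5])
for name, value in summary.items():
    print(f"{name}: {value}")
```

A single slow outlier moves the mean and the maximum far more than the median, which is why a report usually shows several of these figures side by side.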


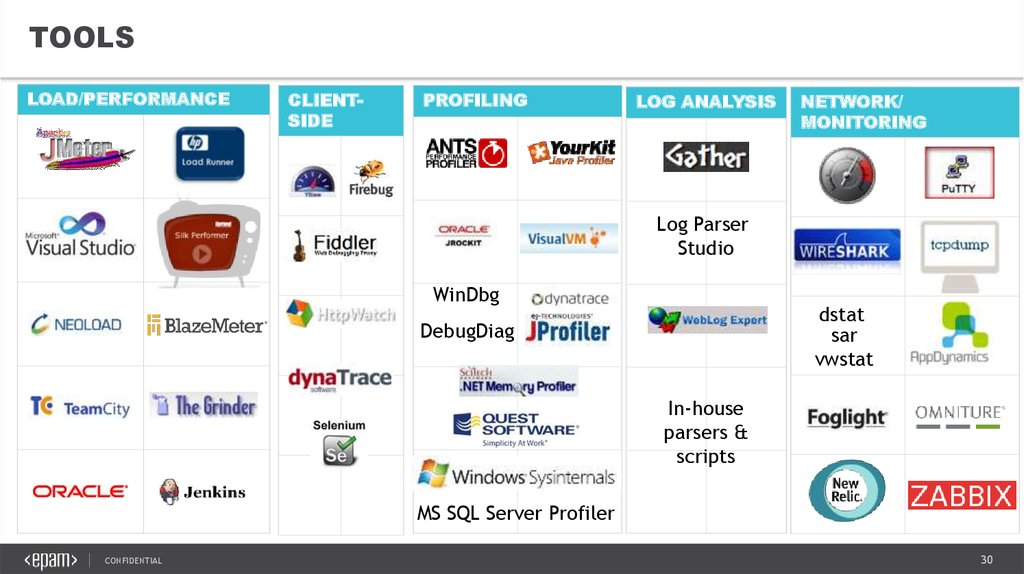

30.

TOOLS

Categories: load/performance, client side, profiling, log analysis, network/monitoring

Tools named on the slide: Log Parser Studio, WinDbg, dstat, sar, vmstat, DebugDiag, in-house parsers & scripts, MS SQL Server Profiler

31.

MATERIALS TO EXPLORE

Useful book that provides a great introduction to both server-side and client-side performance testing:

“Web load testing for dummies”, by Scott Barber and Colin Mason

www.itexpocenter.nl/iec/compuware/WebLoadTestingForDummies.pdf

Nice book about performance testing of .NET web systems (in fact, it covers a lot of general concepts):

“Performance testing Microsoft .NET web applications”, by Microsoft ACE team

http://www.microsoft.com/mspress/books/5788.aspx

Statistics for dummies:

“The Cartoon Guide to Statistics”, by Larry Gonick and Woollcott Smith

http://www.amazon.com/Cartoon-Guide-Statistics-Larry-Gonick/dp/0062731025

And an introduction to JMeter: http://habrahabr.ru/post/140310/

32.

THANK YOU

Marharyta Halamuzdava Marharyta_Halamuzdava@epam.com

Victoria Blinova Victoria_Blinova@epam.com

Aliaksandr Kavaliou Aliaksandr_Kavaliou@epam.com
