
Computer Architecture

1.

Some kind of presentation about the Computer Architecture course

Perhaps it will be useful.

It was made by Artyom Tuzòv (@artyomzifir); by the way, you can thank him.

2.

Briefly speaking

• Here are all the necessary notes and slides from presentations that may be useful.

• Maybe something is wrong, but idc and idk (almost)

• Good luck with all of this!

3.

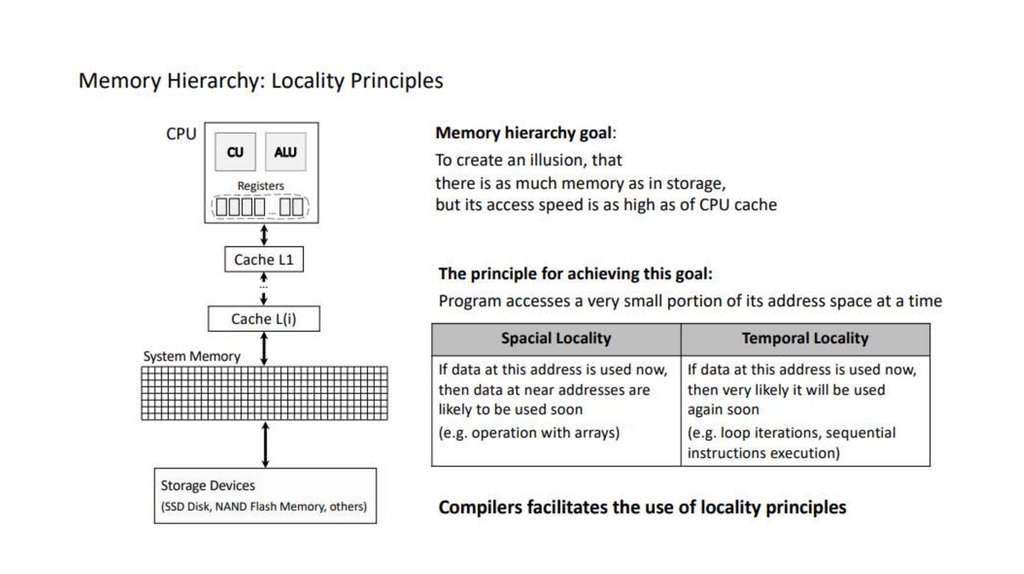

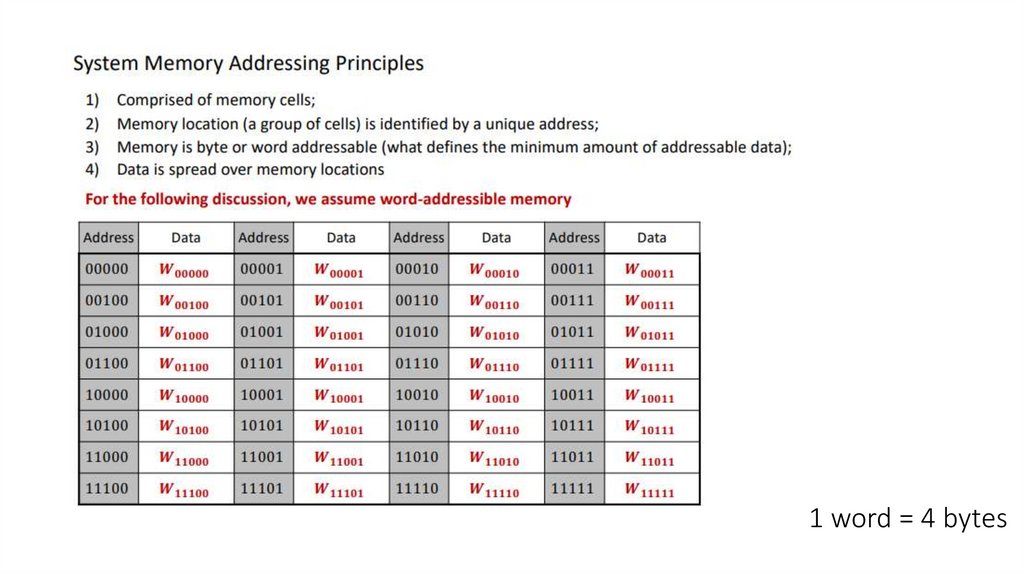

Week #1

• Memory hierarchy

• Moore's law

• CPU device (ALU, Control Unit, Registers) and Von Neumann scheme

• What is FPGA?

4.

5.

6.

7.

Week #2

• Performance metrics (Benchmarks)

• 9 great ideas of computer architecture

• Latency / Throughput / Execution Time

• Amdahl’s Law
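Amdahl's Law from the list above can be sketched in a few lines. This is a minimal illustration, not course material: the function name `amdahl_speedup` and its parameter names are made up for this example. It uses the standard form, speedup = 1 / ((1 - p) + p / s), where p is the fraction of run time that benefits from the improvement and s is how much faster that fraction becomes.

```python
def amdahl_speedup(improved_fraction: float, factor: float) -> float:
    """Overall speedup when `improved_fraction` of the run time gets `factor` times faster.

    Both names are hypothetical; the formula is the standard Amdahl's Law.
    """
    if not 0.0 <= improved_fraction <= 1.0:
        raise ValueError("improved_fraction must be between 0 and 1")
    if factor <= 0:
        raise ValueError("factor must be positive")
    return 1.0 / ((1.0 - improved_fraction) + improved_fraction / factor)


# 80% of the program runs 4x faster, the remaining 20% is unchanged.
print(f"{amdahl_speedup(0.8, 4):.2f}")

# Even an enormous factor cannot beat 1 / (1 - 0.8) = 5x overall.
print(f"{amdahl_speedup(0.8, 1e9):.2f}")
```

The second call shows why the untouched part of the program limits the total gain.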

8.

9 (10) GREAT ideas of CompArch

1. Burmyakov is gigachad

2. Hierarchy of memories

3. Use abstraction to simplify design

4. Design for Moore’s law

5. Performance via parallelism

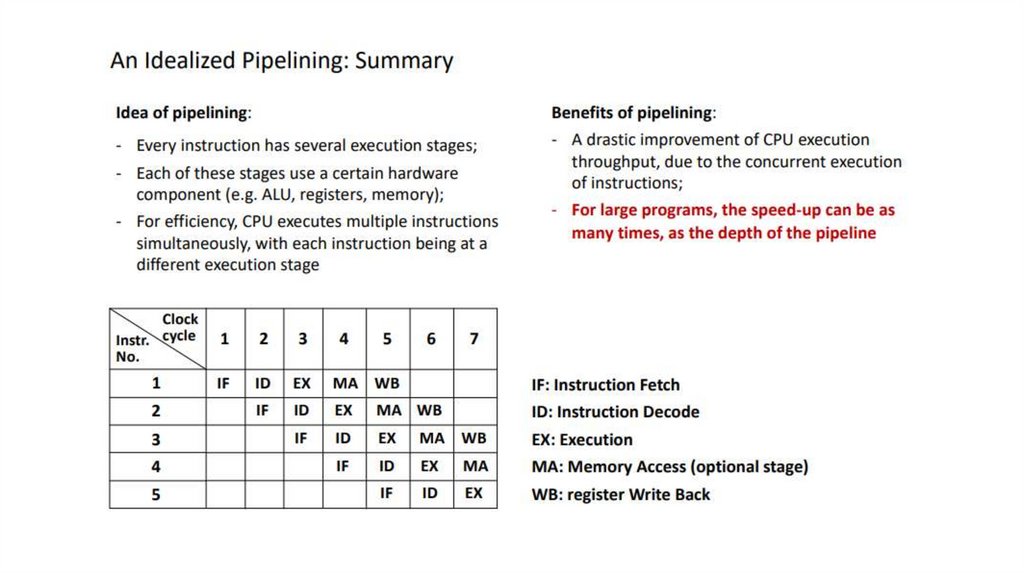

6. Performance via pipelining

7. Performance via speculation (prediction)

8. Dependability (reliability) via redundancy

9. Make the common case fast

10. Finite State Machines (FSM)

9.

What is a benchmark?

• What is better: more cores or higher frequency? Idk tbh :-/

• Some metrics may matter differently in different situations, so we use benchmarks (programs that measure performance on different tasks)

10.

11.

Latency vs. Execution

12.

Latency vs. Throughput

13.

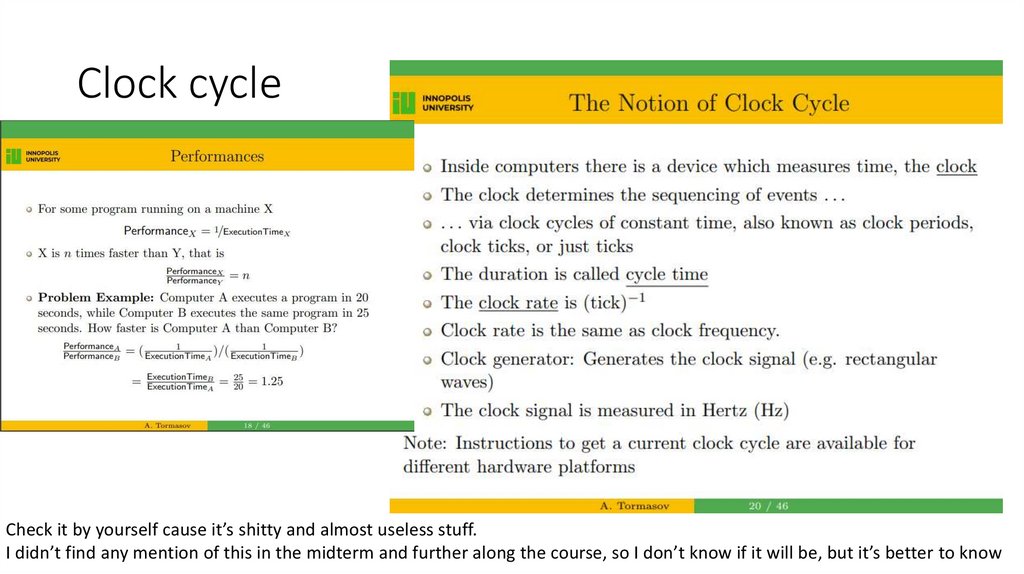

Clock cycle

Check it yourself, because it’s shitty and almost useless stuff.

I didn’t find any mention of this in the midterm or later in the course, so I don’t know if it will come up, but it’s better to know it.

14.

Week #3

• Combinational Logic Circuits

• Transistor

• Universal Logic Gates (NAND / NOR)

• Critical path & Propagation Delay
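For the last item in this list, here is a rough sketch of how a critical path can be computed: the circuit's delay is the longest sum of gate propagation delays on any path from an input to an output. The circuit, gate names, and delay values below are invented for illustration only.

```python
# Hypothetical circuit: gate name -> (propagation delay in ns, signals feeding the gate).
# Names that are not gates ("a", "b", "c") are primary inputs that arrive at time 0.
CIRCUIT = {
    "and1": (2, ["a", "b"]),
    "or1": (3, ["and1", "c"]),
    "not1": (1, ["or1"]),
}


def arrival_time(node: str, circuit: dict) -> int:
    """Time at which the signal at `node` becomes valid."""
    if node not in circuit:
        return 0  # primary input
    delay, inputs = circuit[node]
    # A gate can only settle after its slowest input has settled.
    return delay + max((arrival_time(i, circuit) for i in inputs), default=0)


def critical_path_delay(circuit: dict) -> int:
    """Longest arrival time over all gates, i.e. the critical path delay."""
    return max(arrival_time(gate, circuit) for gate in circuit)


print(critical_path_delay(CIRCUIT))  # and1 (2) -> or1 (3) -> not1 (1) = 6
```

The clock period of a real design has to be at least this long, which is why the slowest path matters more than the average one.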

15.

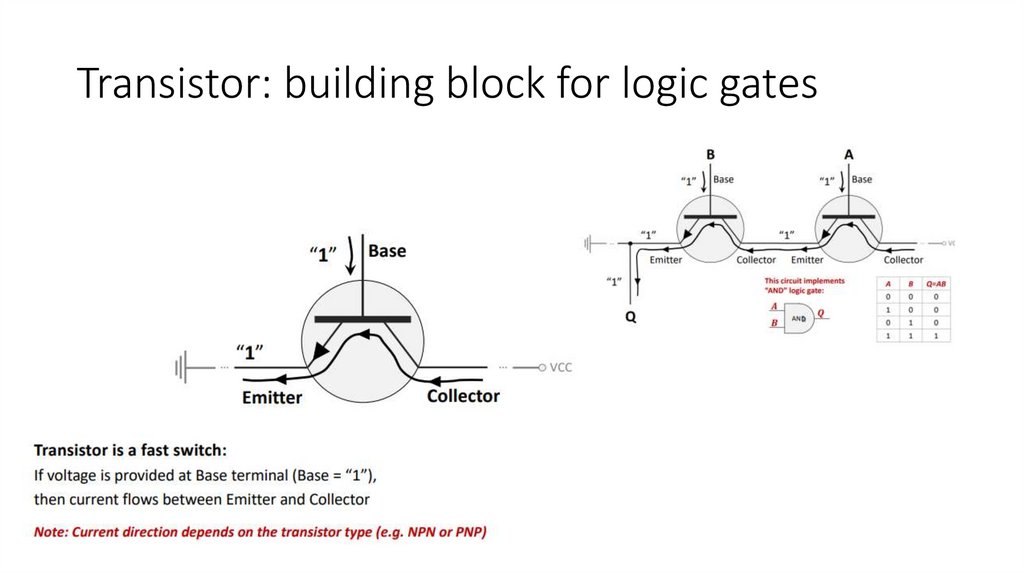

Transistor: building block for logic gates

16.

It’s a base you should know

Logic gates correspond to physical devices implemented by using transistors
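The Week #3 topic "Universal Logic Gates (NAND / NOR)" can be checked with a small sketch: every other basic gate is built here from NAND alone. The Python function names are made up for this example, and bits are modelled as the integers 0 and 1.

```python
def nand(a: int, b: int) -> int:
    """The only primitive gate used in this sketch."""
    return 1 - (a & b)


def not_(a: int) -> int:
    return nand(a, a)


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def or_(a: int, b: int) -> int:
    # De Morgan: a OR b = NOT(NOT a AND NOT b)
    return nand(not_(a), not_(b))


def xor_(a: int, b: int) -> int:
    # Classic four-NAND XOR construction.
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


# Print the truth table: a, b, AND, OR, XOR.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_(a, b), or_(a, b), xor_(a, b))
```

The same exercise works with NOR as the single primitive.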

17.

Critical path & Propagation Delay

18.

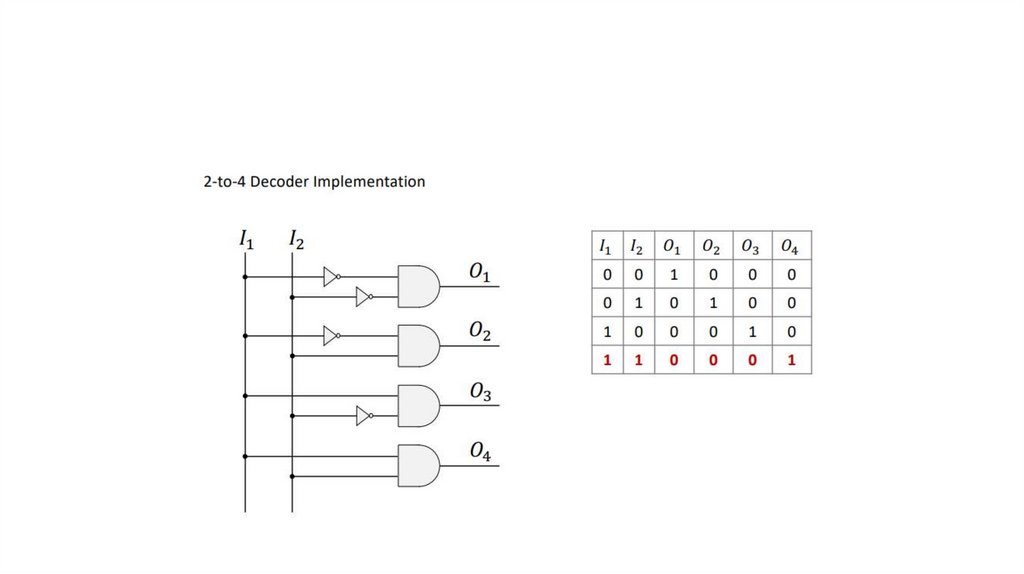

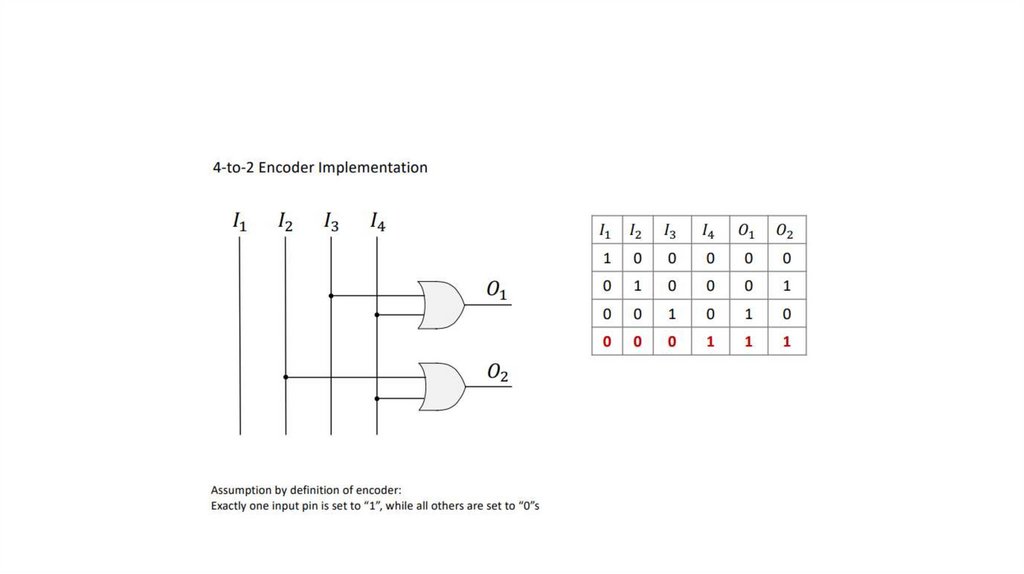

Mux / DeMux & Decoder/Encoder

19.

20.

21.

22.

23.

24.

Week #4

• Signed Numbers and how to implement them using the binary system

• Subtraction and Addition (+Multiplier)

• Characteristics of Combinational Logic Circuits

25.

Signed Numbers

Useless, but better to know than not
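The slide itself is an image, so the exact encoding it covers is not visible here. Assuming it is two's complement (the usual way to store signed integers in binary), a minimal sketch of the conversion in both directions could look like this. The function names and the 8-bit default width are choices made for this example.

```python
def to_twos_complement(value: int, bits: int = 8) -> str:
    """Encode a signed integer as a two's complement bit string."""
    lowest, highest = -(1 << (bits - 1)), (1 << (bits - 1)) - 1
    if not lowest <= value <= highest:
        raise ValueError(f"{value} does not fit in {bits} bits")
    # Masking maps negative values to 2**bits + value.
    return format(value & ((1 << bits) - 1), f"0{bits}b")


def from_twos_complement(pattern: str) -> int:
    """Decode a two's complement bit string back to a signed integer."""
    raw = int(pattern, 2)
    # A leading 1 means the value is negative: subtract 2**bits.
    return raw - (1 << len(pattern)) if pattern[0] == "1" else raw


print(to_twos_complement(5))             # 00000101
print(to_twos_complement(-5))            # 11111011
print(from_twos_complement("11111011"))  # -5
```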

26.

Adder & Subtraction
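A possible way to picture this slide in code is a ripple-carry adder built from full adders, with subtraction done as "add the inverted bits plus one". This is an assumption about what the (image-only) slide shows; the function names and the 4-bit default width are invented for this sketch.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One-bit full adder: returns (sum bit, carry out)."""
    total = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return total, carry_out


def ripple_add(x: int, y: int, bits: int = 4, carry_in: int = 0) -> tuple[int, int]:
    """Chain `bits` full adders; returns (result, final carry out)."""
    result, carry = 0, carry_in
    for i in range(bits):
        s, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= s << i
    return result, carry


def ripple_sub(x: int, y: int, bits: int = 4) -> tuple[int, int]:
    """x - y computed as x + (NOT y) + 1, reusing the same adder."""
    mask = (1 << bits) - 1
    return ripple_add(x, ~y & mask, bits, carry_in=1)


print(ripple_add(5, 3))  # (8, 0)
print(ripple_sub(5, 3))  # (2, 1)
print(ripple_sub(3, 5))  # (14, 0): 1110 is -2 in 4-bit two's complement
```

Reusing the adder for subtraction is the reason hardware usually needs only one arithmetic circuit plus a row of inverters.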

27.

28.

29.

Week #5

• Finite State Machine (Bi-Bi and traffic light)

• Instruction Set (Stack / Accumulator / Register-Based architectures)

• CISC vs. RISC (MIPS?)

• Design Principles

• Program Counter

• Set/Reset & Enable Latches
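For the last item in this list, here is a small simulation of a Set/Reset latch and an enable (gated) latch. It assumes the common version made of two cross-coupled NOR gates; the slides are images, so the course may have drawn a different variant. All function names are invented for this sketch.

```python
def nor(a: int, b: int) -> int:
    return 1 - (a | b)


def sr_latch(s: int, r: int, q: int, q_bar: int) -> tuple[int, int]:
    """Settle two cross-coupled NOR gates; returns the new (Q, not-Q)."""
    for _ in range(4):  # a few passes are enough for the feedback loop to settle
        new_q = nor(r, q_bar)
        new_q_bar = nor(s, new_q)
        if (new_q, new_q_bar) == (q, q_bar):
            break
        q, q_bar = new_q, new_q_bar
    return q, q_bar


def enable_latch(s: int, r: int, enable: int, q: int, q_bar: int) -> tuple[int, int]:
    """Gated SR latch: S and R only reach the latch while `enable` is 1."""
    return sr_latch(s & enable, r & enable, q, q_bar)


state = (0, 1)                       # start with Q = 0
state = sr_latch(1, 0, *state)       # set
print(state)                         # (1, 0)
state = sr_latch(0, 0, *state)       # hold: the latch remembers the value
print(state)                         # (1, 0)
state = enable_latch(0, 1, 0, *state)  # reset is ignored while enable = 0
print(state)                         # (1, 0)
state = enable_latch(0, 1, 1, *state)  # reset goes through
print(state)                         # (0, 1)
```

The input S = R = 1 is left out on purpose: it is the forbidden combination for this kind of latch.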

30.

FSM

• An FSM is defined as: a set of states S (circles), an initial state s0, and a transition function that maps from the current input and current state to the output and the next state (arrows between states)
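The definition above maps almost directly onto a lookup table. Below is a minimal sketch for a traffic light (one of the examples named in the Week #5 list); the state names, the single `"tick"` input, and the output strings are all made up for illustration.

```python
# Transition function: (current state, input) -> (next state, output).
TRANSITIONS = {
    ("RED", "tick"): ("GREEN", "go"),
    ("GREEN", "tick"): ("YELLOW", "slow down"),
    ("YELLOW", "tick"): ("RED", "stop"),
}

INITIAL_STATE = "RED"  # s0


def run_fsm(inputs: list[str], state: str = INITIAL_STATE) -> tuple[str, list[str]]:
    """Feed the inputs one by one; returns (final state, list of outputs)."""
    outputs = []
    for symbol in inputs:
        state, output = TRANSITIONS[(state, symbol)]
        outputs.append(output)
    return state, outputs


final_state, outputs = run_fsm(["tick"] * 4)
print(final_state)  # GREEN
print(outputs)      # ['go', 'slow down', 'stop', 'go']
```

Each dictionary key is one arrow in the state diagram, so adding a state or an input means adding entries, not changing the loop.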

31.

Instruction set

• Instruction Set (IS) - the vocabulary of a computer’s language, e.g. the RISC-V or Intel x86 instruction sets. An instruction set includes the complete set of instructions recognizable by a given hardware platform. Different computers have different instruction sets, but many aspects are similar.

32.

33.

CISC vs. RISC

• Reduced Instruction Set Computer (RISC): it simplifies the processor by efficiently implementing only the instructions that are frequently used in programs, while the less common operations are implemented as subroutines. (ARM, MIPS)

• Complex Instruction Set Computer (CISC): it has many specialized instructions, some of which may only rarely be used in practical programs. (Intel x86)

34.

Design Principles & PC

• Design Principles

• 1. Simplicity favors regularity

• 2. Smaller is faster

• 3. Make the common case fast

• 4. Good design demands good compromises

• Program Counter (PC) - the index/address of the program instruction being executed by the processor at the current time

• Program Counter register (PC register) - the register containing the program counter value

PC-relative addressing

• This mode can be used to load a register with a value stored in program memory

• Target address = PC + offset × 4 (each RISC-V instruction takes 4 bytes)

• PC already incremented by 4 by this time

35.

Set/Reset Latch Circuit

36.

Enable Latch

37.

Week #6

• Flip-Flops

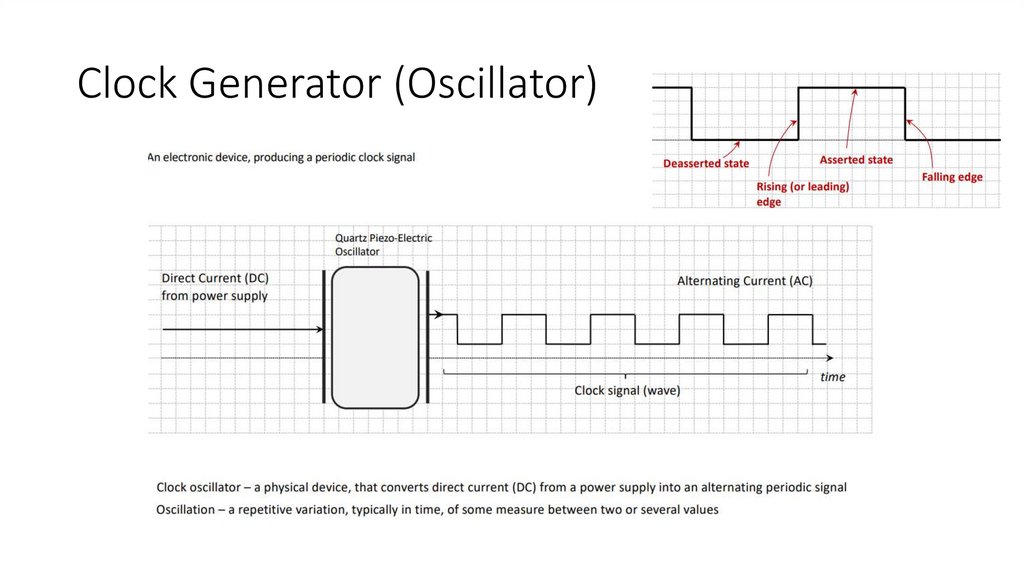

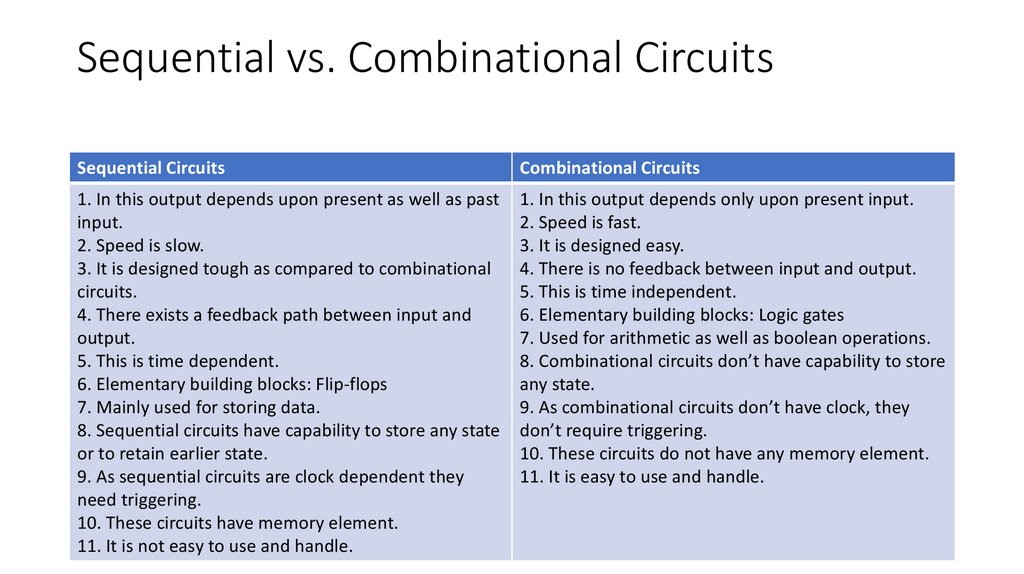

• Clock Generator (Oscillator)

• Sequential and Non-sequential Logic Circuits

• Types of memory

• Volatile & Non-volatile

38.

Clock Generator (Oscillator)

39.

A flip-flop in digital electronics is a circuit with two stable states that can be used to store binary data.
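To make "two stable states that store binary data" concrete, here is a sketch of one specific kind of flip-flop: a positive-edge-triggered D flip-flop. Choosing the D type is an assumption, since the slide does not say which one it means, and the class and method names are invented for this example.

```python
class DFlipFlop:
    """Stores one bit; the stored value changes only on a rising clock edge."""

    def __init__(self) -> None:
        self.q = 0            # stored bit (one of the two stable states)
        self._prev_clk = 0    # last clock level seen, used to detect edges

    def step(self, clk: int, d: int) -> int:
        """Apply the current clock level and data input; returns Q."""
        if clk == 1 and self._prev_clk == 0:  # rising edge: capture D
            self.q = d
        self._prev_clk = clk
        return self.q


ff = DFlipFlop()
print(ff.step(clk=0, d=1))  # 0: no edge yet, the old value is kept
print(ff.step(clk=1, d=1))  # 1: rising edge captures D
print(ff.step(clk=1, d=0))  # 1: clock stays high, D is ignored
print(ff.step(clk=0, d=0))  # 1: falling edge, still ignored
print(ff.step(clk=1, d=0))  # 0: next rising edge captures the new D
```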

40.

Sequential vs. Combinational Circuits

| # | Sequential Circuits | Combinational Circuits |
|---|---|---|
| 1 | Output depends on present as well as past input. | Output depends only on present input. |
| 2 | Speed is slow. | Speed is fast. |
| 3 | Harder to design than combinational circuits. | Easy to design. |
| 4 | There is a feedback path between input and output. | There is no feedback between input and output. |
| 5 | Time dependent. | Time independent. |
| 6 | Elementary building blocks: flip-flops. | Elementary building blocks: logic gates. |
| 7 | Mainly used for storing data. | Used for arithmetic as well as Boolean operations. |
| 8 | Can store any state or retain an earlier state. | Cannot store any state. |
| 9 | Clock dependent, so they need triggering. | No clock, so they don’t require triggering. |
| 10 | Have a memory element. | Do not have any memory element. |
| 11 | Not easy to use and handle. | Easy to use and handle. |

41.

Types of Memory

• Volatile / Non-volatile (volatile: a power supply is necessary to keep the data). Examples of volatile memory: caches, registers, main system memory.

• Primary: typically main system memory, cache, and ROM for BIOS are assumed. Secondary: memory units at a lower level of the memory hierarchy (SSD, etc.)

42.

Some graphs

43.

Some tables

44.

Week #7

• Introduction to RISC-V

• Harvard & Von Neumann Architectures

• Principle of Instructions Execution for Register-Based CPU

• RISC-V Architecture Layout

• Verilog

45.

Harvard vs. Von Neumann Architecture

46.

Principle of Instructions Execution for Register-Based CPU

47.

RISC-V Architecture

48.

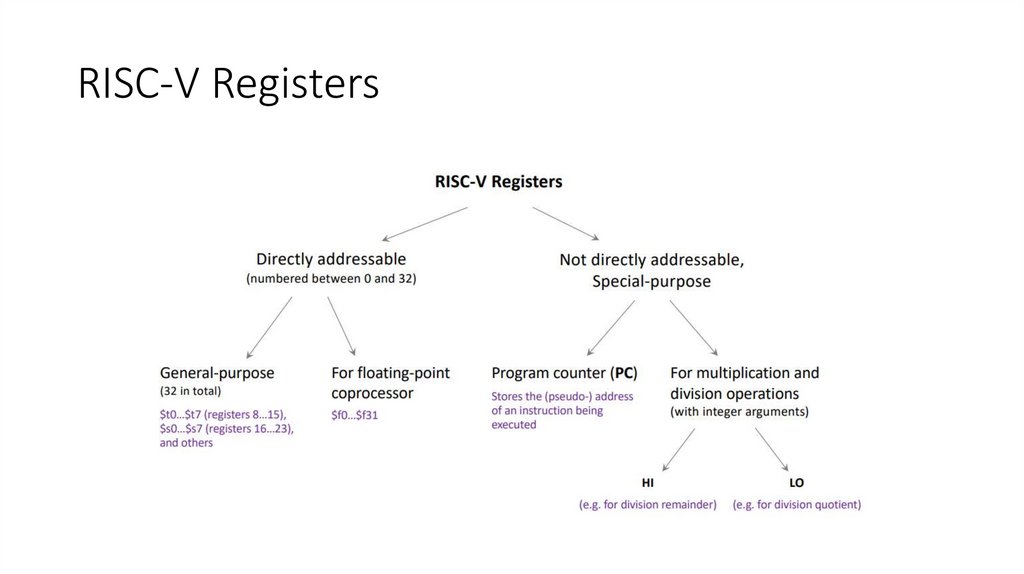

RISC-V Registers

49.

Verilog

50.

Notes about signs and syntax

51.

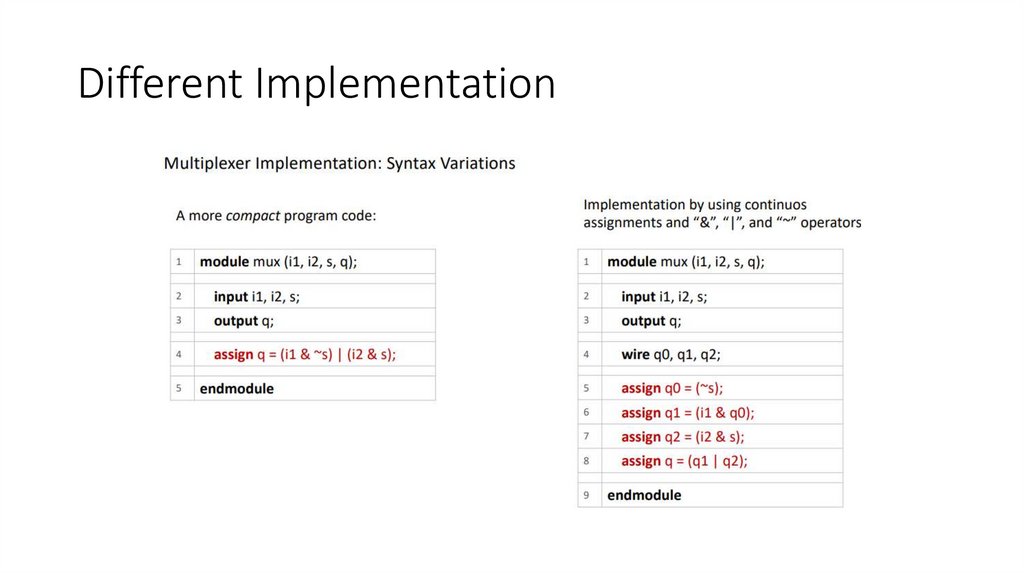

Different Implementation

52.

Different implementation

53.

You passed the midterm! Congratulations!

• Now we are moving on to the most complicated stuff…

54.

Week 9

• RISC-V Processor: Mapping Subcircuits to Instruction Execution Stages: IF, ID, EXE, MA, WB

• Some recapping and quiz checking

55.

Week #9

• I hope I will get this done; if not, then soon (or not)

56.

Week 11

• GPU vs. CPU? What’s the difference?

• Flynn’s Taxonomy (MIMD, SISD, and others)

• RISC-V Processor: Mapping Subcircuits to Instruction Execution Stages: IF, ID, EXE, MA, WB

57.

58.

59.

Types of instructions in RISC-V

• Type I (Immediate): Type I instructions contain an immediate value used as an operand. Examples: ADDI (add immediate), LW (load word with immediate offset).

• Type B (Branch): Type B instructions are used for conditional branches. Examples: BEQ (branch if equal), BNE (branch if not equal).

• Type R (Register): Type R instructions perform operations between registers. Examples: ADD (addition), SUB (subtraction), AND (logical AND).

• Type U (Upper Immediate): Type U instructions are used for loading large immediate values. Example: LUI (load upper immediate).

• Type S (Store): Type S instructions are used to store data in memory. Example: SW (store word).

• Type J (Jump): Type J instructions are used for unconditional jumps. Example: JAL (jump and link with saving the return address).
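As a rough illustration of how hardware (or a disassembler) tells these types apart, the sketch below looks at the low 7 bits of a 32-bit instruction word, which hold the opcode in the RV32I base instruction set. The table covers only the example instructions named above, and the Python names are invented for this sketch.

```python
# Opcode (bits 6..0) -> instruction type, for the examples listed on the slide.
OPCODE_TO_TYPE = {
    0b0110011: "R",  # ADD, SUB, AND
    0b0010011: "I",  # ADDI
    0b0000011: "I",  # LW
    0b0100011: "S",  # SW
    0b1100011: "B",  # BEQ, BNE
    0b0110111: "U",  # LUI
    0b1101111: "J",  # JAL
}


def instruction_type(word: int) -> str:
    """Classify a 32-bit instruction word by its opcode field."""
    return OPCODE_TO_TYPE.get(word & 0x7F, "unknown")


def i_type_immediate(word: int) -> int:
    """Sign-extended 12-bit immediate stored in bits 31..20 of an I-type word."""
    imm = (word >> 20) & 0xFFF
    return imm - 0x1000 if imm & 0x800 else imm


print(instruction_type(0x00500093))  # addi x1, x0, 5   -> I
print(i_type_immediate(0x00500093))  # 5
print(instruction_type(0x002081B3))  # add  x3, x1, x2  -> R
print(instruction_type(0x0020A023))  # sw   x2, 0(x1)   -> S
print(instruction_type(0x123452B7))  # lui  x5, 0x12345 -> U
```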

60.

61.

62.

Week 12

• CPU pipelining

• Pipelining hazards
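A back-of-the-envelope sketch of why pipelining helps and what hazards cost, using the five stages named earlier (IF, ID, EXE, MA, WB). It assumes an idealized model where every stage takes one cycle; the function names and the `stall_cycles` parameter are made up for this example.

```python
STAGES = ["IF", "ID", "EXE", "MA", "WB"]


def unpipelined_cycles(instructions: int, stages: int = len(STAGES)) -> int:
    """Each instruction goes through every stage before the next one starts."""
    return instructions * stages


def pipelined_cycles(instructions: int, stages: int = len(STAGES), stall_cycles: int = 0) -> int:
    """The first instruction fills the pipeline, then one finishes per cycle.

    `stall_cycles` is the total number of bubbles inserted because of hazards.
    """
    if instructions == 0:
        return 0
    return stages + (instructions - 1) + stall_cycles


n = 100
print(unpipelined_cycles(n))                 # 500
print(pipelined_cycles(n))                   # 104
print(pipelined_cycles(n, stall_cycles=20))  # 124
```

With many instructions the speedup approaches the number of stages, and every stall caused by a hazard eats directly into it.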

63.

64.

65.

66.

67.

68.

69.

70.

71.

72.

73.

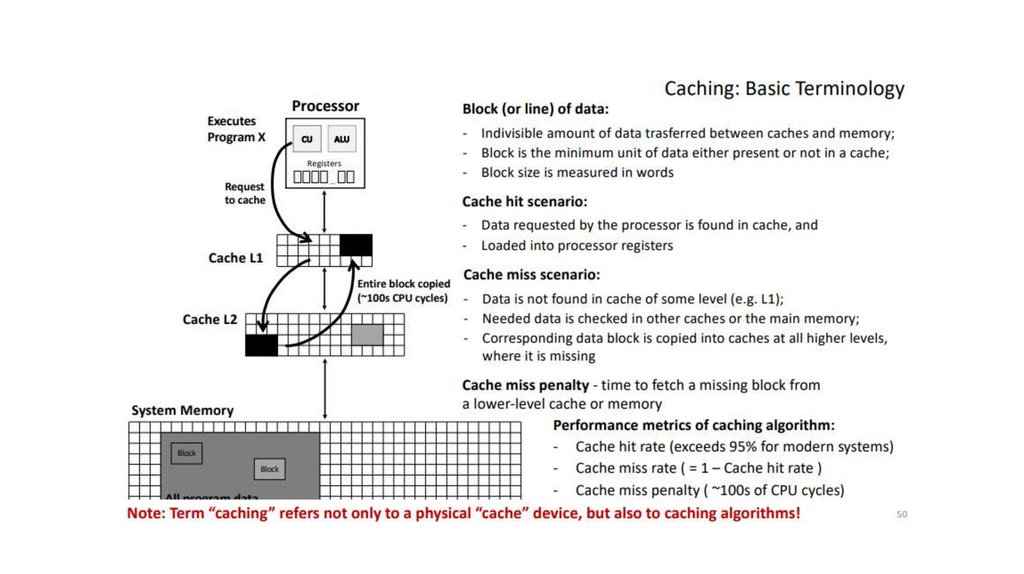

Week 13

• Caching
