
Linguistically-Informed Self-Attention for Semantic Role Labeling

1.

LISA: Linguistically-Informed Self-Attention for Semantic Role Labeling

Emma Strubell¹, Patrick Verga¹, Daniel Andor², David Weiss², Andrew McCallum¹

2.

Want fast, accurate, robust NLP

    ( (S (NP-SBJ (NP (NNP Pierre) (NNP Vinken))
                 (, ,)
                 (ADJP (NML (CD 61) (NNS years))
                       (JJ old))
                 (, ,))
         (VP (MD will)
             (VP (VB join)
                 (NP (DT the) (NN board))
                 (PP-CLR (IN as)
                         (NP (DT a) (JJ nonexecutive) (NN director)))
                 (NP-TMP (NNP Nov.) (CD 29))))
         (. .)))

3.

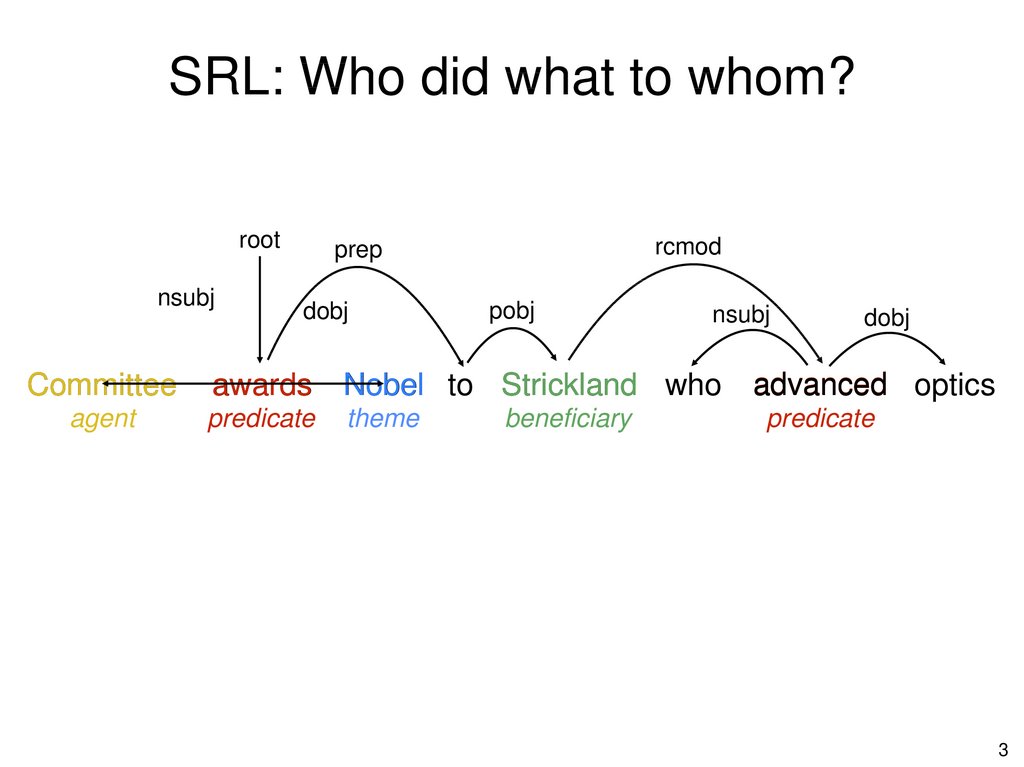

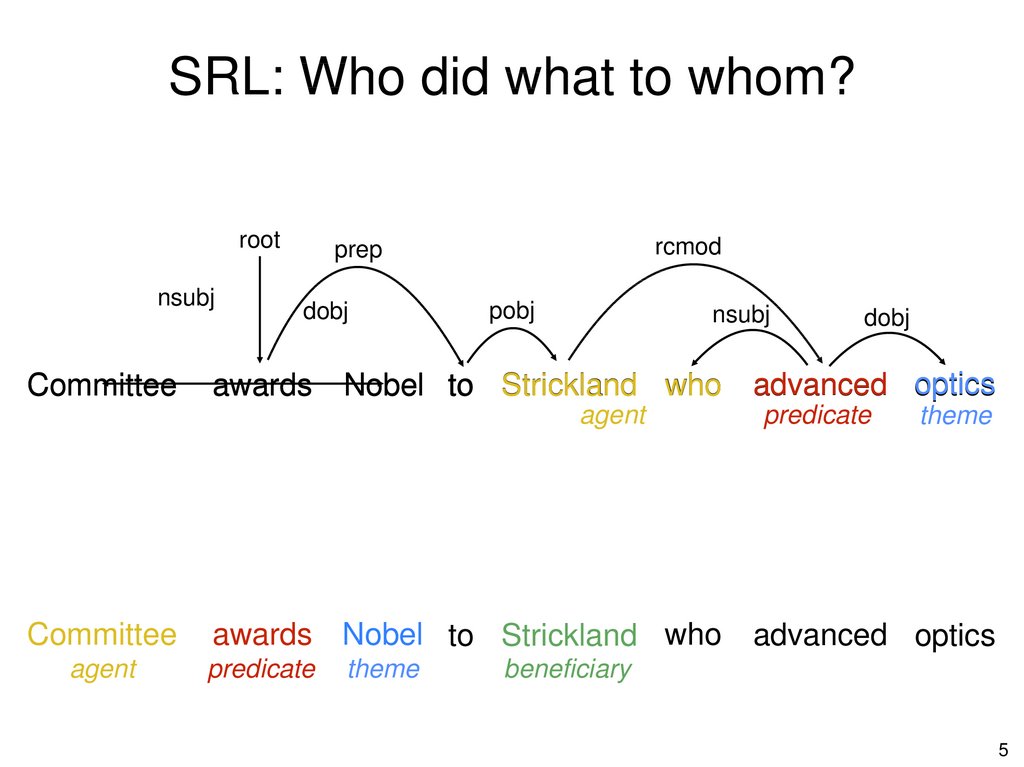

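SRL: Who did what to whom?

[Figure: dependency parse (arcs root, nsubj, dobj, prep, pobj, rcmod, nsubj, dobj) of "Committee awards Nobel to Strickland who advanced optics", with the semantic roles of the predicate "awards": Committee = agent, Nobel = theme, Strickland = beneficiary; "advanced" is marked as a second predicate.]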

4.

SRL: Who did what to whom?

[Figure: the same dependency parse of "Committee awards Nobel to Strickland who advanced optics", now also showing the roles of the second predicate "advanced": who = agent, optics = theme.]

5.

SRL: Who did what to whom?

[Figure: the roles drawn as one frame per predicate. awards: Committee = agent, Nobel = theme, Strickland = beneficiary. advanced: who = agent, optics = theme.]

6.

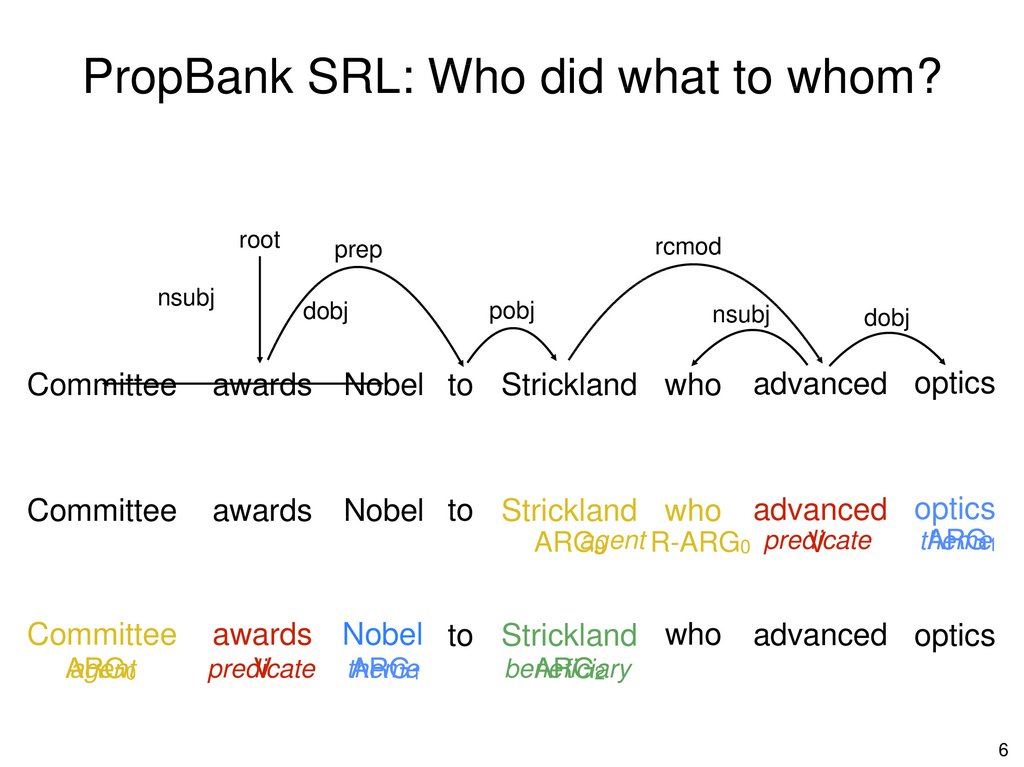

PropBank SRL: Who did what to whom?

[Figure: the same two frames with PropBank labels. awards: Committee = ARG0 (agent), awards = V, Nobel = ARG1 (theme), Strickland = ARG2 (beneficiary). advanced: who = R-ARG0 (a reference to the agent), advanced = V, optics = ARG1 (theme).]

7.

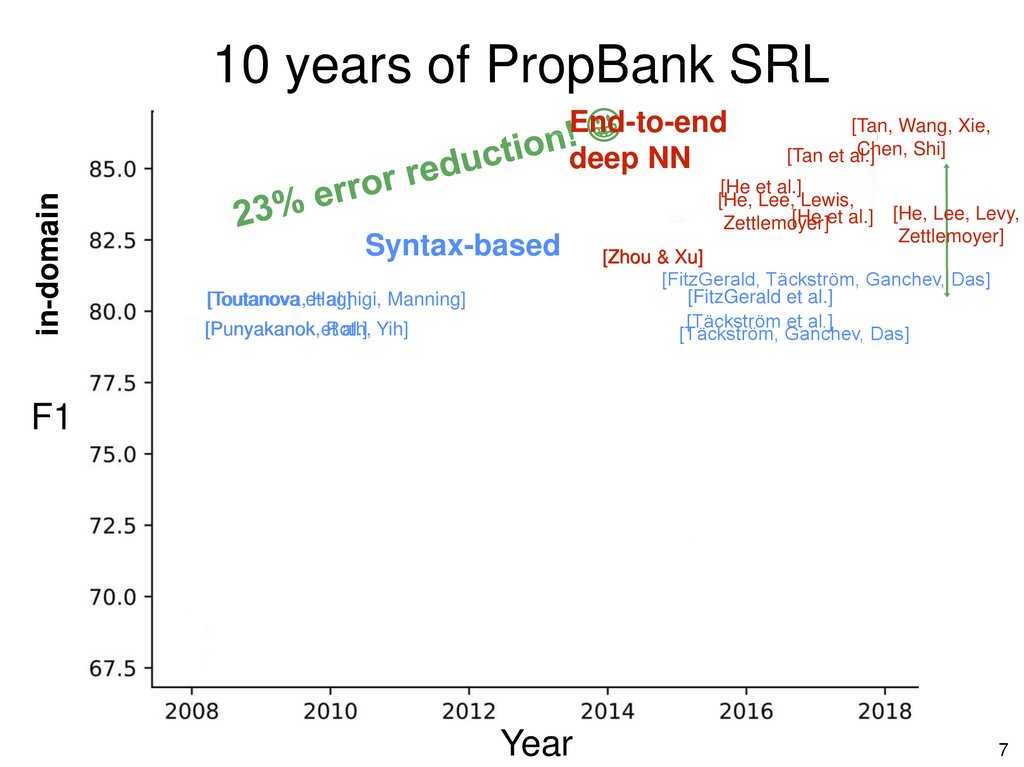

10 years of PropBank SRL (in-domain)

[Figure: in-domain F1 by year, comparing syntax-based systems with end-to-end deep neural networks. Systems shown: Punyakanok, Roth, Yih; Toutanova, Haghighi, Manning; Täckström, Ganchev, Das; FitzGerald, Täckström, Ganchev, Das; Zhou & Xu; He, Lee, Lewis, Zettlemoyer; He, Lee, Levy, Zettlemoyer; Tan, Wang, Xie, Chen, Shi.]

8.

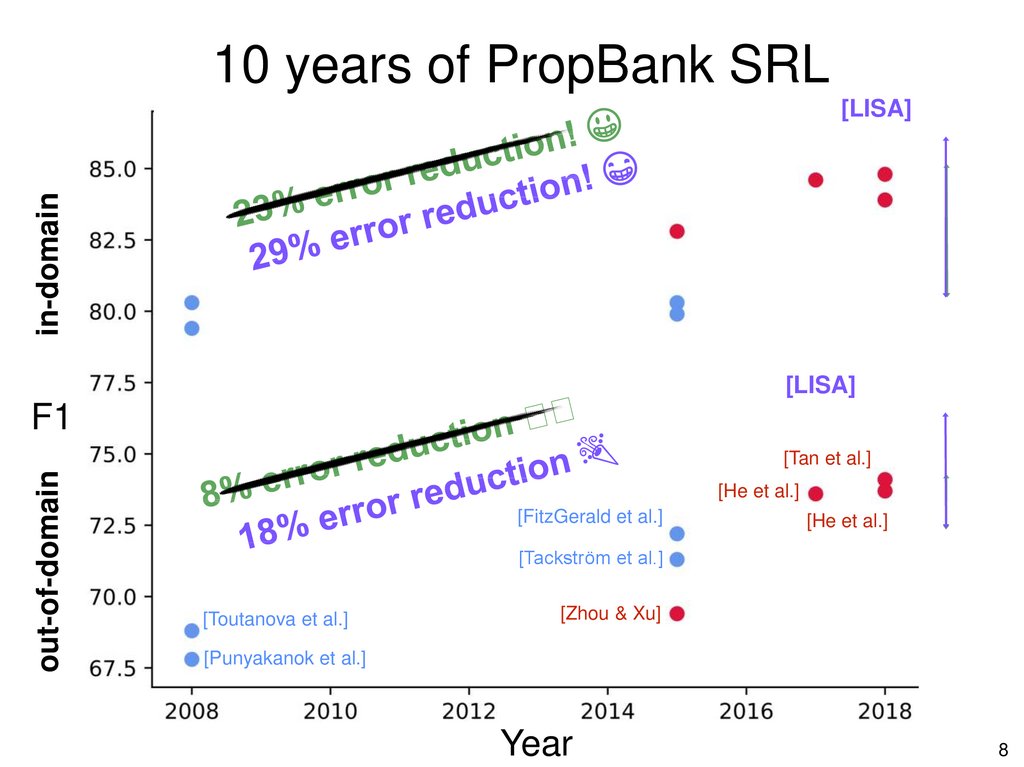

10 years of PropBank SRL (in-domain and out-of-domain)

[Figure: in-domain and out-of-domain F1 by year for Punyakanok et al., Toutanova et al., Täckström et al., Zhou & Xu, FitzGerald et al., He et al. (two systems), Tan et al., and LISA.]

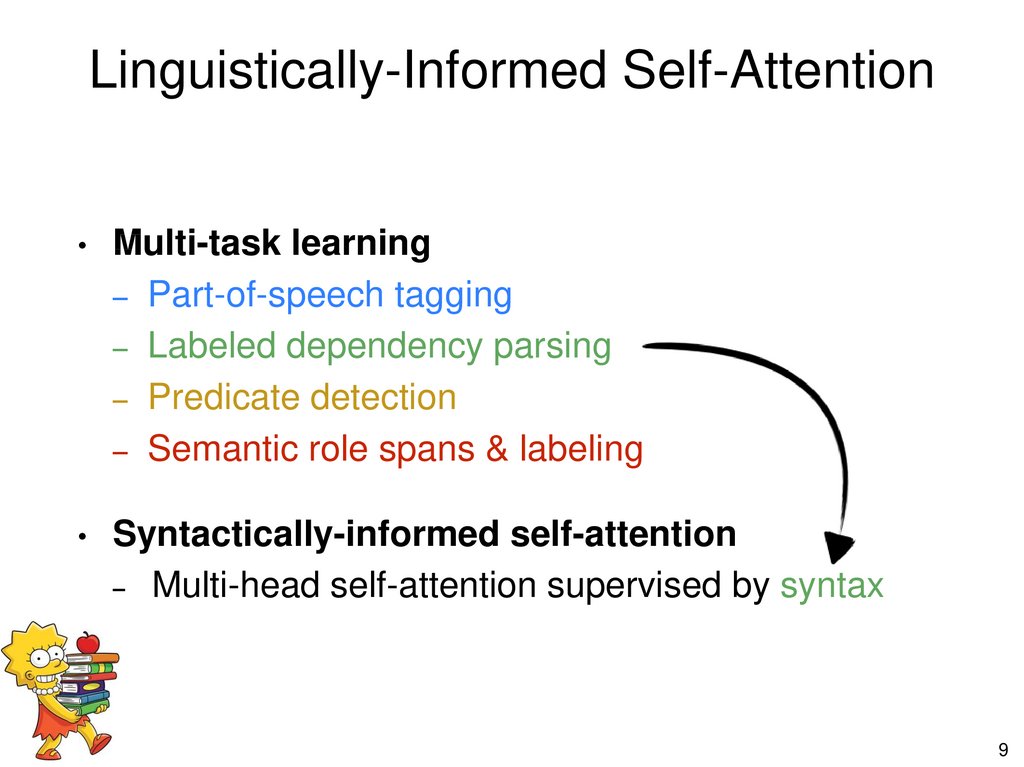

9.

Linguistically-Informed Self-Attention

Multi-task learning, single-pass inference:
– Part-of-speech tagging
– Labeled dependency parsing
– Predicate detection
– Semantic role spans & labeling

Syntactically-informed self-attention:
– Multi-head self-attention supervised by syntax

10.

Outline

– Want fast, accurate, robust NLU
– PropBank SRL: Who did what to whom?
– 10 years of PropBank SRL
– LISA: Linguistically-informed self-attention
  – Multi-head self-attention [Vaswani et al. 2017]
  – Syntactically-informed self-attention
  – Multi-task learning, single-pass inference
– Experimental results & error analysis

11.

Self-attention [Vaswani et al. 2017]

[Figure: at layer p, each token of "Nobel committee awards Strickland who advanced optics" is projected to a query (Q), a key (K) and a value (V).]

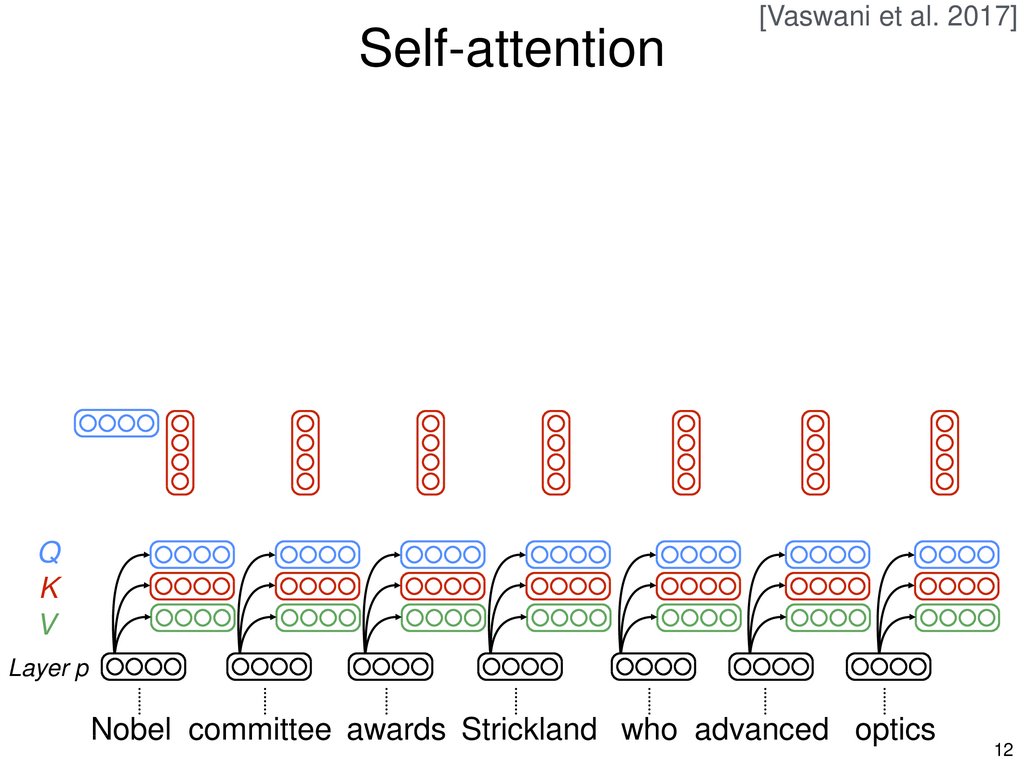

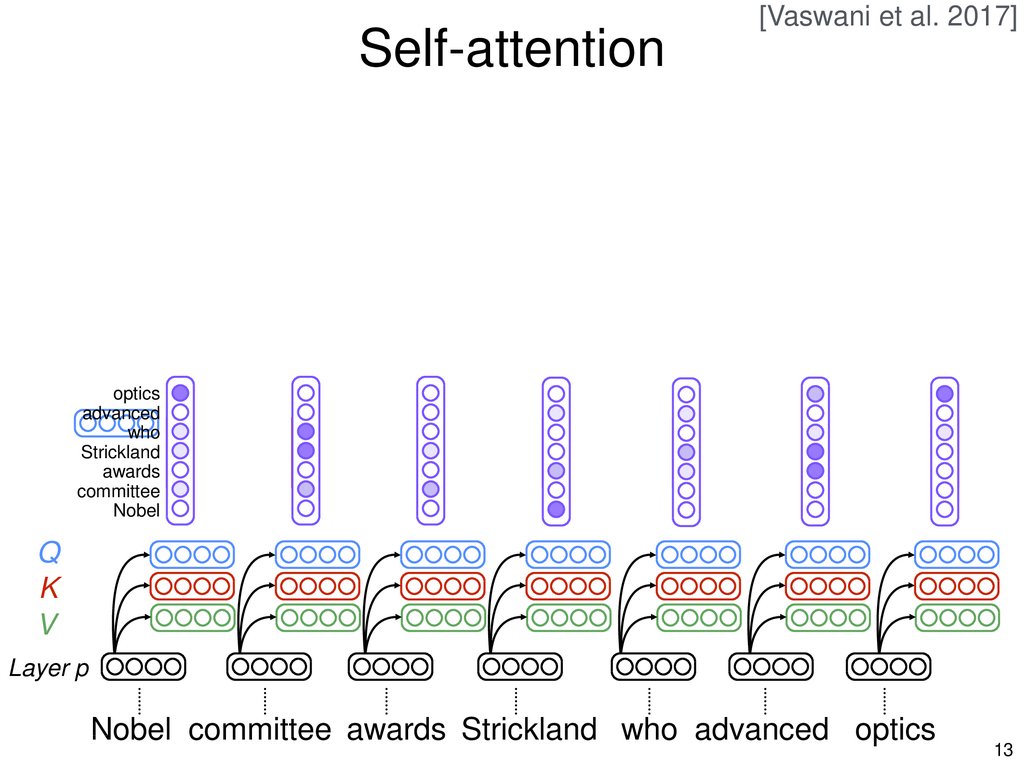

13.

Self-attention [Vaswani et al. 2017]

[Figure: at layer p, one token's query is scored against the keys of every token in "Nobel committee awards Strickland who advanced optics".]

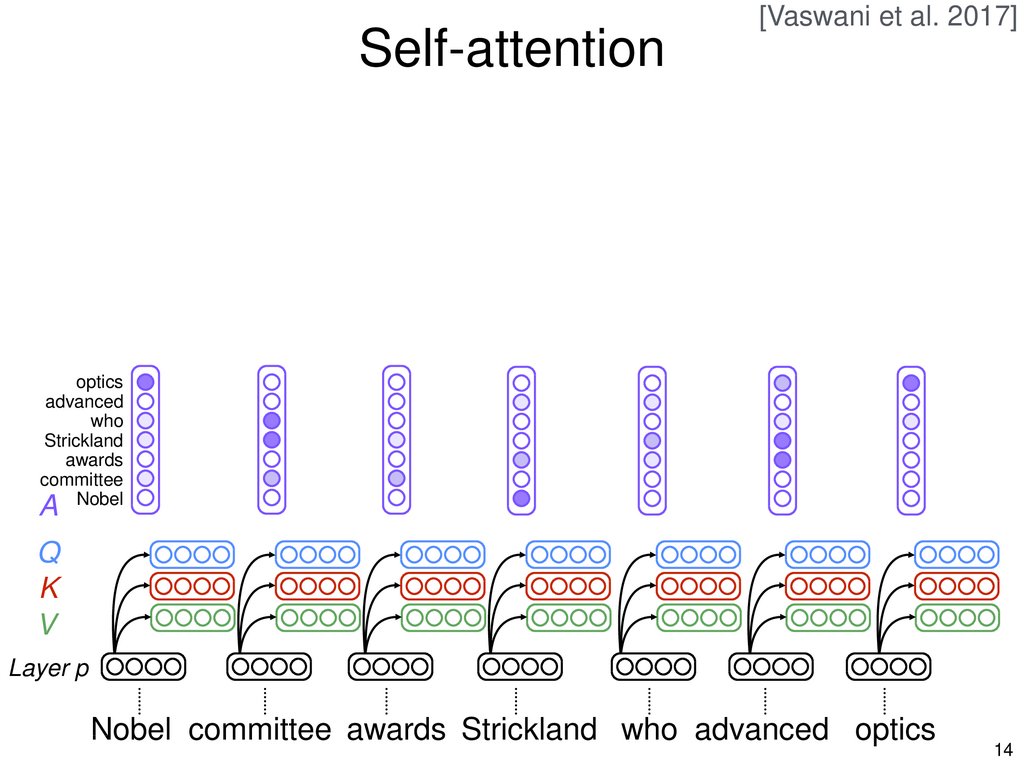

14.

Self-attention [Vaswani et al. 2017]

[Figure: at layer p, the query-key scores become attention weights A over every token of "Nobel committee awards Strickland who advanced optics".]

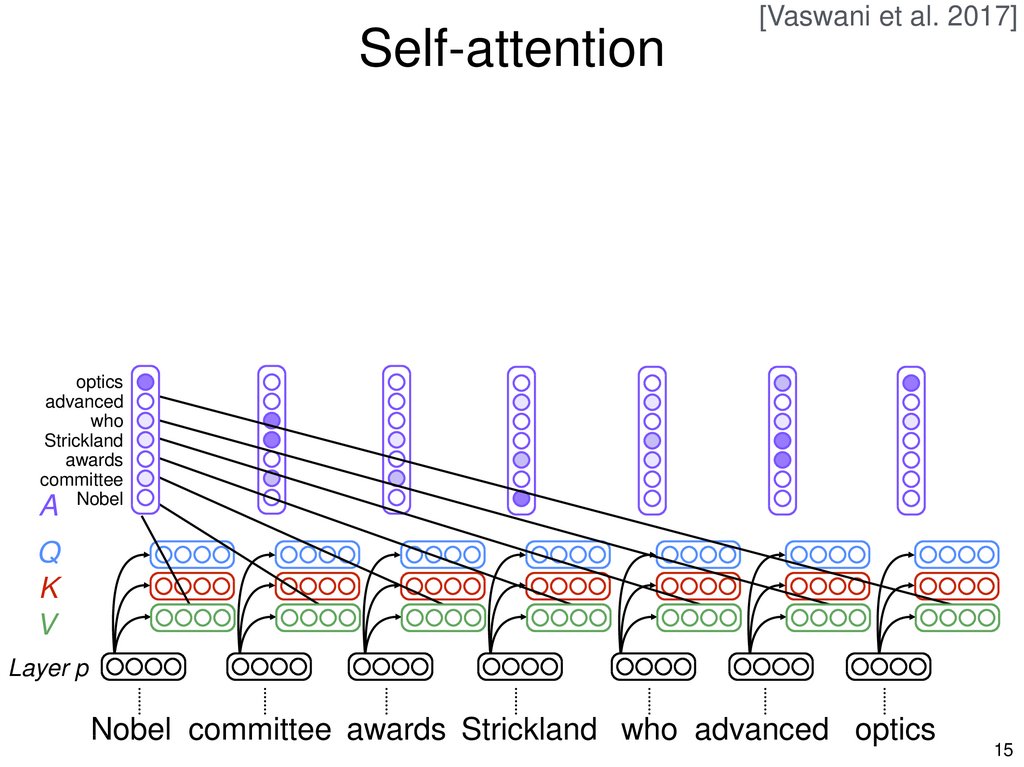

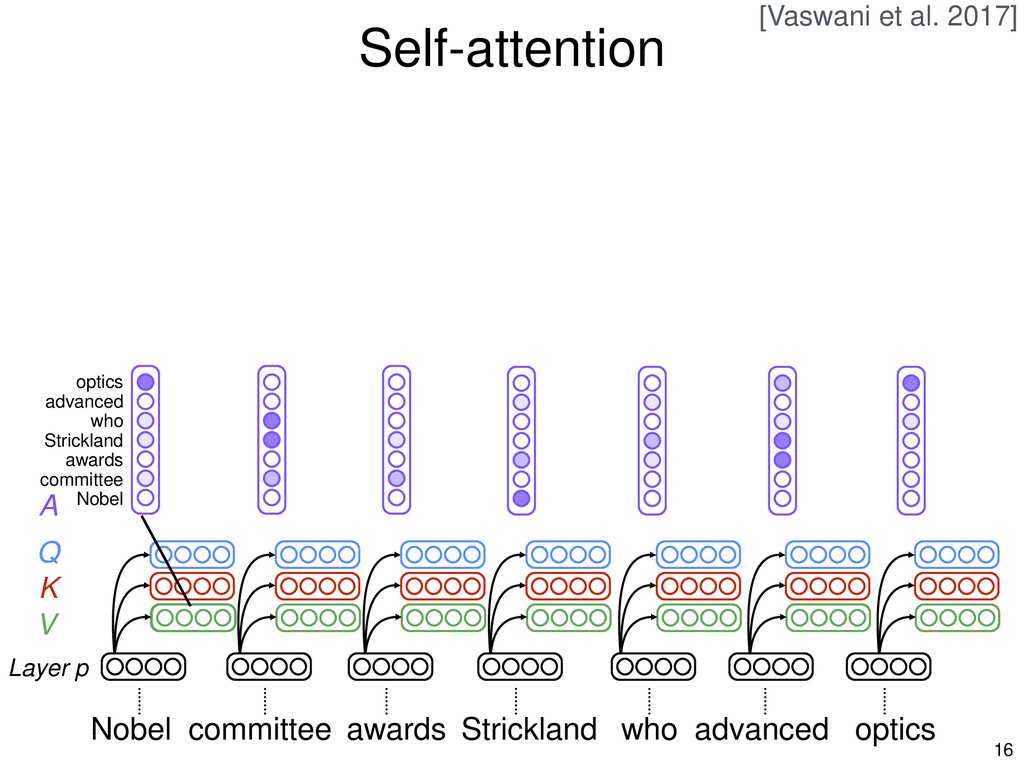

17.

Self-attention [Vaswani et al. 2017]

[Figure: at layer p, the attention weights A combine the values V into the output M for each token of "Nobel committee awards Strickland who advanced optics".]
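The single-head computation built up on the preceding slides can be sketched in a few lines. This is a minimal plain-Python illustration of scaled dot-product attention as defined by Vaswani et al. (2017), A = softmax(QK^T / sqrt(d_k)) and M = AV, with lists of rows standing in for matrices; the helper names and the projection matrices `w_q`, `w_k`, `w_v` are illustrative and not taken from the LISA code.

```python
import math


def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (both lists of rows)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over n tokens.

    x: n x d token representations at layer p.
    Returns (A, M): the n x n attention weights and the n x d_v outputs.
    """
    q = matmul(x, w_q)  # queries Q
    k = matmul(x, w_k)  # keys K
    v = matmul(x, w_v)  # values V
    scale = math.sqrt(len(k[0]))
    scores = matmul(q, transpose(k))  # one row of scores per query token
    a = [softmax([s / scale for s in row]) for row in scores]  # A = softmax(QK^T / sqrt(d_k))
    m = matmul(a, v)  # M = AV: each output row mixes all value vectors
    return a, m


if __name__ == "__main__":
    tokens = "Nobel committee awards Strickland who advanced optics".split()
    d = 4
    # Deterministic toy inputs and weights, for illustration only.
    x = [[math.sin(i + 2 * j) for j in range(d)] for i in range(len(tokens))]
    w = [[math.cos(r - c) for c in range(d)] for r in range(d)]
    a, m = self_attention(x, w, w, w)
    for tok, row in zip(tokens, a):
        print(tok, [round(p, 2) for p in row])
```

Every row of A sums to 1, so each output row of M is a weighted average of the value vectors of all tokens in the sentence.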

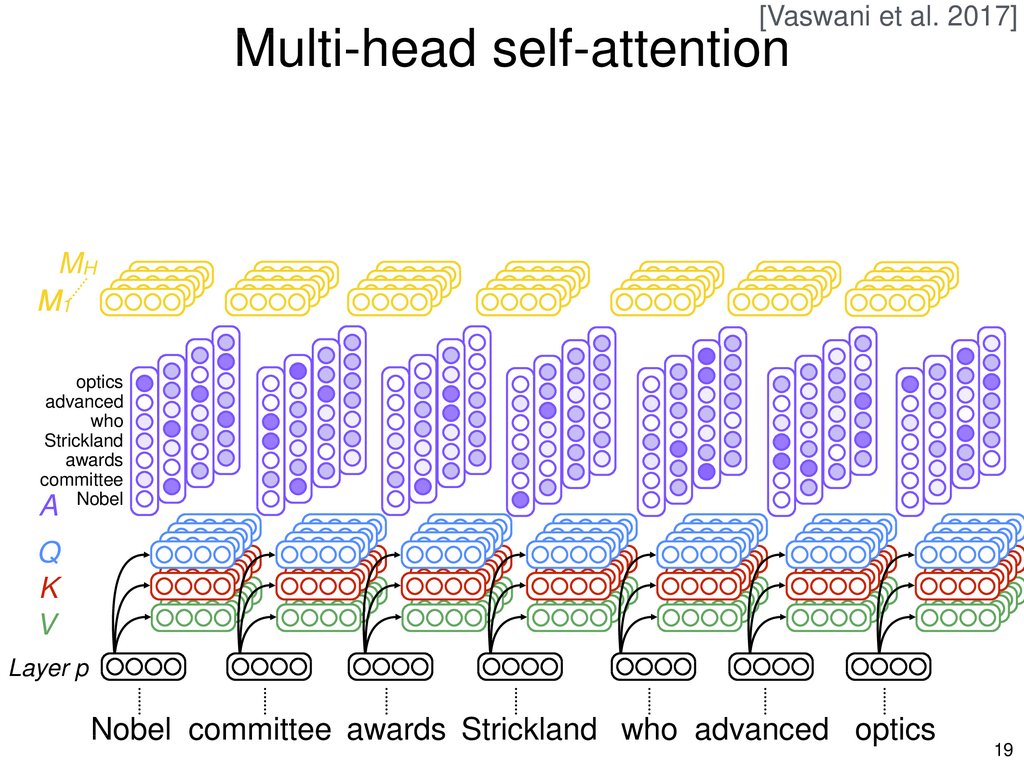

19.

Multi-head self-attention [Vaswani et al. 2017]

[Figure: several attention heads at layer p, each with its own Q, K, V and attention weights A, produce outputs M1 through MH for each token of "Nobel committee awards Strickland who advanced optics".]

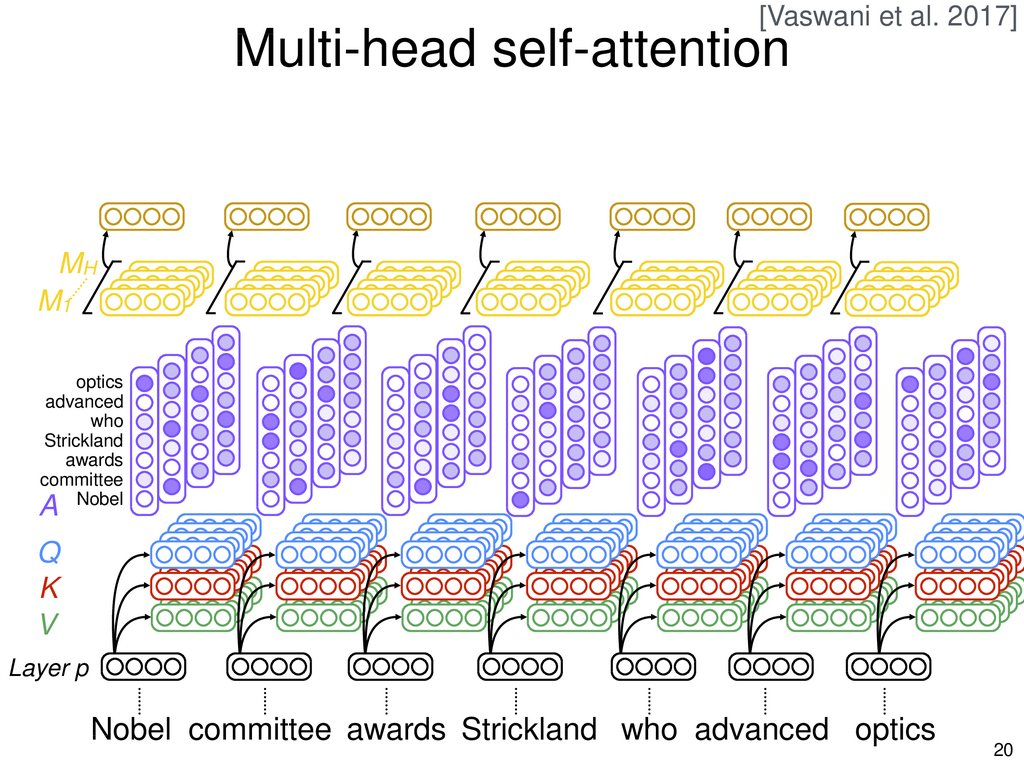

21.

Multi-head self-attention [Vaswani et al. 2017]

[Figure: for each token of "Nobel committee awards Strickland who advanced optics", the head outputs M1 through MH from layer p pass through a feed-forward network to give that token's representation at layer p+1.]
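Multiple heads and the feed-forward step can be added to the single-head computation. The sketch below assumes the standard formulation of Vaswani et al. (2017): each head has its own projections, the per-token head outputs M1 … MH are concatenated and multiplied by an output matrix (`w_o` here), and a two-layer ReLU network is applied at every position. Residual connections and layer normalization are left out for brevity, and all names are illustrative.

```python
import math


def matmul(a, b):
    """Multiply an n x k matrix by a k x m matrix (both lists of rows)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax(row):
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_head(x, w_q, w_k, w_v):
    """One head: M_h = softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    a = [softmax([s / scale for s in row]) for row in matmul(q, transpose(k))]
    return matmul(a, v)


def multi_head(x, heads, w_o):
    """heads: one (w_q, w_k, w_v) triple per head, giving M1 ... MH.

    Each token's head outputs are concatenated, then projected by w_o.
    """
    outputs = [attention_head(x, *h) for h in heads]
    concat = [sum((out[i] for out in outputs), []) for i in range(len(x))]
    return matmul(concat, w_o)


def feed_forward(x, w1, b1, w2, b2):
    """Applied to every token independently: max(0, x W1 + b1) W2 + b2."""
    hidden = [[max(0.0, h + b) for h, b in zip(row, b1)] for row in matmul(x, w1)]
    return [[o + b for o, b in zip(row, b2)] for row in matmul(hidden, w2)]


def layer(x, heads, w_o, w1, b1, w2, b2):
    """One layer: multi-head self-attention, then feed forward (layer p to p+1)."""
    return feed_forward(multi_head(x, heads, w_o), w1, b1, w2, b2)
```

Stacking J applications of `layer`, each with its own parameters, gives the layer 1 to layer J encoder.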

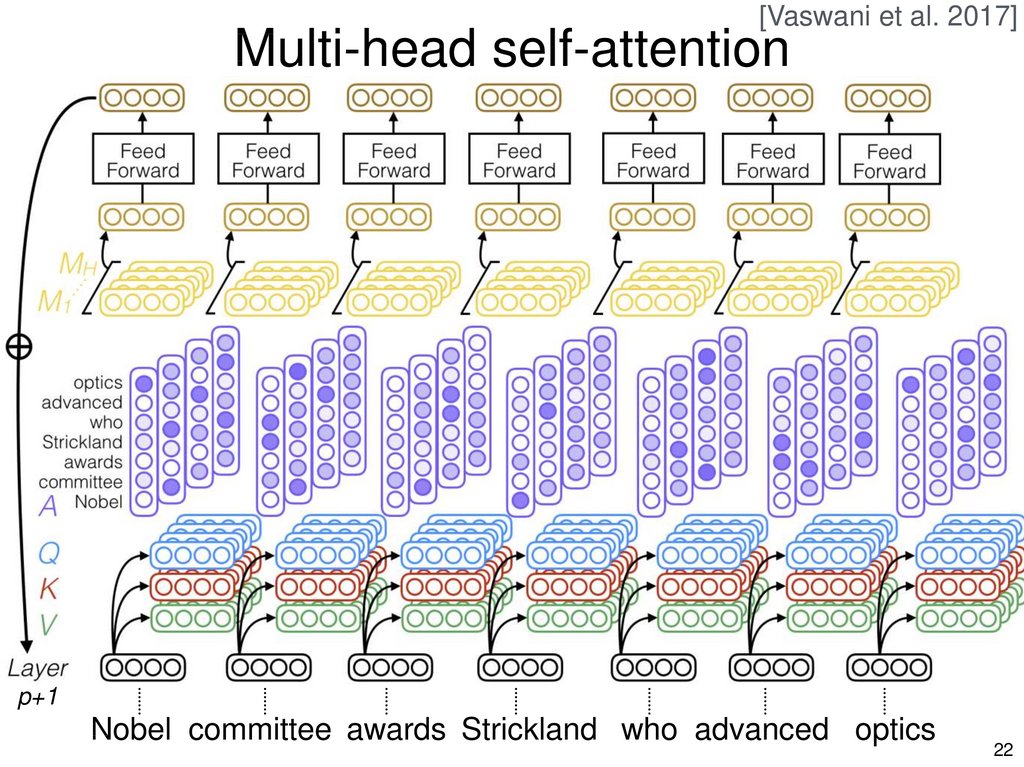

22.

Multi-head self-attention [Vaswani et al. 2017]

[Figure: the token representations at layer p+1 for "Nobel committee awards Strickland who advanced optics".]

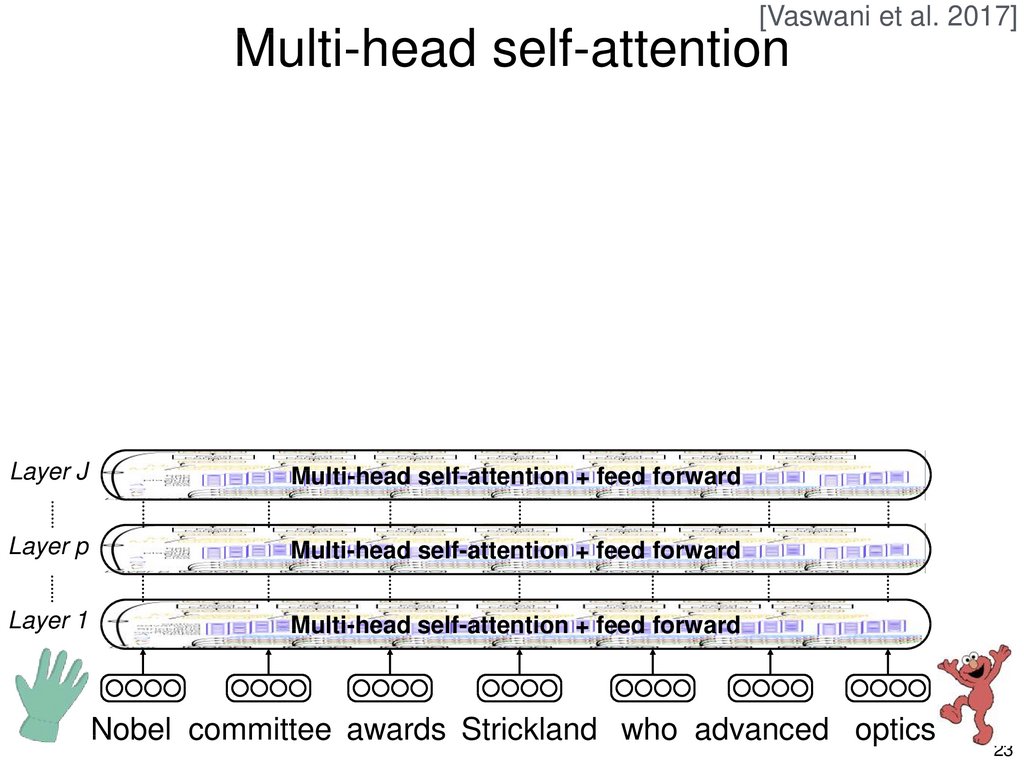

23.

Multi-head self-attention [Vaswani et al. 2017]

[Figure: layer 1 through layer J over "Nobel committee awards Strickland who advanced optics", each layer (including layer p) consisting of multi-head self-attention + feed forward.]

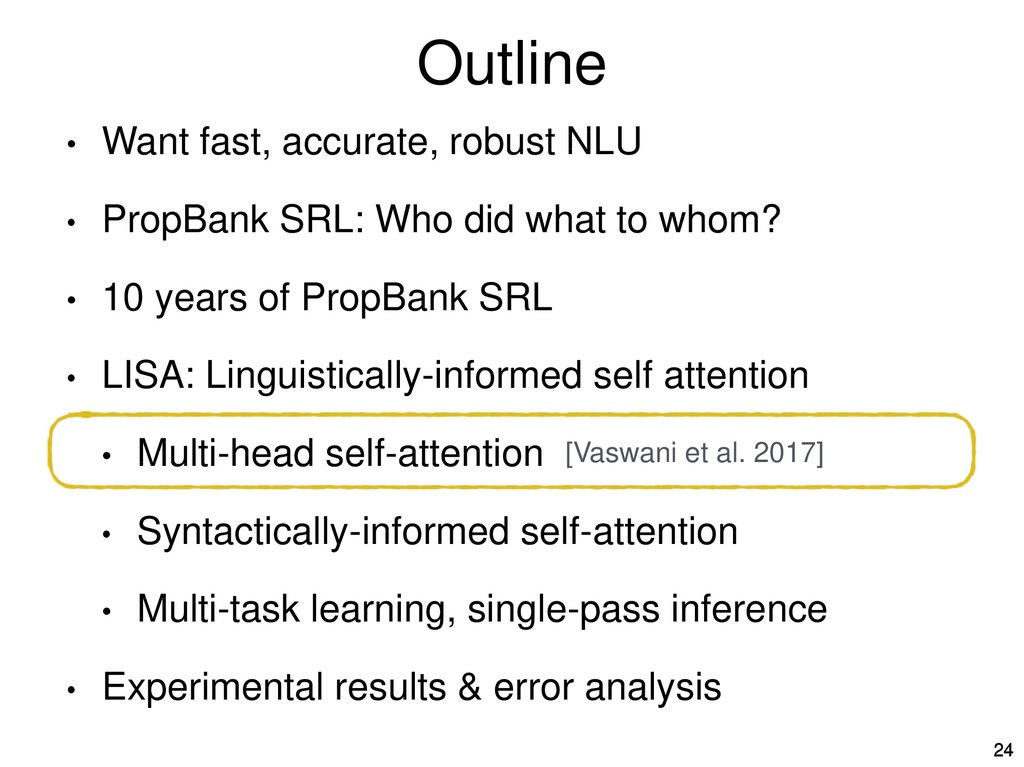

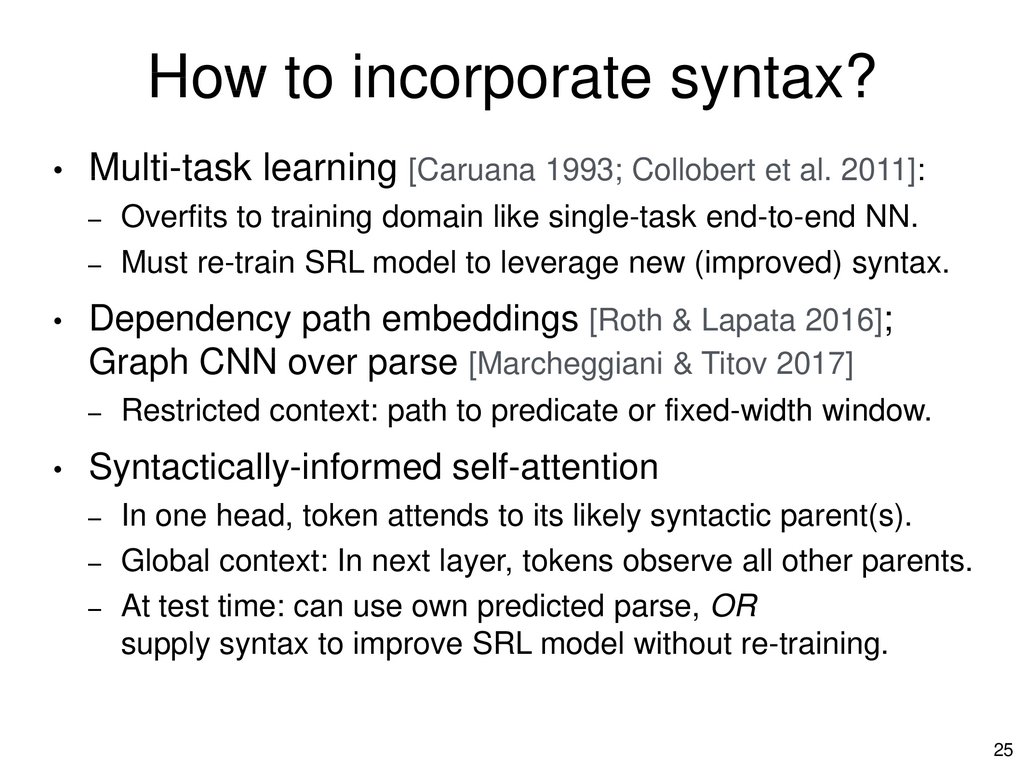

25.

How to incorporate syntax?

Multi-task learning [Caruana 1993; Collobert et al. 2011]:
– Overfits to the training domain, like single-task end-to-end NNs.

Dependency path embeddings [Roth & Lapata 2016]; Graph CNN over parse [Marcheggiani & Titov 2017]:
– Must re-train the SRL model to leverage new (improved) syntax.
– Restricted context: path to the predicate or a fixed-width window.

Syntactically-informed self-attention:
– In one head, each token attends to its likely syntactic parent(s).
– Global context: in the next layer, tokens observe all other parents.
– At test time: can use its own predicted parse, OR supply syntax to improve the SRL model without re-training.
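The syntactically-informed head can be illustrated with a minimal sketch, under stated assumptions: during training the head's attention weights are pushed toward each token's gold parent with a cross-entropy loss, and at test time the weights can be replaced by a one-hot matrix built from any parse (the model's own, or an external one) without re-training. The parent indices in the example are one reading of the dependency arcs drawn on the later LISA slides for "Nobel committee awards Strickland who advanced optics"; letting the root attend to itself is an assumption of this sketch, and all function names are hypothetical.

```python
import math


def parent_attention(heads):
    """One-hot attention: token i puts all of its weight on its parent heads[i].

    heads[i] is the 0-based index of token i's syntactic parent; the root is
    assumed to point to itself so that every row still sums to 1.
    """
    n = len(heads)
    return [[1.0 if j == heads[i] else 0.0 for j in range(n)] for i in range(n)]


def apply_attention(a, v):
    """M = A V for attention weights a (n x n) and values v (n x d)."""
    return [[sum(a[i][t] * v[t][j] for t in range(len(v))) for j in range(len(v[0]))]
            for i in range(len(a))]


def parse_attention_loss(a_pred, heads):
    """Mean negative log-likelihood of the gold parent under the head's weights."""
    return -sum(math.log(a_pred[i][h]) for i, h in enumerate(heads)) / len(heads)


if __name__ == "__main__":
    tokens = "Nobel committee awards Strickland who advanced optics".split()
    # Hypothetical parents read off the slide's arcs ("awards" is the root).
    heads = [1, 2, 2, 2, 5, 3, 5]
    values = [[float(i)] for i in range(len(tokens))]
    m = apply_attention(parent_attention(heads), values)
    for tok, row in zip(tokens, m):
        print(tok, "->", tokens[int(row[0])])
```

Because the supplied matrix is one-hot, each token's head output is exactly its parent's value vector; swapping in a better parse changes only `heads`, not any trained parameters.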

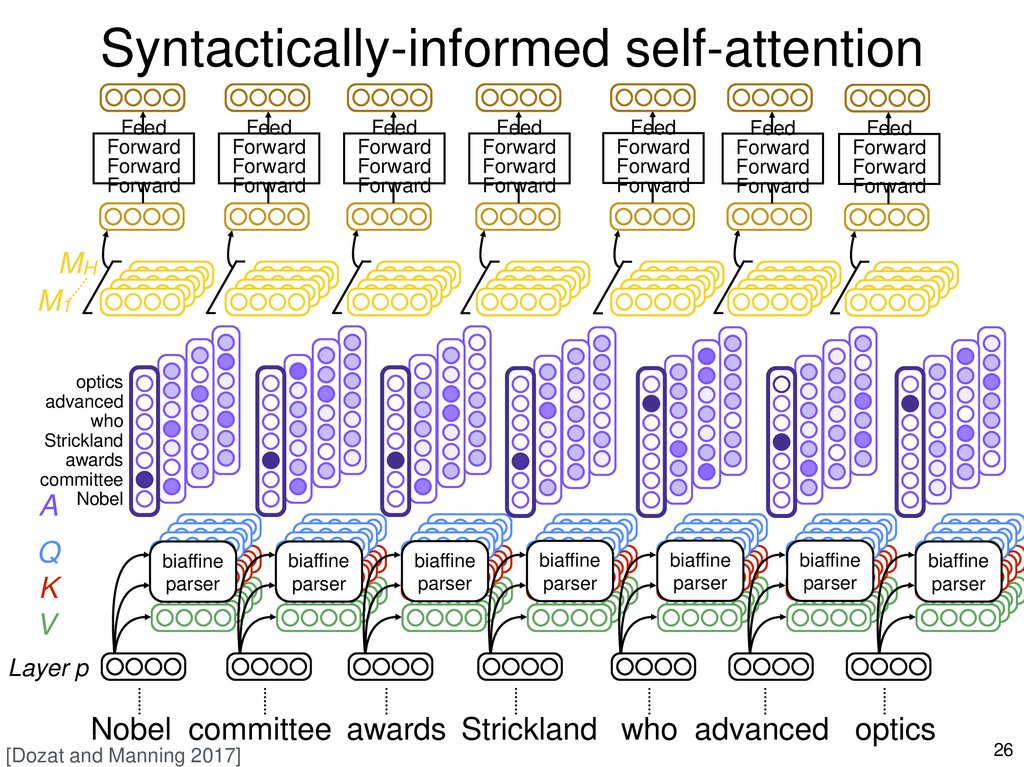

26.

Syntactically-informed self-attention

[Figure: multi-head self-attention at layer p over "Nobel committee awards Strickland who advanced optics", in which the attention weights A of one head are produced by a biaffine parser [Dozat and Manning 2017] for each token; the head outputs M1 to MH feed the position-wise feed-forward layers.]
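The biaffine parser cited on this slide scores every (dependent, candidate parent) pair. The sketch below is a simplified version in the spirit of Dozat and Manning (2017), assuming separate dependent and head representations per token, a bilinear term plus a head-only bias term, a softmax over candidate parents, and greedy decoding without a tree constraint. As the slide depicts, the resulting distribution can serve as the syntactic head's attention weights. Names and shapes are illustrative.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def bilinear(d, u_mat, h):
    """d^T U h for vectors d, h and a square matrix U given as a list of rows."""
    return sum(d[r] * dot(u_mat[r], h) for r in range(len(d)))


def softmax(row):
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def biaffine_arc_probs(dep, head, u_mat, u_vec):
    """P(parent of i = j) from score(i, j) = dep_i^T U head_j + head_j^T u.

    dep[i]: token i as a dependent; head[j]: token j as a candidate parent.
    """
    n = len(dep)
    scores = [[bilinear(dep[i], u_mat, head[j]) + dot(head[j], u_vec) for j in range(n)]
              for i in range(n)]
    return [softmax(row) for row in scores]


def greedy_heads(probs):
    """Each token takes its most probable parent (no well-formed-tree constraint)."""
    return [max(range(len(row)), key=row.__getitem__) for row in probs]
```

A real parser would add learned MLPs that produce `dep` and `head` from the encoder states, and a tree-constrained decoder if well-formed trees are required.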

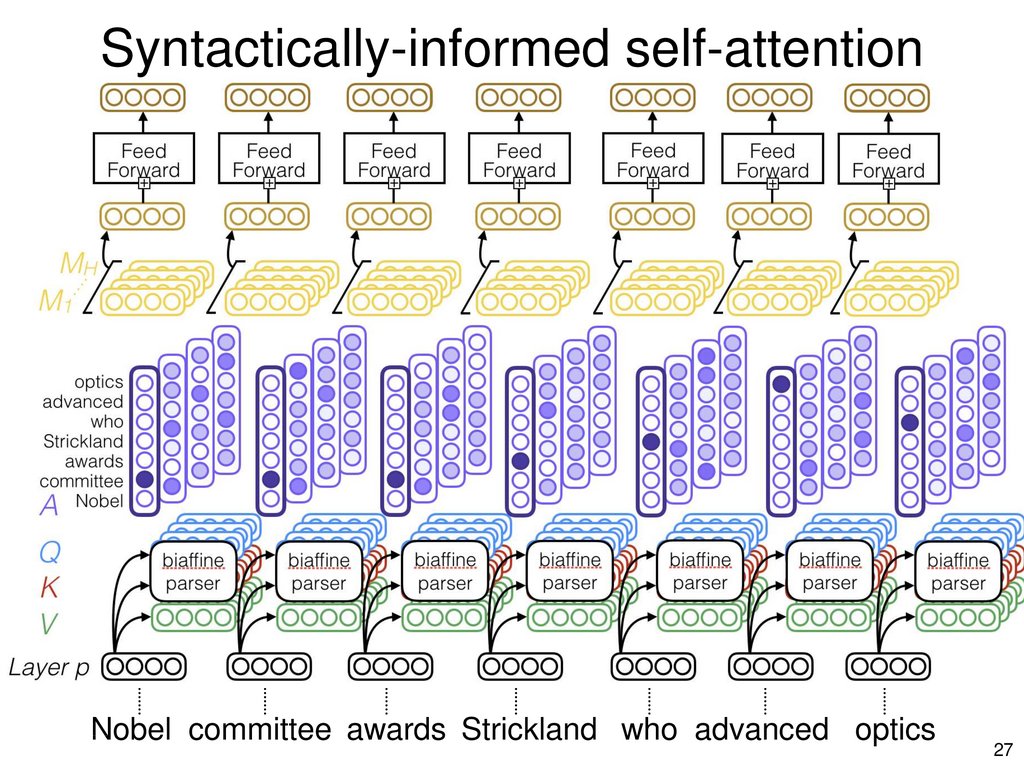

27.

Syntactically-informed self-attention

[Figure: syntactically-informed attention over "Nobel committee awards Strickland who advanced optics".]

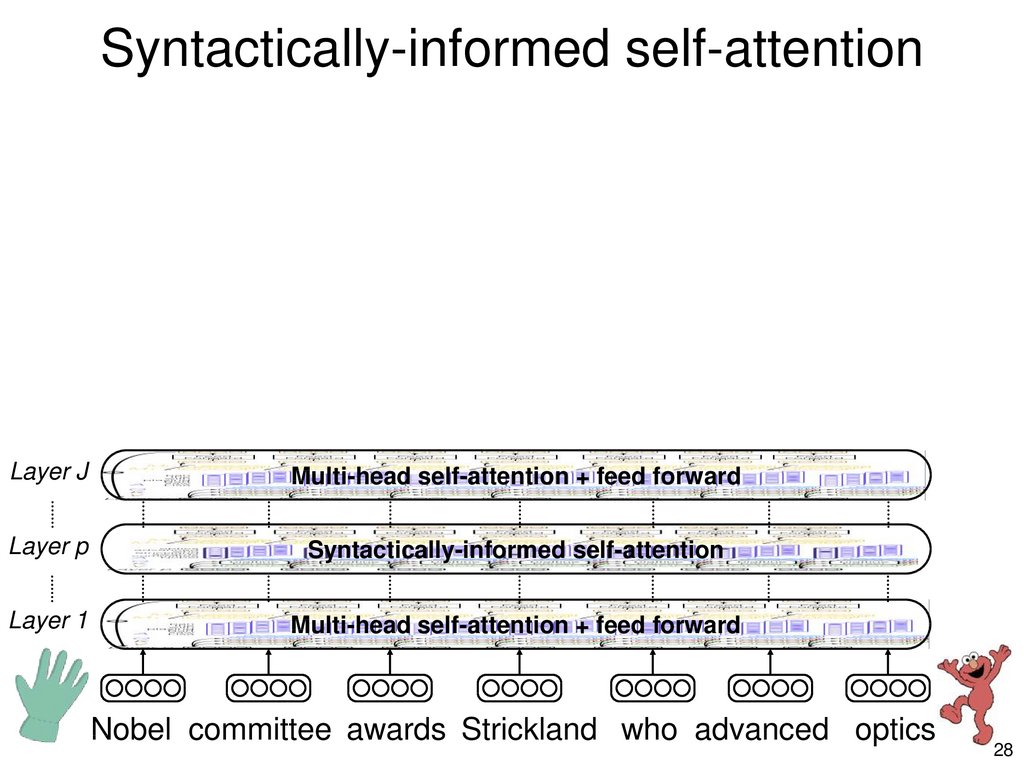

28.

Syntactically-informed self-attention

[Figure: layers 1 through J over "Nobel committee awards Strickland who advanced optics"; every layer is multi-head self-attention + feed forward except layer p, which uses syntactically-informed self-attention.]

30.

LISA: Linguistically-Informed Self-Attention

[Figure: LISA over "Nobel committee awards Strickland who advanced optics". Layers 1 to J are multi-head self-attention + feed forward, with syntactically-informed self-attention at layer p. Joint part-of-speech and predicate tags are predicted at layer r: Nobel NNP, committee NN, awards VBZ/PRED, Strickland NNP, who WP, advanced VBN/PRED, optics NN.]
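Tags such as VBZ/PRED on this slide suggest that part of speech and predicate status are predicted together as one label per token. A minimal sketch, assuming that joint label format (a POS tag with an optional "/PRED" suffix), of splitting such labels into POS tags and the predicate positions that the SRL layer then scores; the function name is hypothetical.

```python
def split_joint_tags(joint_tags, marker="/PRED"):
    """Split joint labels like "VBZ/PRED" into POS tags and predicate positions."""
    pos_tags, predicates = [], []
    for i, tag in enumerate(joint_tags):
        if tag.endswith(marker):
            pos_tags.append(tag[: -len(marker)])
            predicates.append(i)
        else:
            pos_tags.append(tag)
    return pos_tags, predicates


if __name__ == "__main__":
    tokens = "Nobel committee awards Strickland who advanced optics".split()
    joint = ["NNP", "NN", "VBZ/PRED", "NNP", "WP", "VBN/PRED", "NN"]
    pos, preds = split_joint_tags(joint)
    print(pos)
    print([tokens[i] for i in preds])
```

Predicting one joint label keeps the two decisions consistent with each other and costs a single classifier over layer r.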

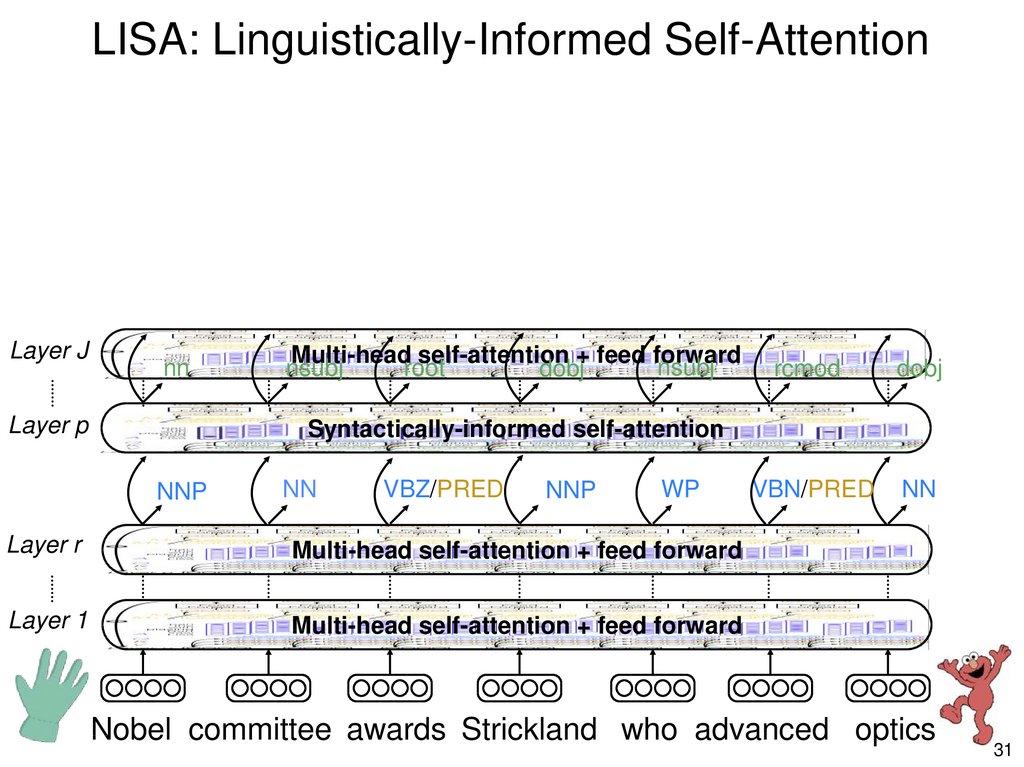

31.

LISA: Linguistically-Informed Self-Attention

[Figure: the LISA stack (layers 1 to J, joint POS/predicate tags at layer r, syntactically-informed self-attention at layer p) with the labeled dependency parse attended to at layer p, written as relation(head, dependent): nn(committee, Nobel), nsubj(awards, committee), root(awards), dobj(awards, Strickland), nsubj(advanced, who), rcmod(Strickland, advanced), dobj(advanced, optics).]

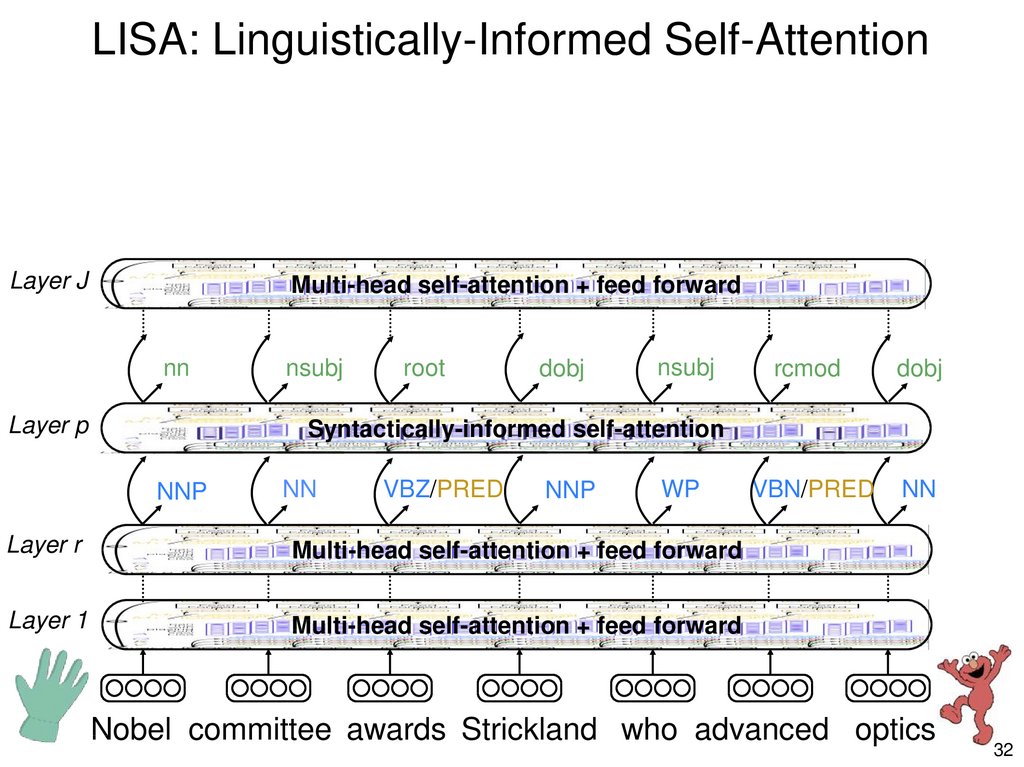

33.

LISA: Linguistically-Informed Self-Attention

[Figure: the LISA stack (multi-head self-attention + feed forward layers, joint POS/predicate tags at layer r, syntactically-informed self-attention at layer p) topped by a bilinear layer above layer J that scores predicate representations against argument representations for "Nobel committee awards Strickland who advanced optics".]
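The bilinear layer on this slide scores each predicate against each candidate argument token. A minimal sketch, assuming one d x d matrix per BIO label so that the logit of label l for predicate p and token t is p^T U_l t. Greedy per-token argmax decoding is a simplification here; a decoder constrained to valid BIO sequences would be a natural refinement. The vectors, matrices and label set are illustrative.

```python
def bilinear(p, u_mat, a):
    """p^T U a for vectors p, a and a matrix U given as a list of rows."""
    return sum(p[r] * sum(u * x for u, x in zip(u_mat[r], a)) for r in range(len(p)))


def srl_tags(pred_vecs, arg_vecs, u_per_label, labels):
    """Tag every token with a BIO label, separately for every predicate.

    pred_vecs:   {predicate token index: predicate representation}
    arg_vecs:    one argument representation per token
    u_per_label: u_per_label[l] is the d x d matrix scoring labels[l]
    """
    tags = {}
    for p_idx, p in pred_vecs.items():
        row = []
        for a in arg_vecs:
            logits = [bilinear(p, u_l, a) for u_l in u_per_label]
            best = max(range(len(labels)), key=logits.__getitem__)  # greedy; ties go to the first label
            row.append(labels[best])
        tags[p_idx] = row
    return tags
```

Because the predicate vector enters every score, the same token can receive different tags for different predicates, which is what per-predicate SRL requires.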

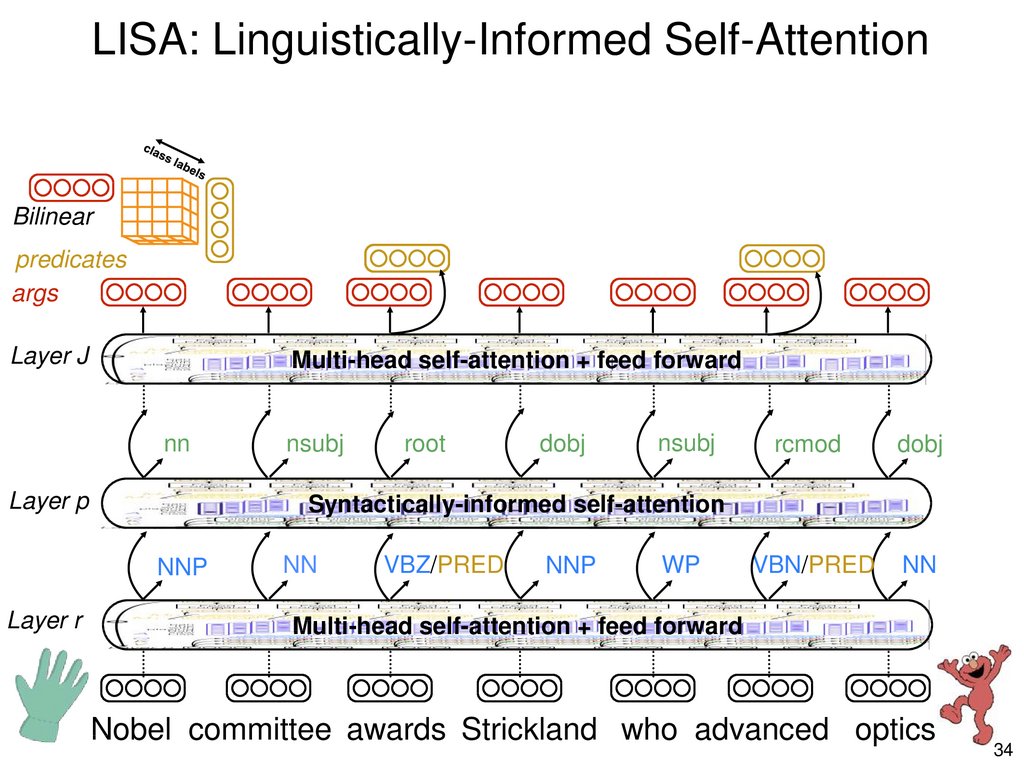

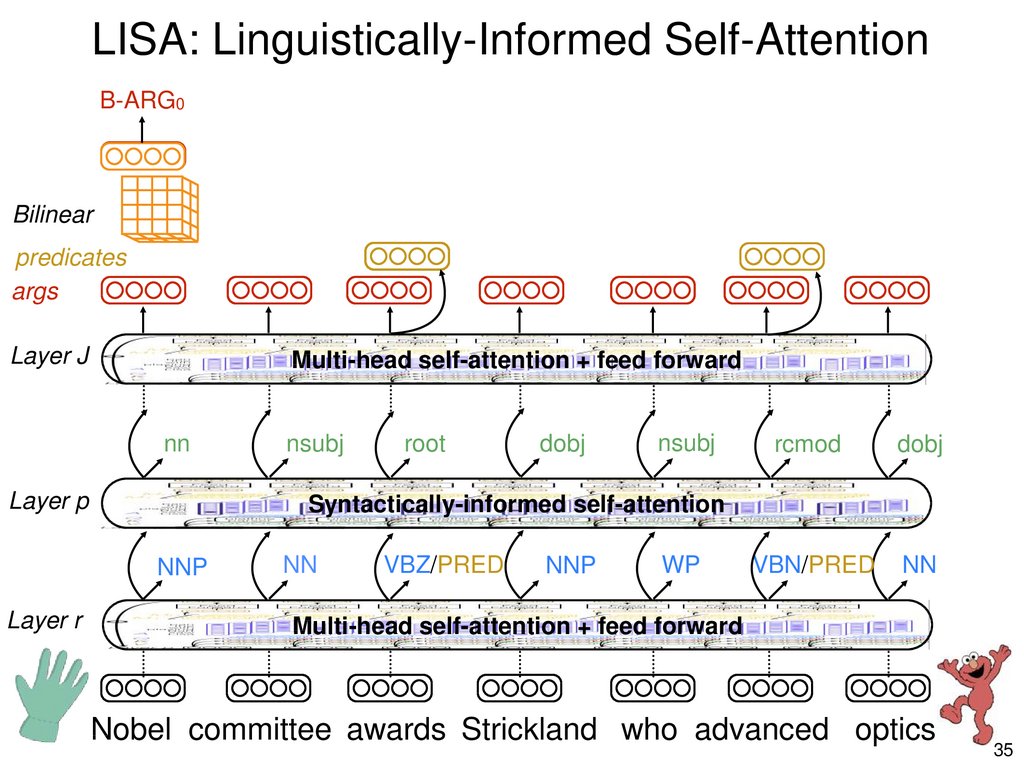

35.

LISA: Linguistically-Informed Self-Attention

[Figure: the LISA stack with the bilinear predicate-argument scorer above layer J beginning to emit SRL tags for the predicate "awards", starting with B-ARG0 for "Nobel".]

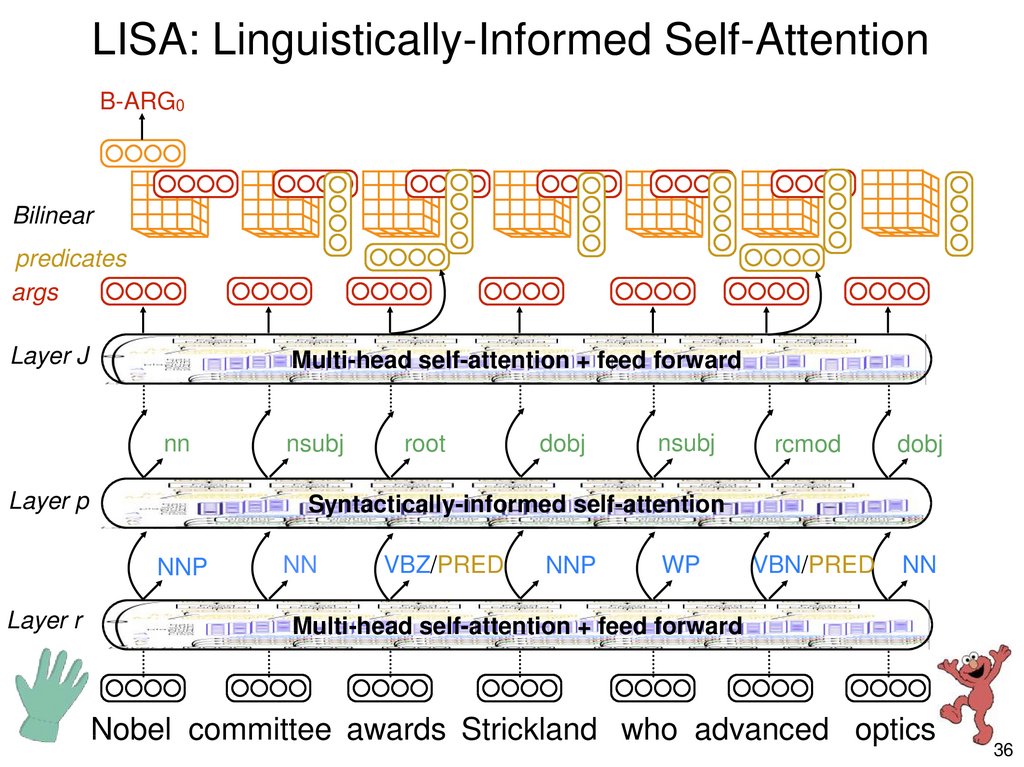

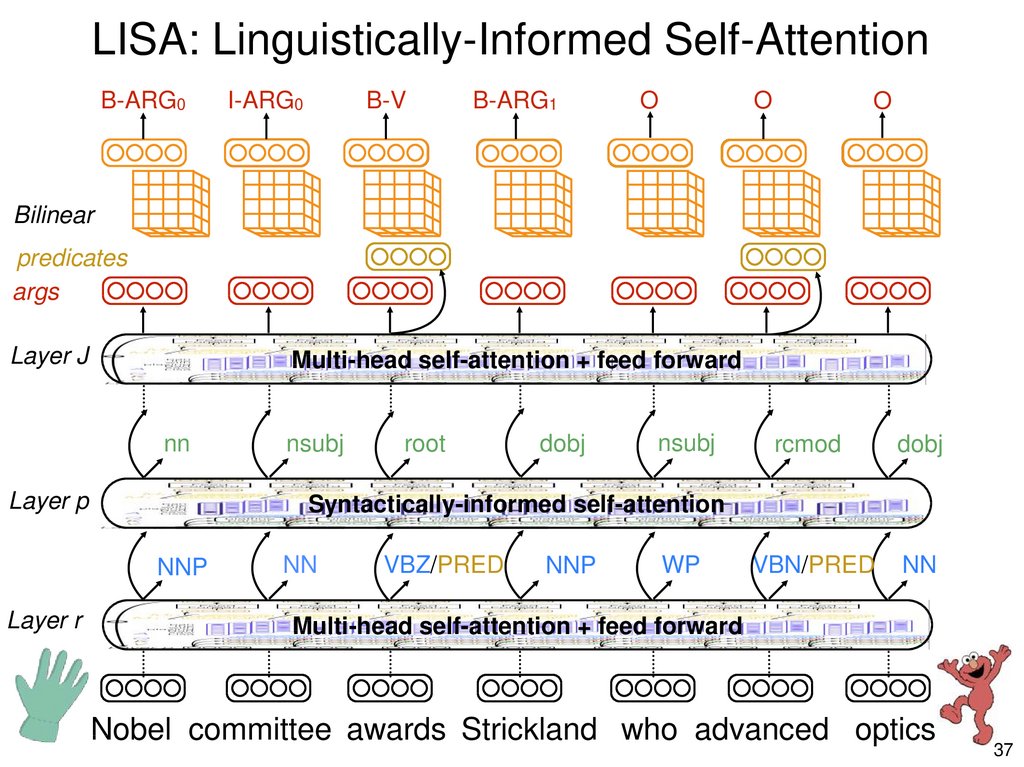

37.

LISA: Linguistically-Informed Self-Attention

[Figure: the LISA stack with the complete SRL tag sequence for the predicate "awards": Nobel B-ARG0, committee I-ARG0, awards B-V, Strickland B-ARG1, who O, advanced O, optics O.]

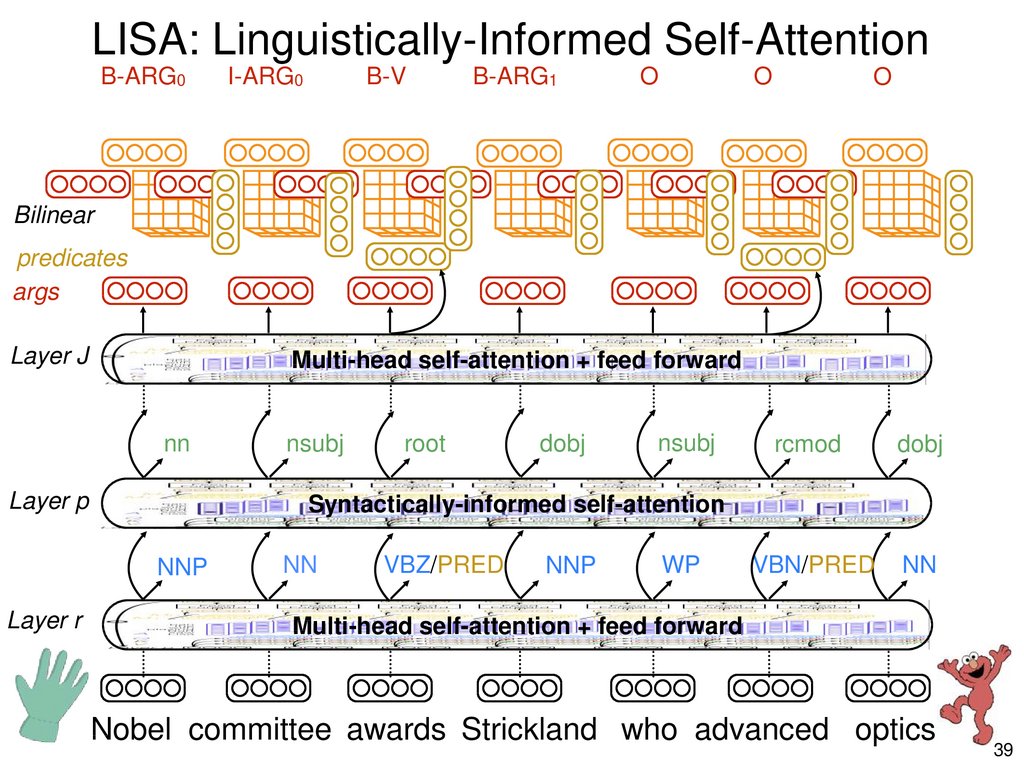

41.

LISA: Linguistically-Informed Self-Attention

[Figure: the LISA stack with SRL tags for both predicates of "Nobel committee awards Strickland who advanced optics":]

| Token | awards | advanced |
|---|---|---|
| Nobel | B-ARG0 | O |
| committee | I-ARG0 | O |
| awards | B-V | O |
| Strickland | B-ARG1 | B-ARG0 |
| who | O | B-R-ARG0 |
| advanced | O | B-V |
| optics | O | B-ARG1 |
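Each predicate receives its own BIO tag sequence over the whole sentence. A minimal sketch of converting between labeled argument spans and such a sequence, checked against the tag sequences for "awards" and "advanced" as read from this slide's figure. The span convention (inclusive, 0-based token indices) and the rule that a stray I- tag opens a new span are assumptions of this sketch, not necessarily what LISA does.

```python
def spans_to_bio(n_tokens, spans):
    """spans: (start, end, label) triples with inclusive, 0-based token indices."""
    tags = ["O"] * n_tokens
    for start, end, label in spans:
        tags[start] = "B-" + label
        for i in range(start + 1, end + 1):
            tags[i] = "I-" + label
    return tags


def bio_to_spans(tags):
    """Inverse of spans_to_bio; a stray I- tag (no matching open span) starts a new span."""
    spans = []
    start, label = None, None
    for i, tag in enumerate(tags + ["O"]):  # the sentinel "O" closes a trailing span
        continues = tag.startswith("I-") and tag[2:] == label
        if start is not None and not continues:
            spans.append((start, i - 1, label))
            start, label = None, None
        if tag.startswith("B-") or (tag.startswith("I-") and not continues):
            start, label = i, tag[2:]
    return spans


if __name__ == "__main__":
    tokens = "Nobel committee awards Strickland who advanced optics".split()
    awards = ["B-ARG0", "I-ARG0", "B-V", "B-ARG1", "O", "O", "O"]
    for start, end, label in bio_to_spans(awards):
        print(label, " ".join(tokens[start:end + 1]))
```

Decoding spans this way is also the first step toward the span-level evaluation used in the results that follow.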

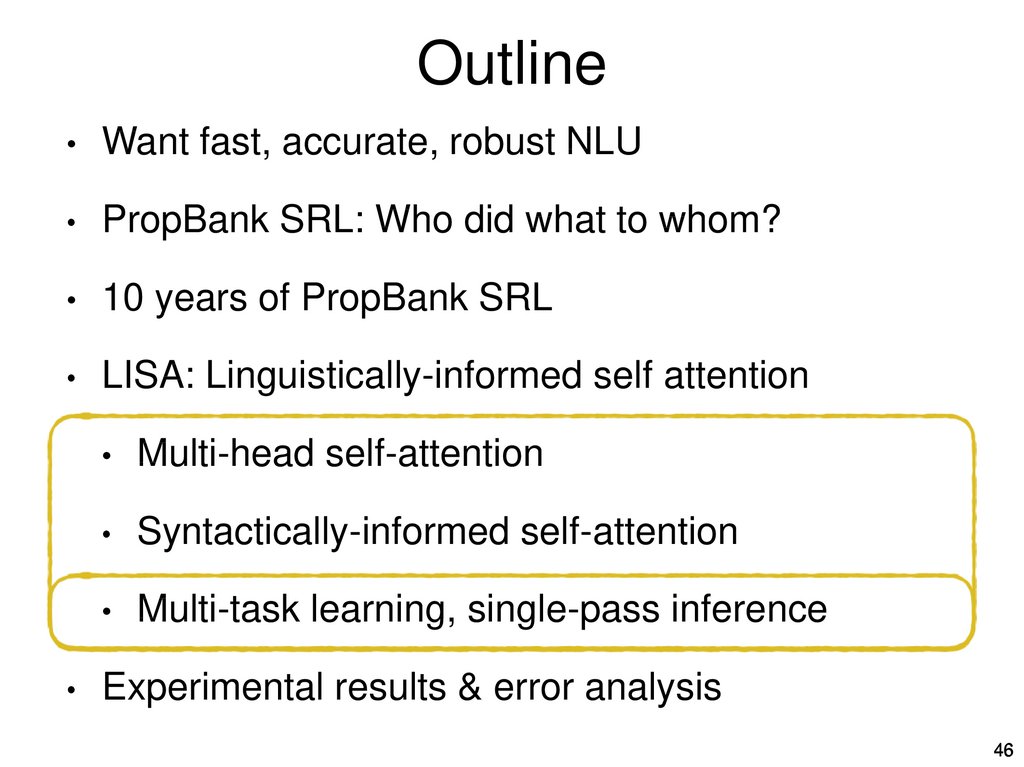

43.

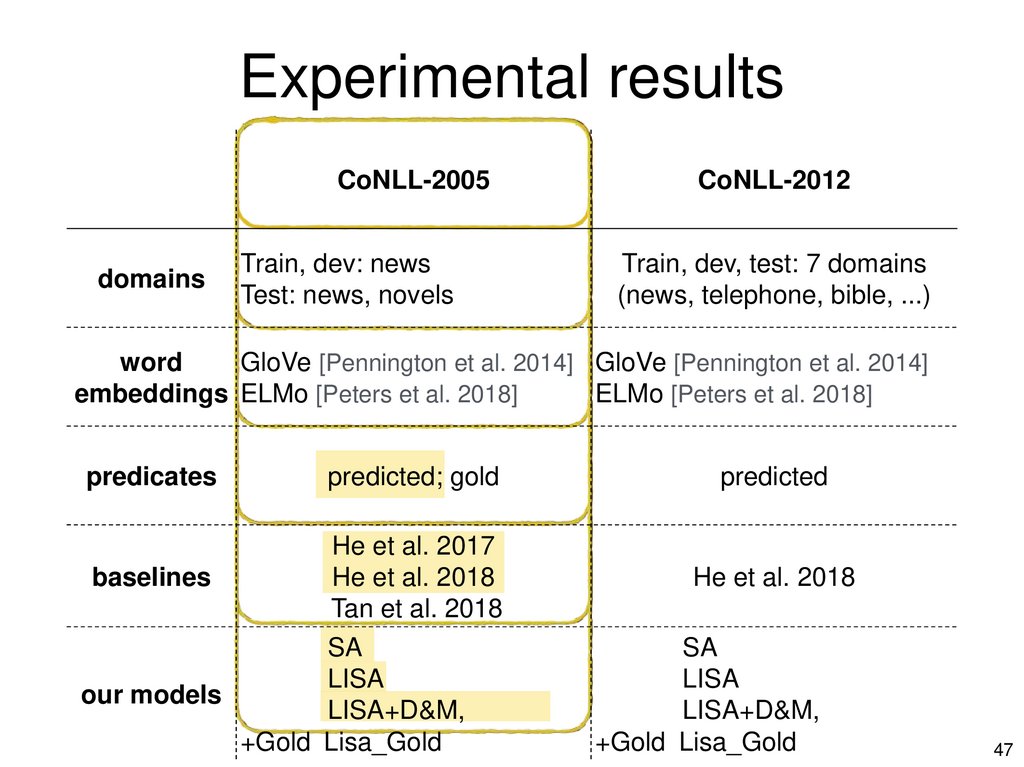

Experimental results

| | CoNLL-2005 | CoNLL-2012 |
|---|---|---|
| Domains | Train, dev: news; test: news, novels | Train, dev, test: 7 domains (news, telephone, bible, ...) |
| Word embeddings | GloVe [Pennington et al. 2014]; ELMo [Peters et al. 2018] | GloVe [Pennington et al. 2014]; ELMo [Peters et al. 2018] |
| Predicates | Predicted; gold | Predicted |
| Baselines | He et al. 2017; He et al. 2018; Tan et al. 2018 | He et al. 2018 |
| Our models | SA; LISA; LISA + D&M; LISA + Gold | SA; LISA; LISA + D&M; LISA + Gold |

44.

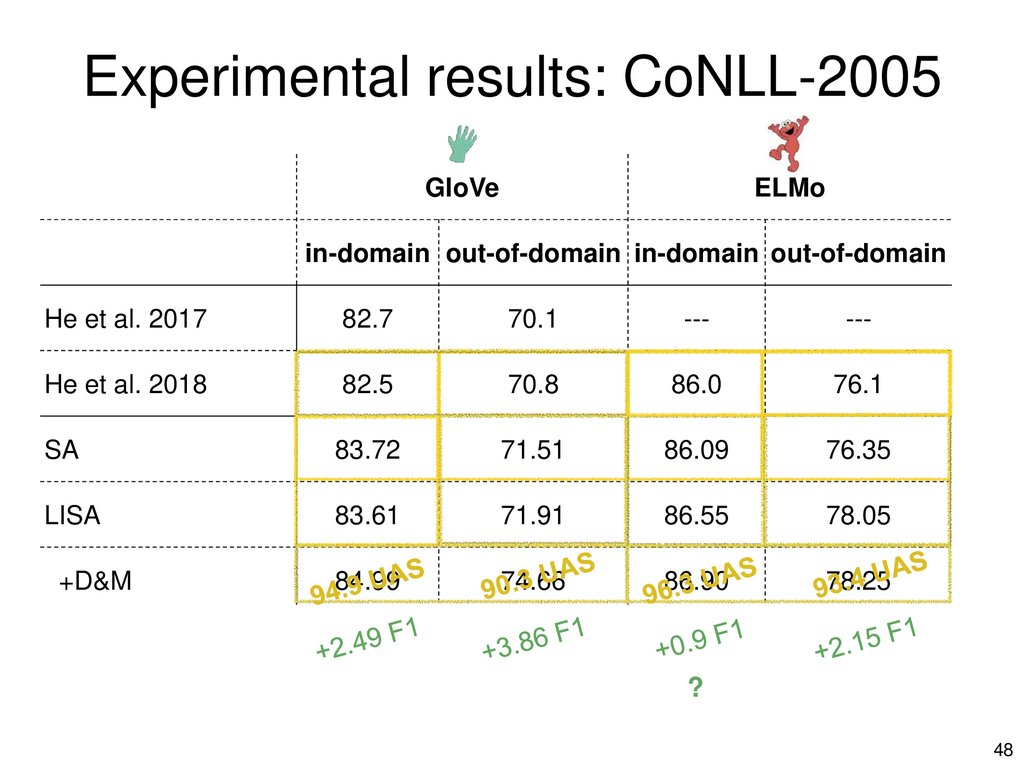

Experimental results: CoNLL-2005

| | GloVe, in-domain | GloVe, out-of-domain | ELMo, in-domain | ELMo, out-of-domain |
|---|---|---|---|---|
| He et al. 2017 | 82.7 | 70.1 | --- | --- |
| He et al. 2018 | 82.5 | 70.8 | 86.0 | 76.1 |
| SA | 83.72 | 71.51 | 86.09 | 76.35 |
| LISA | 83.61 | 71.91 | 86.55 | 78.05 |
| +D&M | 84.99 | 74.66 | 86.90 | 78.25 |
| +Gold | ? | | | |

45.

Experimental results: CoNLL-2005

| | GloVe, in-domain (dev) | ELMo, in-domain (dev) |
|---|---|---|
| He et al. 2017 | 81.5 | --- |
| He et al. 2018 | 81.6 | 85.3 |
| SA | 82.39 | 85.26 |
| LISA | 82.24 | 85.35 |
| +D&M | 83.58 | 85.17 |
| +Gold | 86.81 | 87.63 |
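The F1 scores in these tables are computed over labeled argument spans. A minimal sketch of labeled span precision, recall and F1, assuming each span is identified by its predicate position, boundaries and label; the official CoNLL evaluation script has its own conventions that this sketch does not replicate, and the example spans are illustrative.

```python
def span_f1(gold, predicted):
    """Labeled span precision, recall and F1.

    gold, predicted: sets of (predicate index, start, end, label) tuples;
    a predicted span counts only if all four fields match a gold span.
    """
    correct = len(gold & predicted)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if correct else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    gold = {(2, 0, 1, "ARG0"), (2, 3, 3, "ARG1"), (5, 3, 3, "ARG0"), (5, 6, 6, "ARG1")}
    # A boundary mistake: only "committee" is predicted as ARG0 of "awards".
    predicted = {(2, 1, 1, "ARG0"), (2, 3, 3, "ARG1"), (5, 3, 3, "ARG0"), (5, 6, 6, "ARG1")}
    p, r, f = span_f1(gold, predicted)
    print(f"P={p:.2f} R={r:.2f} F1={f:.2f}")
```

Here a single boundary mistake out of four spans costs both a false positive and a false negative, giving precision, recall and F1 of 0.75.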

46.

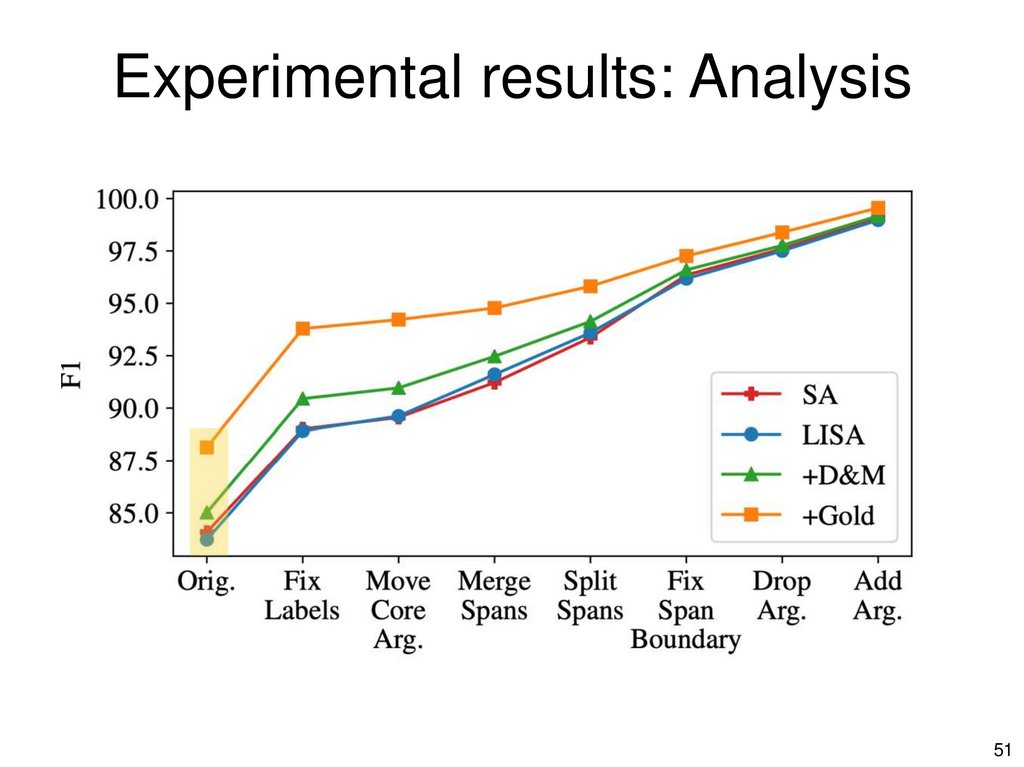

Experimental results: Analysis

47.

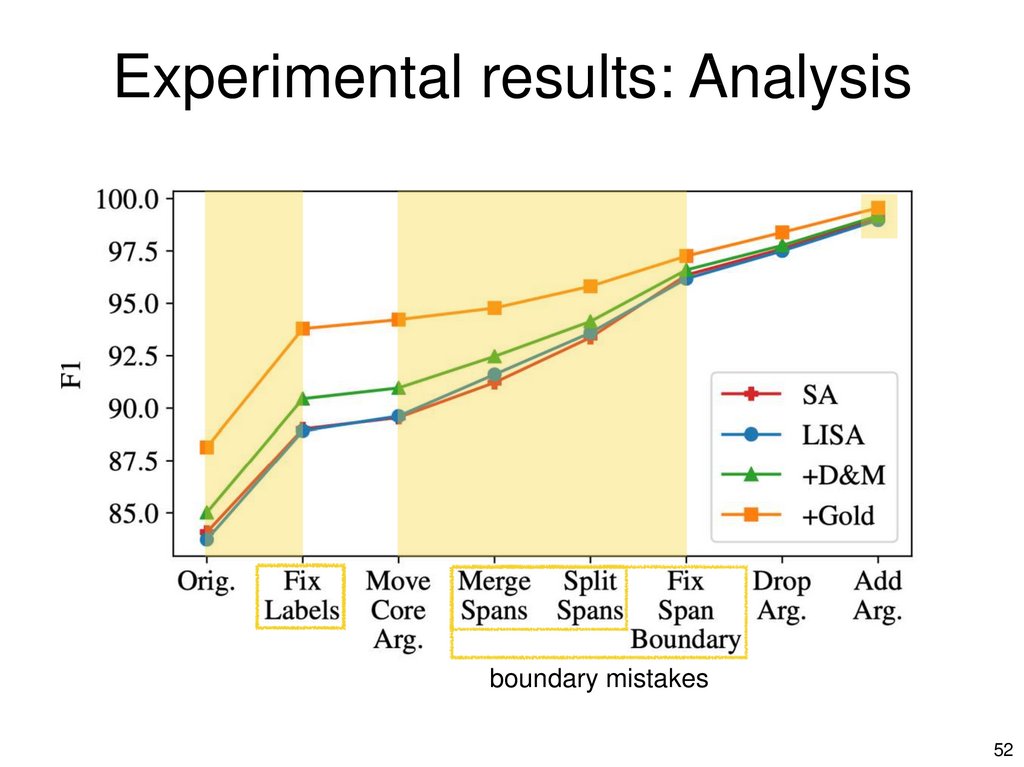

Experimental results: Analysis

[Figure: boundary mistakes]

48.

Summary

LISA: Multi-task learning + multi-head self-attention trained to attend to syntactic parents
– Achieves state-of-the-art F1 on PropBank SRL
– Linguistic structure improves generalization
– Fast: encodes the sequence only once to predict predicates, parts of speech, labeled dependency parse, and SRL

Everyone wants to run NLP on the entire web:
– accuracy, robustness, computational efficiency.

Models & Code: https://github.com/strubell/LISA

I am on the academic job market this spring!
