PHL103 Lecture 11-Functionalism

1.

PHL103 Lecture 11-Functionalism

2.

I. Multiple realizability: A challenge for identity theory & motivation for functionalism

II. Machine functionalism

III. Functionalism and the mind/body problem

IV. Some problems for functionalism

3. I. Multiple realizability: A challenge for identity theory & motivation for functionalism

4.

Recall the basic thesis of identity theory

Every mental state is identical to a physical state of the brain.

Identity theory (Type-physicalism): For any mental state M, there is a brain state B, such that M = B.

The thesis identifies mental types (e.g. anger, pain, belief) with types of brain state:

PAIN = C-FIBER ACTIVATION

VISUAL IMAGE = BRAIN STATE V

BELIEF = BRAIN STATE B

DESIRE = BRAIN STATE D

5.

Recall the basic thesis

Every mental state is identical to a physical state of the brain.

Identity theory (Type-physicalism): For any mental state M, there is a brain state B, such that M = B.

The thesis identifies mental types (e.g. anger, pain, belief) with types of brain state:

PAIN = C-FIBER ACTIVATION

Implications:

There are no changes in mental life without changes in the physical states of the brain (supervenience).

An organism is in pain if and only if a certain type of brain state occurs; C-fiber activation is both necessary and sufficient for the mental state PAIN.

6.

The success conditions for identity theory

“Consider what the brain-state theorist has to do to make good on his claims. He has to specify a physical-chemical state such that any organism (not just a mammal) is in pain if and only if (a) it possesses a brain of suitable physical-chemical structure; and (b) its brain is in that physical-chemical state. This means that the physical-chemical state in question must be a possible physical state of a mammalian brain, a reptilian brain, a mollusc’s brain…etc. At the same time, it must not be a possible (physically possible) state of the brain of any physically possible creature that cannot feel pain. Even if such a state can be found, it must be nomologically certain that it will also be a state of the brain of any extra-terrestrial life that may be found that will be capable of feeling pain before we can even entertain the supposition that it may be pain” (Putnam, ‘The Nature of Mental States’, 77).

7.

Some thought experiments

Octopus pain

8.

Some thought experiments

Octopus pain

Martian pain

9.

The problem: success conditions for identity theory

“…the hypothesis becomes even more ambitious when we realize that the brain-state theorist is not just saying that pain is a brain state; he is, of course, concerned to maintain that every psychological state is a brain state. Thus if we can find even one psychological predicate which can clearly be applied to both a mammal and an octopus…, but whose physical-chemical ‘correlate’ is different in the two cases, the brain-state theory has collapsed” (Putnam 77).

10.

Chauvinism and the challenge of multiple realizability

Multiple realizability: Mental states can be realized by different physical processes or structures.

This is what the thought experiments teach us: it is possible for an octopus, a human, and a Martian to all experience the same type of mental state (e.g. PAIN) in spite of significant neural differences (such that they could not be in the same type of brain state).

11.

Chauvinism and the challenge of multiple realizability

Multiple realizability: Mental states can be realized by different physical processes or structures.

The possibility of multiple realizability casts significant doubt on the ultimate success of identity theory. The doubt can be expressed in two ways:

Conceptual worry: Agents with the same types of mental states but differing brains are easily conceived. So identity theory looks unpromising as a metaphysics of mind.

Empirical worry: It seems “overwhelmingly likely” that we will discover at least one pair of creatures with the same mental state type but distinct neural structures. So identity theory looks doomed as a science of the mind.

12.

Chauvinism and the challenge of multiple realizability

This challenge can also be seen as a motivation for an alternative theory of mind: Functionalism.

Instead of thinking of mental states as identified with brain states, we allow that they are realized by brain states (in humans), and think about the states themselves in terms of their causal-functional roles…

13. II. Machine functionalism

14.

Background: Computational technology and the Turing Test

15.

Functionalism and computers

Machine functionalism (and often functionalism generally) is sometimes described by a computational analogy:

the mind is to the brain as computer software is to computer hardware

To understand the analogy, we first have to understand in basic terms how a piece of software (a program) relates to the computer running it.

16.

Turing machines (Alan Turing, 1936)

Turing machines are abstract computational devices, used to clarify and analyze what can be computed.

The “state machine” involves a set of instructions that determine how, given any input, the machine changes its internal states and outputs. This instruction program is called a machine table.

Turing was interested in whether, in principle, any given task could be computed in this way.

We are interested in the concept of a Turing machine table, and how it allows us to define inner states in terms of their relations to inputs and outputs.

17.

Turing machines

A modified example

Imagine a simple computing machine, whose task is to distribute pop given the appropriate combination of input.

Pop Machine

Suppose the pop machine accepts only nickels (N) and dimes (D) as input.

The internal states consist only of (0), (5), and (10): the credit, in cents, that the machine is holding.

A pop is output only when the inserted coins bring the credit to 15 cents or more (a pop costs 15 cents); otherwise the machine waits.

With these details in hand, we can construct a set of rules for the operation of the machine.

18.

Turing machines

A modified example

Imagine a simple computing machine, whose task is to distribute pop given the appropriate combination of input.

Pop Machine

Rules:

If input (N) and in state (0), then enter state (5) and output (wait).

If input (N) and in state (5), then enter state (10) and output (wait).

If input (N) and in state (10), then enter state (0) and output (pop).

If input (D) and in state (0), then enter state (10) and output (wait).

If input (D) and in state (5), then enter state (0) and output (pop).

If input (D) and in state (10), then enter state (5) and output (pop).

Note that these rules ensure task completion and continued

functioning of the machine.
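The six rules above can be written down directly as a lookup table. The following is only a minimal sketch in Python: the names `RULES` and `run_machine` are illustrative, the output is written uniformly as "pop", and the machine is assumed to start in state (0).

```python
# The six rules, keyed by (current state, input coin).
# Each entry gives (next state, output). States are credit in cents.
RULES = {
    (0, "N"): (5, "wait"),
    (5, "N"): (10, "wait"),
    (10, "N"): (0, "pop"),
    (0, "D"): (10, "wait"),
    (5, "D"): (0, "pop"),
    (10, "D"): (5, "pop"),
}


def run_machine(coins, state=0):
    """Feed a sequence of coins ("N" or "D") to the machine.

    Returns the list of outputs and the final internal state.
    Starting in state 0 is an assumption of this sketch.
    """
    outputs = []
    for coin in coins:
        state, output = RULES[(state, coin)]
        outputs.append(output)
    return outputs, state


print(run_machine(["N", "N", "N"]))  # three nickels
print(run_machine(["D", "D"]))       # two dimes leave 5 cents of credit
```

Every (state, input) pair has an entry, so the machine never gets stuck: after each pop it is back in a state from which it can keep taking coins.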

19.

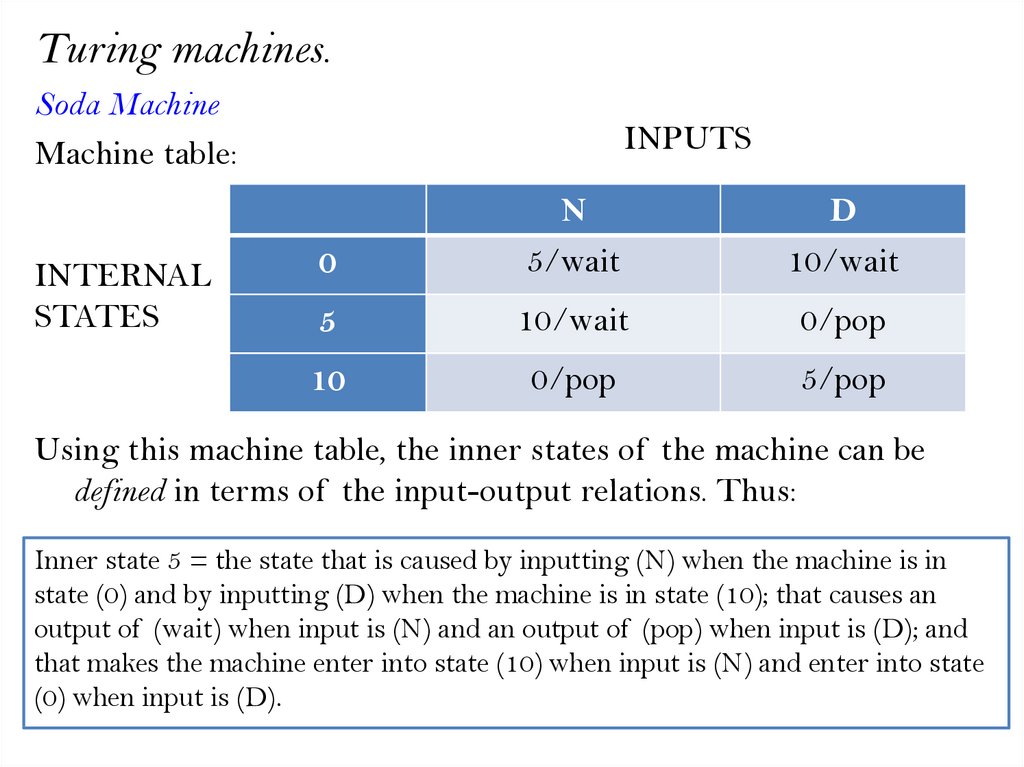

Turing machines

Pop Machine

Rules:

If input (N) and in state (0), then enter state (5) and output (wait).

If input (N) and in state (5), then enter state (10) and output (wait).

If input (N) and in state (10), then enter state (0) and output (pop).

If input (D) and in state (0), then enter state (10) and output (wait).

If input (D) and in state (5), then enter state (0) and output (pop).

If input (D) and in state (10), then enter state (5) and output (pop).

Machine table:

| Internal state | Input N | Input D |
|---|---|---|
| 0 | 5/wait | 10/wait |
| 5 | 10/wait | 0/pop |
| 10 | 0/pop | 5/pop |

20.

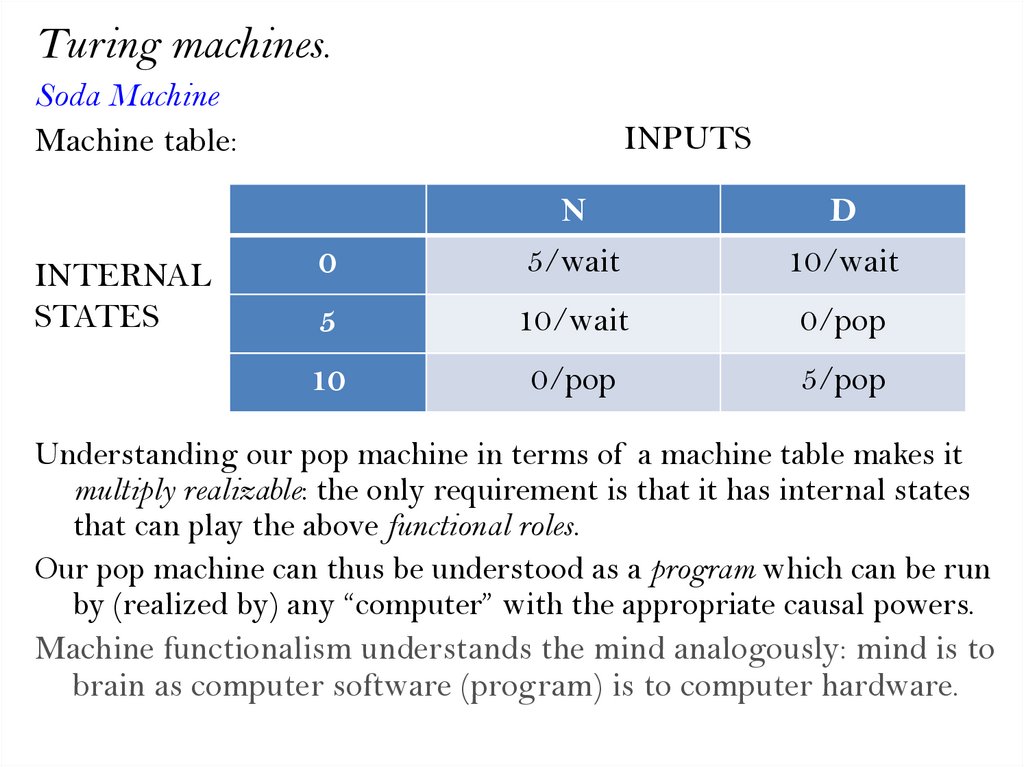

Turing machines

Pop Machine

Machine table:

| Internal state | Input N | Input D |
|---|---|---|
| 0 | 5/wait | 10/wait |
| 5 | 10/wait | 0/pop |
| 10 | 0/pop | 5/pop |

Using this machine table, the inner states of the machine can be defined in terms of the input-output relations. Thus:

Inner state 5 = the state that is caused by inputting (N) when the machine is in state (0) and by inputting (D) when the machine is in state (10); that causes an output of (wait) when input is (N) and an output of (pop) when input is (D); and that makes the machine enter into state (10) when input is (N) and enter into state (0) when input is (D).

21.

Turing machines

Pop Machine

Inner state 5 = (i) the state that is caused by inputting (N) when the machine is in state (0) and by inputting (D) when the machine is in state (10); (ii) that causes an output of (wait) when input is (N) and an output of (pop) when input is (D); and (iii) that makes the machine enter into state (10) when input is (N) and enter into state (0) when input is (D).

Note that ‘inner state 5’ is thus defined by three types of clause:

(i) the inputs that typically cause it

(ii) the outputs it typically causes

(iii) the inner state changes it typically causes.

All the internal states of the machine can be defined accordingly: in

terms of the causal-functional role in the system.
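The three clauses can be read straight off the machine table. Here is a rough sketch in Python, assuming the table is stored as a dictionary from (state, input) to (next state, output); `TABLE` and `functional_role` are illustrative names, not part of the lecture.

```python
# Machine table for the pop machine: (state, input) -> (next state, output).
TABLE = {
    (0, "N"): (5, "wait"),
    (0, "D"): (10, "wait"),
    (5, "N"): (10, "wait"),
    (5, "D"): (0, "pop"),
    (10, "N"): (0, "pop"),
    (10, "D"): (5, "pop"),
}


def functional_role(state, table):
    """Collect the three clauses that define `state` in `table`."""
    # (i) the (prior state, input) pairs that cause the machine to enter it
    caused_by = sorted(
        (prior, coin) for (prior, coin), (nxt, _) in table.items() if nxt == state
    )
    # (ii) the output it causes for each input
    outputs = {coin: out for (s, coin), (_, out) in table.items() if s == state}
    # (iii) the inner state it moves to for each input
    transitions = {coin: nxt for (s, coin), (nxt, _) in table.items() if s == state}
    return {"caused_by": caused_by, "outputs": outputs, "transitions": transitions}


role = functional_role(5, TABLE)
print(role["caused_by"])    # inputs (with prior states) that lead into state 5
print(role["outputs"])      # what state 5 outputs for N and for D
print(role["transitions"])  # where state 5 goes next for N and for D
```

Nothing in `functional_role` mentions what state 5 is made of; the state is picked out entirely by its place in the table.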

22.

Turing machines

Pop Machine

Machine table:

| Internal state | Input N | Input D |
|---|---|---|
| 0 | 5/wait | 10/wait |
| 5 | 10/wait | 0/pop |
| 10 | 0/pop | 5/pop |

Understanding our pop machine in terms of a machine table makes it

multiply realizable: the only requirement is that it has internal states

that can play the above functional roles.

Our pop machine can thus be understood as a program which can be run

by (realized by) any “computer” with the appropriate causal powers.

Machine functionalism understands the mind analogously: mind is to

brain as computer software (program) is to computer hardware.
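Multiple realizability can be illustrated with two differently built "machines" that run the same program. The sketch below is hypothetical: `TableMachine` looks up an explicit machine table, while `CounterMachine` has no table at all and simply keeps a running total of cents. Both class names and the `insert` method are assumptions made for illustration.

```python
from itertools import product


class TableMachine:
    """Realization 1: looks each step up in an explicit machine table."""

    TABLE = {
        (0, "N"): (5, "wait"), (0, "D"): (10, "wait"),
        (5, "N"): (10, "wait"), (5, "D"): (0, "pop"),
        (10, "N"): (0, "pop"), (10, "D"): (5, "pop"),
    }

    def __init__(self):
        self.state = 0

    def insert(self, coin):
        self.state, output = self.TABLE[(self.state, coin)]
        return output


class CounterMachine:
    """Realization 2: no table; adds up cents and subtracts the price."""

    PRICE = 15
    VALUES = {"N": 5, "D": 10}

    def __init__(self):
        self.cents = 0

    def insert(self, coin):
        self.cents += self.VALUES[coin]
        if self.cents >= self.PRICE:
            self.cents -= self.PRICE
            return "pop"
        return "wait"


def behaviour(machine_cls, coins):
    """Outputs produced by a fresh machine fed the given coins."""
    machine = machine_cls()
    return [machine.insert(coin) for coin in coins]


# Compare the two realizations on every coin sequence of length 1 to 6.
same = all(
    behaviour(TableMachine, seq) == behaviour(CounterMachine, seq)
    for n in range(1, 7)
    for seq in product("ND", repeat=n)
)
print(same)  # True
```

The two classes differ in their inner workings, yet from the standpoint of the machine table they are the same pop machine, since each has internal states that play the required functional roles.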

23.

Machine functionalism

Mental states can similarly be defined in terms of causal-functional role; in terms of input-output relations specified by a machine table.

A toy theory of pain

Suppose we have

two inner states: pain (P) and relief (R)

two inputs: smashing finger with hammer and taking aspirin

two outputs: saying ‘Ouch!’ and saying ‘Ahhh’.

| Internal state | Input: Hammer smash finger | Input: Take aspirin |
|---|---|---|
| P | P/ ‘Ouch!’ | R/ ‘Ahhh’ |
| R | P/ ‘Ouch!’ | R/ ‘Ahhh’ |

24.

Machine functionalism

A toy theory of pain

| Internal state | Input: Hammer smash finger (HS) | Input: Take aspirin (TA) |
|---|---|---|
| P | P/ ‘Ouch!’ | R/ ‘Ahhh’ |
| R | P/ ‘Ouch!’ | R/ ‘Ahhh’ |

Just as we did with the internal states of the pop machine, we can define these mental states (on this simple theory of pain) by input-output relations.

Mental state P = (i) the state that is caused by input (HS) when one is in state (P) and caused by input (HS) when one is in state (R); (ii) that causes output “Ouch!” when one is in state (P) and input is (HS) and that causes output “Ahhh” when one is in state (P) and input is (TA); and (iii) that causes the system to enter into state (P) when input is (HS) and enter into state (R) when input is (TA).

25.

Machine functionalism

A toy theory of pain

| Internal state | Input: Hammer smash finger (HS) | Input: Take aspirin (TA) |
|---|---|---|
| P | P/ ‘Ouch!’ | R/ ‘Ahhh’ |
| R | P/ ‘Ouch!’ | R/ ‘Ahhh’ |

Mental state P = (i) the state that is caused by input (HS) when one is in state (P) and caused by input (HS) when one is in state (R); (ii) that causes output “Ouch!” when one is in state (P) and input is (HS) and that causes output “Ahhh” when one is in state (P) and input is (TA); and (iii) that causes the system to enter into state (P) when input is (HS) and enter into state (R) when input is (TA).

Note again that the internal states are defined by 3 types of clause:

(i) the inputs that typically cause it

(ii) the outputs it typically causes

(iii) the inner state changes it typically causes.
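The toy theory of pain has the same shape as the pop machine, so it can be sketched the same way. This is only an illustration in Python: `PAIN_TABLE` and `run` are made-up names, and starting the subject in state (R) is an assumption the toy theory does not specify.

```python
# Toy theory of pain: (state, input) -> (next state, output).
# States: "P" (pain), "R" (relief).
# Inputs: "HS" (hammer smash finger), "TA" (take aspirin).
PAIN_TABLE = {
    ("P", "HS"): ("P", "Ouch!"),
    ("P", "TA"): ("R", "Ahhh"),
    ("R", "HS"): ("P", "Ouch!"),
    ("R", "TA"): ("R", "Ahhh"),
}


def run(stimuli, state="R"):
    """Apply a sequence of inputs and return (utterances, final state).

    The starting state "R" is an assumption of this sketch.
    """
    utterances = []
    for stimulus in stimuli:
        state, said = PAIN_TABLE[(state, stimulus)]
        utterances.append(said)
    return utterances, state


print(run(["HS", "HS", "TA"]))
```

On this table, being in state P just is occupying the slot that hammer smashes lead into, that produces ‘Ouch!’ or ‘Ahhh’ depending on the next input, and that aspirin leads out of.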

26.

Machine functionalism and computational theory of mind

Thus early functionalism theorized the mind as an (admittedly complex) computer, where mental states are understood in terms of their computational role.

27.

Machine functionalism and computational theory of mind

The computational analogy is a powerful one: to think of minds in terms of mental operations (a la computational operations) is to abstract from the material realization (the hardware) of those operations and focus on the operations themselves, in terms of their functional roles.

Minds can thus be understood in terms of these higher level properties, not in terms of lower level physical properties (e.g. brains or computer chips).

Machine functionalism accommodates multiple realizability:

computers are multiply realizable

if minds are computers, then minds will be multiply realizable

28.

Functionalism-The basic idea

Mental states are internal states of a system, identified with the causal-functional role they play in that system.

For any state Φ, these roles are defined in terms of 3 types of clause:

(i) Input clause: the inputs that typically cause Φ

(ii) Output clause: the outputs Φ typically causes

(iii) Internal mediation clause: the inner state changes Φ typically causes.

Mental states are thus identified with a job description:

The state PAIN is identified with…

The state VISUAL IMAGE is identified with…

The state BELIEF is identified with…

…

29.

Arguments and motivation

Inference to the best explanation

“I contend…that this hypothesis, in spite of its admitted vagueness, is far

less vague than the ‘physical-chemical’ state hypothesis is today, and far

more susceptible to investigation of both a mathematical and an

empirical kind. Indeed, to investigate this hypothesis is just to attempt

to produce ‘mechanical’ models of organisms—and isn’t this, in a sense,

just what psychology is about?” (Putnam 76).

“I shall now compare the hypothesis just advanced with (a) the hypothesis

that pain is a brain state, and (b) the hypothesis that pain is a behavior

disposition” (Putnam 76).

The implication, once identity theory and other materialist theories

are considered, is that by inference to the best explanation,

functionalism is the best theory.

…What does it better explain?

30.

Arguments and motivation

Multiple realizability

31.

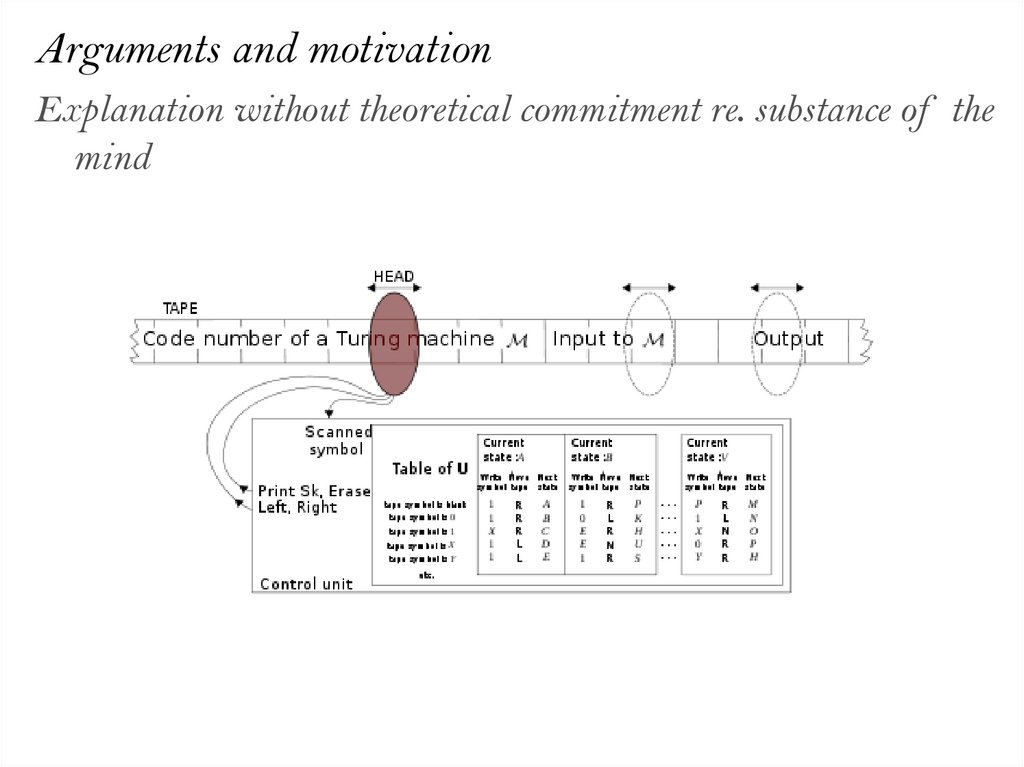

Arguments and motivation

Explanation without theoretical commitment re. substance of the mind

32.

Arguments and motivation

Accommodation of the causal role of the mental

Substance Dualism

33.

Arguments and motivation

Inference to the best explanation

Insofar as functionalism provides the best explanation with respect to these and other features of the mind, we have good abductive evidence that it is the correct theory.

34. III. Functionalism and the mind/body problem

35.

The mind/body problem

The logical problem

Inconsistent tetrad:

(1) The human body is a material thing.

(2) The human mind is a spiritual thing.

(3) Mind and body causally interact.

(4) Spirit and matter do not causally interact.

Possible solutions:

Reject (4): Spirit and matter do causally interact.

Dualist Interactionism

(Descartes)

36.

The mind/body problem

The logical problem

Inconsistent tetrad:

(1) The human body is a material thing.

(2) The human mind is a spiritual thing.

(3) Mind and body causally interact.

(4) Spirit and matter do not causally interact.

Possible solutions:

Reject (2): The human mind is a material thing.

Materialism: Identity

theory

37.

The mind/body problem

The logical problem

Inconsistent tetrad:

(1) The human body is a material thing.

(2) The human mind is a spiritual thing.

(3) Mind and body causally interact.

(4) Spirit and matter do not causally interact.

Possible solutions:

Reject (2): The human mind is a material thing.

Materialism: Identity

theory

Functionalism?

38.

The mind/body problem

The logical problem

Inconsistent tetrad:

(1) The human body is a material thing.

(2) The human mind is a spiritual thing.

(3) Mind and body causally interact.

(4) Spirit and matter do not causally interact.

Possible solutions:

Functionalism can remain non-committal on which proposition to deny: it requires only that mental states are causal-functional roles.

And since causal-functional roles are multiply realizable, the mind could be material OR immaterial.

Functionalism?

39.

The mind/body problem

The logical problem

Inconsistent tetrad:

(1) The human body is a material thing.

(2) The human mind is a spiritual thing.

(3) Mind and body causally interact.

(4) Spirit and matter do not causally interact.

Possible solutions:

So although functionalists tend to be materialists, functionalism as such provides an explanation of mental states without committing to the substantial nature of the mind.

Functionalism?
