
1.

Prof. Dhiya Al-Jumeily

Professor of Software Engineering/Associate Dean

Faculty of Engineering and Technology

d.aljumeily@ljmu.ac.uk


3.

WHERE IS LIVERPOOL?

• Good transport links with the rest of Britain

• 40 minutes from Manchester International Airport

• 2 hours by train from London

• One of the UK’s top tourist destinations

• Home to Liverpool and Everton football clubs

4.

Liverpool is officially the UK’s friendliest city (Condé Nast Readers Travellers Awards)


14. COMPUTING

Inspired by the Past, Motivating the Future

15.

• Conventional vs Biologically Inspired Computing

• Big Data and Data Science

• Application of Data Science in Healthcare and Medicine

16. What is computing?

• Applied computing is a field within science which applies practical approaches of computer science to real-world problems across multiple disciplines to produce effective solutions.

• The techniques used within computing open up possibilities for other disciplines, which can draw on them for solutions and improvements to tricky problems that are simply too complex or too large for humans to answer unaided.

• Applied computing uses various computer science techniques, such as algorithms, databases and networks, and more modern approaches such as deep learning and Big Data, to build systems and applications which can be used in the real world.

• Applied computing has been effective in many areas for developing solutions which can analyse data to provide informative results for real-world applications. The following are some of the areas in which applied computing has been used effectively:

Education and Learning Techniques

Health and Medicine

Gaming

Business

Economics

17. Progress in Early Computing

• Applications in computing and information processing have existed since ancient times. Such precursors of the modern computing era now serve to demonstrate both the conceptual and the technological difficulties in the evolution of such processing capabilities during the pre-digital age.

• Early examples of applied computing include the well-known abacus, the earliest computational instrument on record, along with less widely known mechanical artefacts such as the Antikythera mechanism, an intricate analog device developed during the first century BC to calculate astronomical events.

18. Progress in Early Computing


• However, despite the endurance of both manual arithmetic devices and one-of-a-kind automatic mechanisms during periods of antiquity, the position of computing remained largely unchanged up until 1800, a time at which the effects of the industrial revolution had become well established. It is estimated that fewer than 100 automatic computing mechanisms were ever built prior to this time.

19. Mechanical Computing and the Industrial Revolution

• Following the turn of the 19th century, a sustained transition began to occur from devices that could perform arithmetic to devices that could represent logic through mechanical operation. During this time, commercially viable devices that could accept task-specific “programs” were developed, such as the Jacquard loom, developed in 1804.

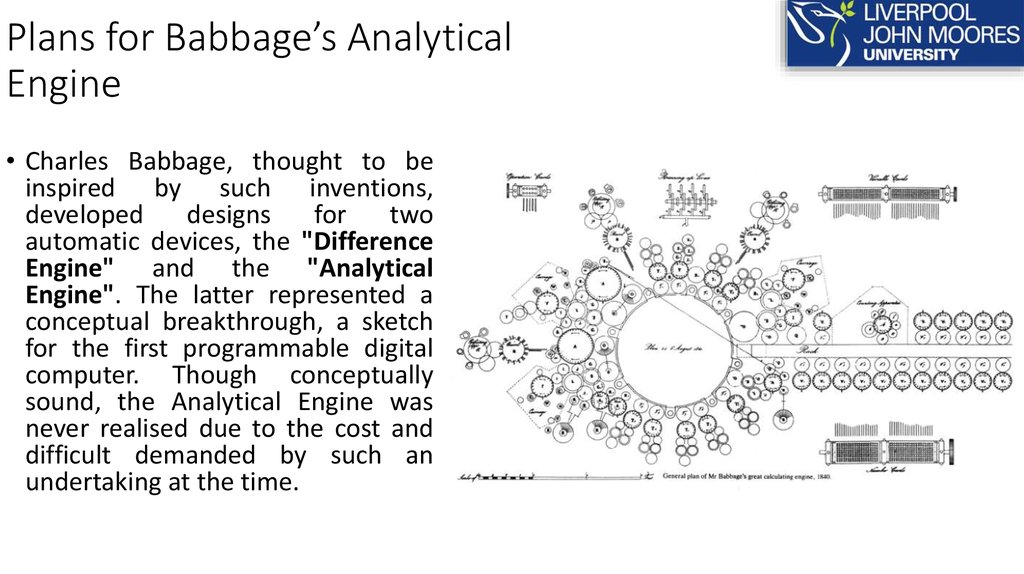

20. Plans for Babbage’s Analytical Engine

• Charles Babbage, thought to be inspired by such inventions, developed designs for two automatic devices, the “Difference Engine” and the “Analytical Engine”. The latter represented a conceptual breakthrough: a sketch for the first programmable digital computer. Though conceptually sound, the Analytical Engine was never realised due to the cost and difficulty demanded by such an undertaking at the time.

21. Introduction of Conventional Computing

• Automated applied computing began in the form of machines that were created to perform information processing tasks specific to a goal of interest. The physical machine could be programmed to alter the nature of the task, as in the case of the Jacquard loom. However, if the goal of interest changed, the physical machine itself (the substrate upon which the computation was run) would have to be redesigned and reconstructed. In 1936, a theoretical concept known as the “Universal Turing Machine” was introduced by Alan Turing in a paper titled “On Computable Numbers, with an Application to the Entscheidungsproblem”.

• Turing had discovered a property of computation that would enable the full potential of computing applications to be unleashed. He showed that, given a small set of simple primitive operations, any possible computation could be carried out by a single machine without redesigning the machine itself; only the program it is given needs to change. Conversely, the same realisation showed that most previously developed machinery was fundamentally limited, since not all of the so-called primitives were incorporated into these devices. The notion of “Turing completeness” is now well recognised and is fundamental to the design of all general-purpose computing systems, having opened the way for both modern applied computing research and the exploration of artificial intelligence.
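The idea of a machine driven by a table of primitive operations can be shown with a short program. Below is a minimal sketch of a single-tape Turing machine simulator. The rule table `BINARY_INCREMENT` is a hypothetical example and does not come from Turing's paper: it adds 1 to a binary number. Swapping in a different table changes the task without touching the simulator, which is the "reprogram rather than rebuild" point made above.

```python
from typing import Dict, Tuple

# (state, symbol read) -> (symbol to write, head move, next state)
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

def run_turing_machine(rules: Rules, tape: str, state: str = "start",
                       blank: str = "_", max_steps: int = 10_000) -> str:
    """Run a single-tape Turing machine until it reaches the 'halt' state."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head, steps = 0, 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    # Read back the tape in positional order, dropping surrounding blanks
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)

# Hypothetical rule table: add 1 to a binary number written on the tape.
BINARY_INCREMENT: Rules = {
    ("start", "0"): ("0", +1, "start"),  # scan right to the end of the number
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),  # step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, done
    ("carry", "_"): ("1", 0, "halt"),    # ran off the left edge: new leading 1
}

print(run_turing_machine(BINARY_INCREMENT, "1011"))  # 1100
```

The simulator knows nothing about arithmetic. All task-specific behaviour lives in the rule table, which is data rather than hardware.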

22.

Conventional vs Biologically Inspired Computing

23. Conventional Computing

A computer does what it is programmed to do! Conventional computers follow a set of ‘well-defined’ instructions in order to solve a problem.

When equipped with the appropriate rules, a computer can perform many tasks better or faster than humans. For example, a computer outperformed the world chess champion in the late 1990s (IBM’s Deep Blue defeated Garry Kasparov in 1997).

playbuzz.com

BUT conventional computing ‘struggles’ with certain types of tasks!


26.

Aoccdrnig to a rscheearch at an Elingsh uinervtisy, it deosn't mttaer in waht oredr the ltteers in a wrod are, the olny iprmoetnt tihng is taht frist and lsat ltteer is at the rghit pclae. The rset can be a toatl mses and you can sitll raed it wouthit porbelm.

[rscheearch at Cmabrigde Uinervtisy]

27.

biometricupdate.com/inhabitat.com

28. Conventional Computing - Challenges

Examples:

• Recognising complex patterns such as human faces or emotions

• Recognising a variety of handwriting

• Identifying the words and context in spoken language

• Driving a car through busy streets

• Decision making

Scenarios associated with:

• Limited or no knowledge

• Context

• New or previously unseen situations

• An extremely large number of possible solutions to choose from

29.

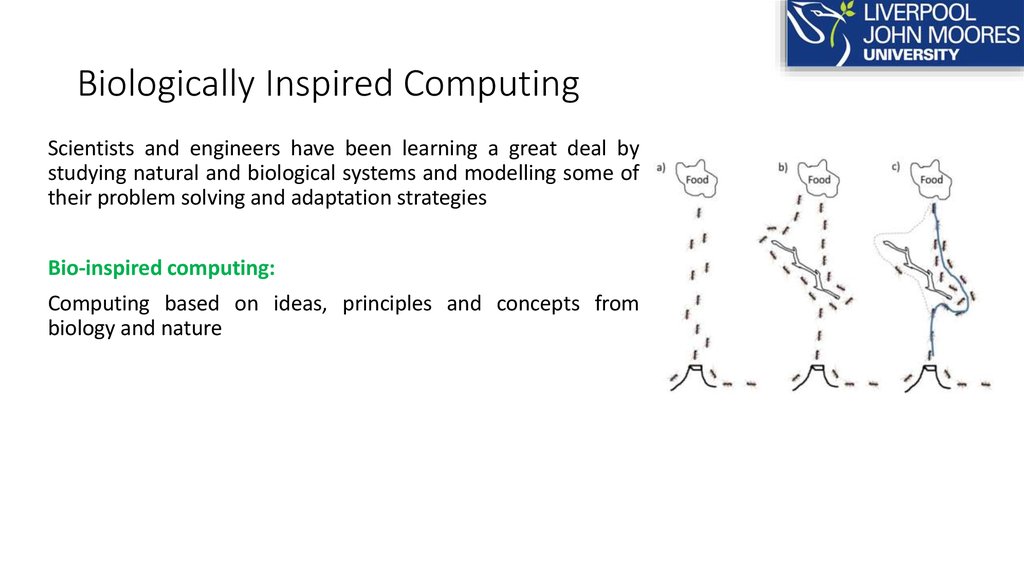

Biologically Inspired Computing

30. Biologically Inspired Computing

Scientists and engineers have been learning a great deal by studying natural and biological systems and modelling some of their problem-solving and adaptation strategies.

Bio-inspired computing: computing based on ideas, principles and concepts from biology and nature.

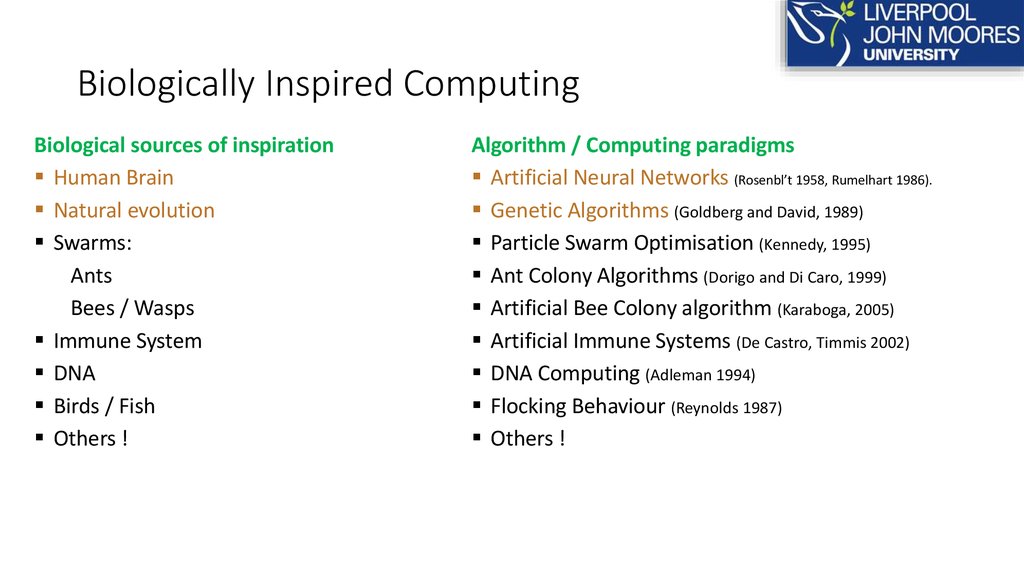

31. Biologically Inspired Computing

Biological sources of inspiration:

• Human brain

• Natural evolution

• Swarms: ants, bees/wasps

• Immune system

• DNA

• Birds/fish

• Others!

Algorithms / computing paradigms:

• Artificial Neural Networks (Rosenblatt 1958; Rumelhart 1986)

• Genetic Algorithms (Goldberg 1989)

• Particle Swarm Optimisation (Kennedy and Eberhart 1995)

• Ant Colony Algorithms (Dorigo and Di Caro 1999)

• Artificial Bee Colony algorithm (Karaboga 2005)

• Artificial Immune Systems (De Castro and Timmis 2002)

• DNA Computing (Adleman 1994)

• Flocking Behaviour (Reynolds 1987)

• Others!

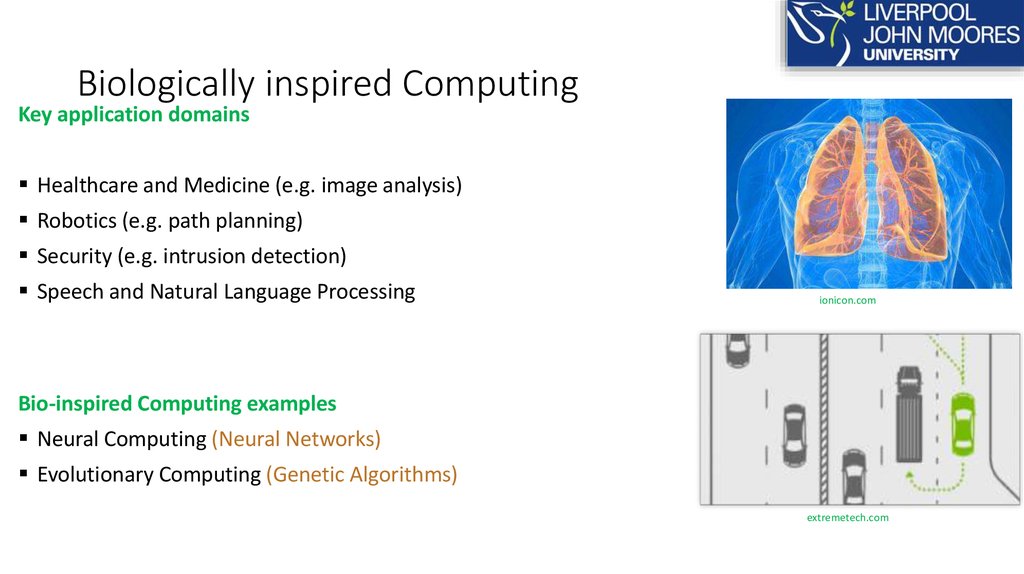

32. Biologically inspired Computing

Key application domains:

• Healthcare and Medicine (e.g. image analysis)

• Robotics (e.g. path planning)

• Security (e.g. intrusion detection)

• Speech and Natural Language Processing

ionicon.com

Bio-inspired computing examples:

• Neural Computing (Neural Networks)

• Evolutionary Computing (Genetic Algorithms)

extremetech.com

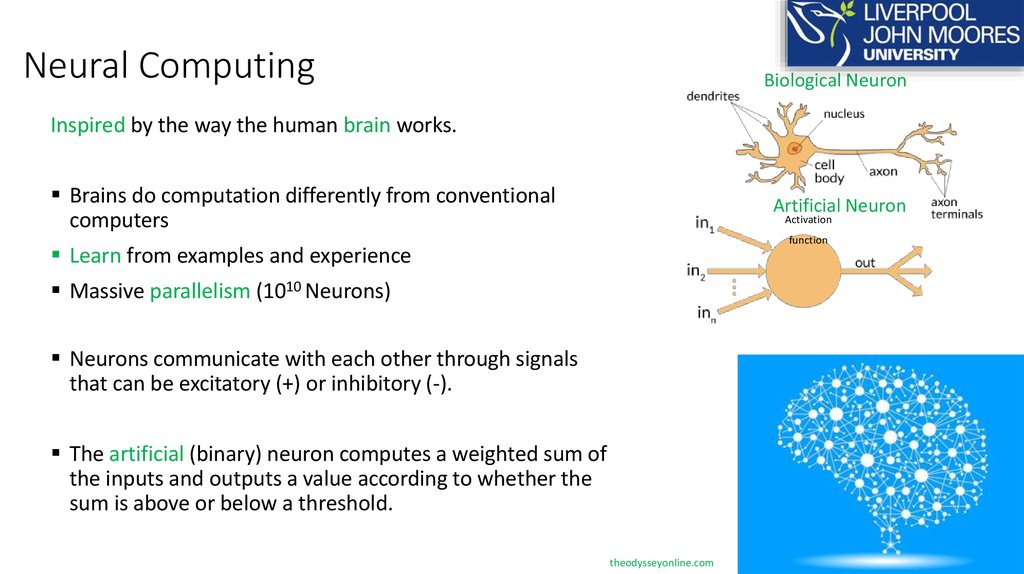

33. Neural Computing

Inspired by the way the human brain works. Brains do computation differently from conventional computers:

• Learn from examples and experience

• Massive parallelism (10^10 neurons)

• Neurons communicate with each other through signals that can be excitatory (+) or inhibitory (-)

[Figure: biological neuron and artificial neuron with activation function]

The artificial (binary) neuron computes a weighted sum of the inputs and outputs a value according to whether the sum is above or below a threshold.

theodysseyonline.com

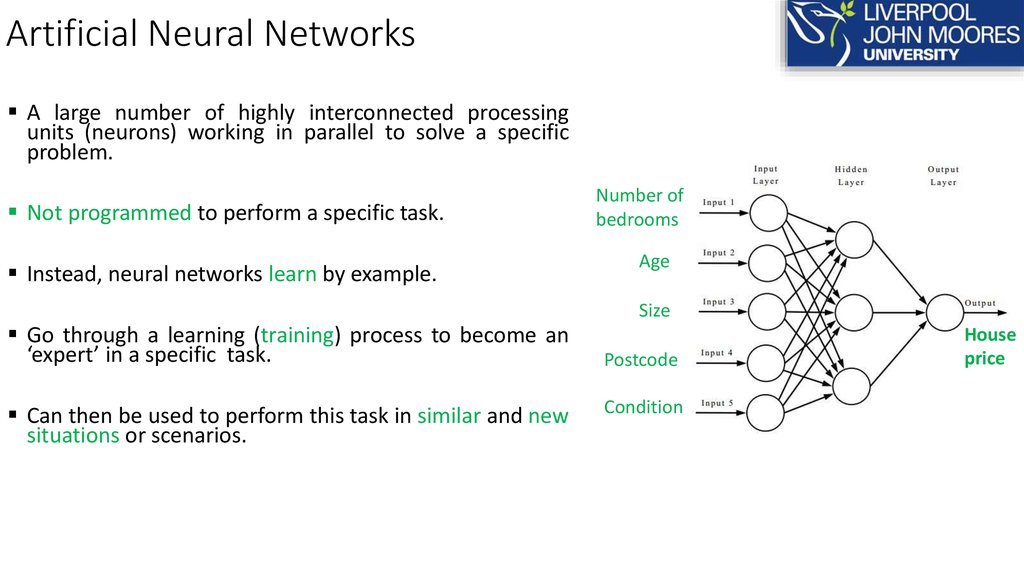
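The binary neuron just described fits in a few lines. The sketch below assumes that a sum exactly equal to the threshold counts as "above", which the slide leaves open. The weights and threshold are hypothetical values chosen for illustration. Positive weights play the role of excitatory (+) signals and negative weights the role of inhibitory (-) ones.

```python
from typing import Sequence

def binary_neuron(inputs: Sequence[float], weights: Sequence[float],
                  threshold: float) -> int:
    """Output 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum >= threshold else 0

# Hypothetical weights: two excitatory inputs and one inhibitory input
weights = [0.6, 0.6, -1.0]
print(binary_neuron([1, 1, 0], weights, threshold=1.0))  # 1 (sum is 1.2)
print(binary_neuron([1, 1, 1], weights, threshold=1.0))  # 0 (inhibitory input pulls the sum to about 0.2)
```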

34. Artificial Neural Networks

A large number of highly interconnected processing units (neurons) working in parallel to solve a specific problem.

Not programmed to perform a specific task. Instead, neural networks learn by example.

They go through a learning (training) process to become an ‘expert’ in a specific task, and can then be used to perform this task in similar and new situations or scenarios.

[Figure: example network with inputs number of bedrooms, age, size, postcode and condition, and output house price]
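"Learning by example" is easiest to see with Rosenblatt's perceptron rule, the single-neuron ancestor of the networks cited on slide 31. The sketch below trains one threshold neuron. The slide gives no house-price data, so it uses a hypothetical toy training set instead (the logical AND function). Learning rate, epoch count and zero initial weights are illustrative assumptions.

```python
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], int]

def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    """Threshold unit: 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(examples: List[Example], epochs: int = 20,
                     lr: float = 1.0) -> Tuple[List[float], float]:
    """Learn weights and a bias from labelled examples with the perceptron rule."""
    weights, bias = [0.0] * len(examples[0][0]), 0.0
    for _ in range(epochs):
        for x, target in examples:
            error = target - predict(weights, bias, x)  # 0 when already correct
            # Nudge each weight in proportion to its input and the error
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Hypothetical toy training set: the logical AND function
AND_EXAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(AND_EXAMPLES)
print([predict(weights, bias, x) for x, _ in AND_EXAMPLES])  # [0, 0, 0, 1]
```

Nowhere does the code state the rule "output 1 only when both inputs are 1". The behaviour emerges from repeated corrections against the examples.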

35. Financial Time Series Analysis with Neural Networks

[Figure: RDP + 5 Values plots for the US/EU and JP/UK currency exchange rates, days 0 to 100]

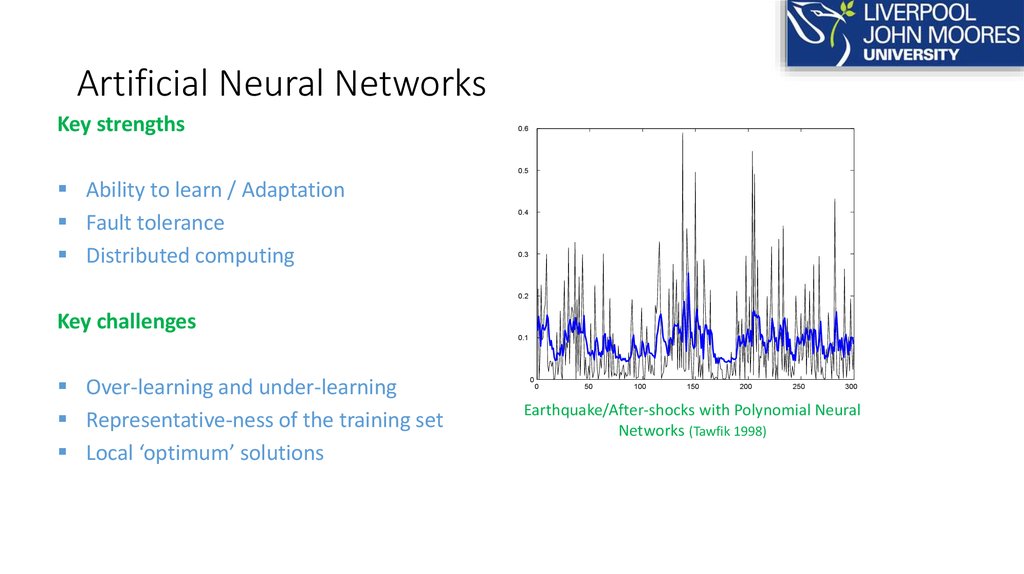

36. Artificial Neural Networks

Key strengths:

• Ability to learn / adaptation

• Fault tolerance

• Distributed computing

Key challenges:

• Over-learning and under-learning

• Representativeness of the training set

• Local ‘optimum’ solutions

[Figure: Earthquake/after-shocks with Polynomial Neural Networks (Tawfik 1998)]

37.

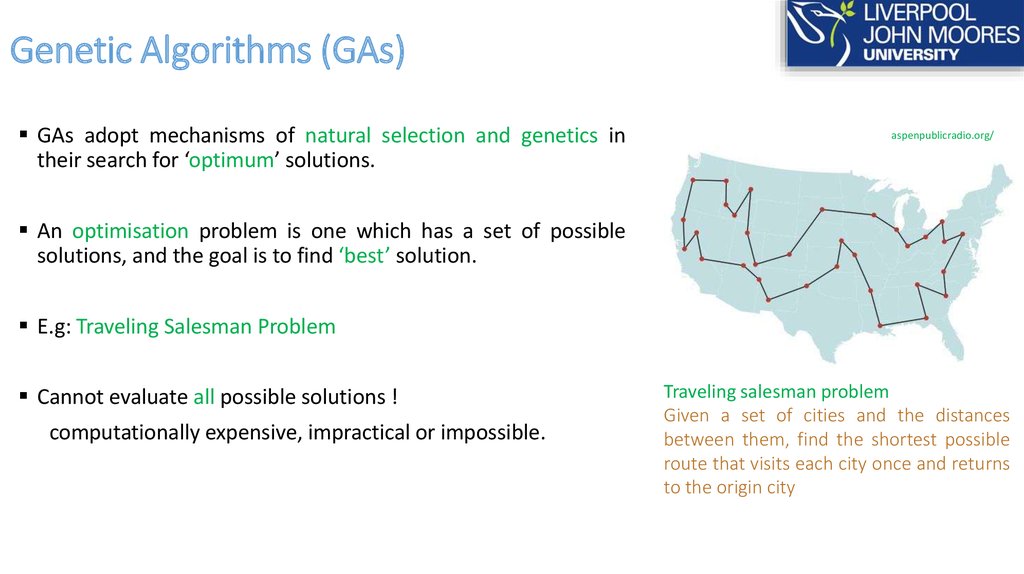

Genetic Algorithms (GAs)

GAs adopt mechanisms of natural selection and genetics in their search for ‘optimum’ solutions.

aspenpublicradio.org/

An optimisation problem is one which has a set of possible solutions, and the goal is to find the ‘best’ solution, e.g. the Travelling Salesman Problem.

We cannot evaluate all possible solutions: doing so is computationally expensive, impractical or impossible.

Travelling Salesman Problem: given a set of cities and the distances between them, find the shortest possible route that visits each city once and returns to the origin city.
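The claim that we "cannot evaluate all possible solutions" can be checked directly. With symmetric distances there are (n-1)!/2 distinct round trips through n cities. The brute-force sketch below is fine for four cities but hopeless long before twenty. The distance matrix is a hypothetical example.

```python
import itertools
import math
from typing import List, Optional, Sequence, Tuple

def tour_length(tour: Sequence[int], dist: List[List[float]]) -> float:
    """Total length of a closed tour that returns to its starting city."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))

def brute_force_tsp(dist: List[List[float]]) -> Tuple[Optional[List[int]], float]:
    """Try every tour starting at city 0; only feasible for a handful of cities."""
    best_tour, best_len = None, math.inf
    for perm in itertools.permutations(range(1, len(dist))):
        tour = [0, *perm]
        length = tour_length(tour, dist)
        if length < best_len:
            best_tour, best_len = tour, length
    return best_tour, best_len

def distinct_tours(n: int) -> int:
    """Distinct round trips through n >= 3 cities with symmetric distances: (n-1)!/2."""
    return math.factorial(n - 1) // 2

# Hypothetical symmetric distance matrix for 4 cities
DIST = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]
print(brute_force_tsp(DIST))  # ([0, 1, 3, 2], 18)
for n in (5, 10, 20):
    print(n, "cities:", distinct_tours(n), "tours")
```

For 20 cities the count already exceeds 6 × 10^16. This explosion is why heuristic searches such as genetic algorithms are used instead of exhaustive enumeration.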

38. Genetic Algorithms (GAs)

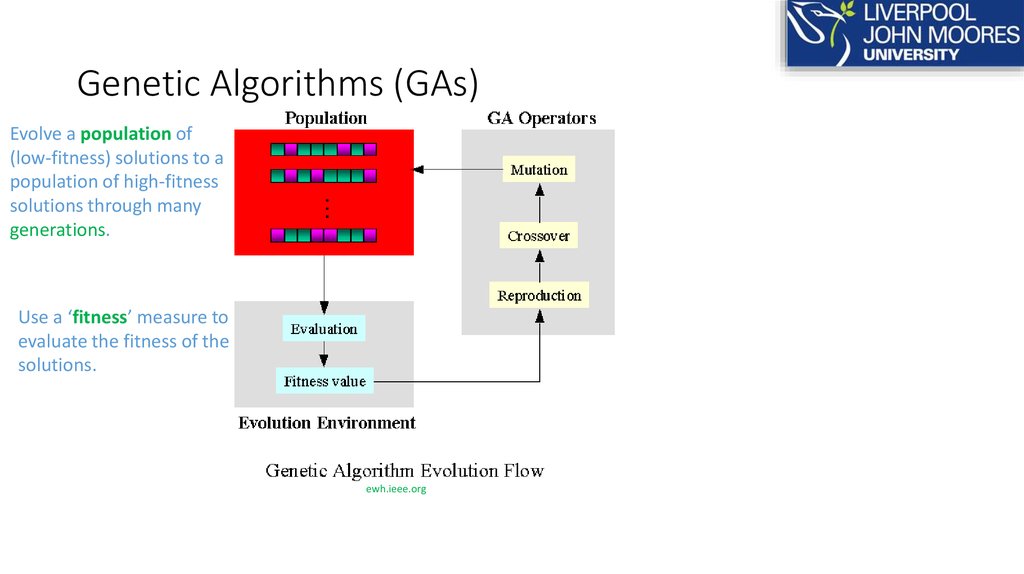

Evolve a population of (low-fitness) solutions to a population of high-fitness solutions through many generations.

Use a ‘fitness’ measure to evaluate the fitness of the solutions.

ewh.ieee.org

39. Genetic Algorithms (GAs)

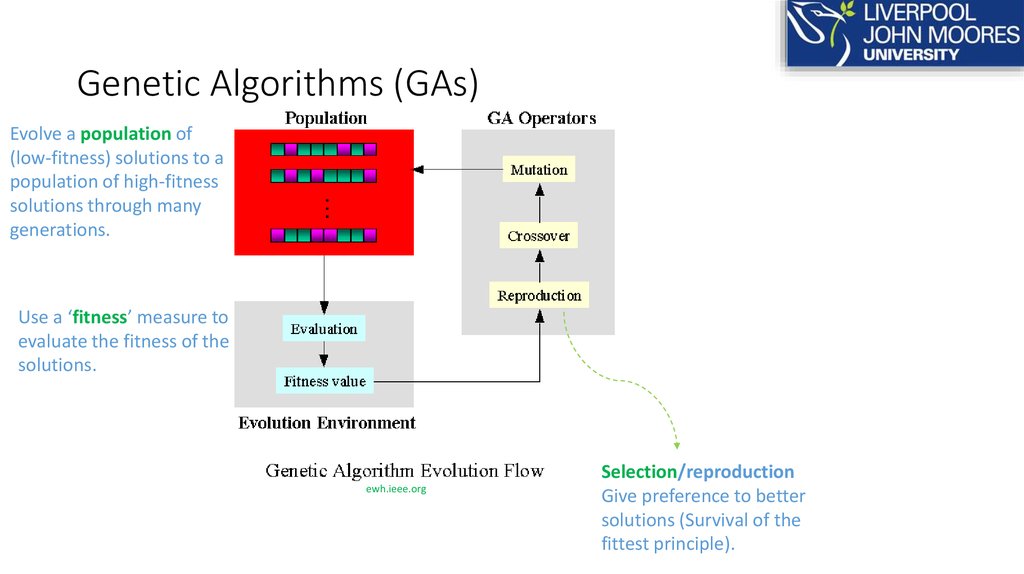


Selection/reproduction: give preference to better solutions (survival of the fittest principle).

40. Genetic Algorithms (GAs)


abrandao.com

Crossover: combination of the parents’ material to form children


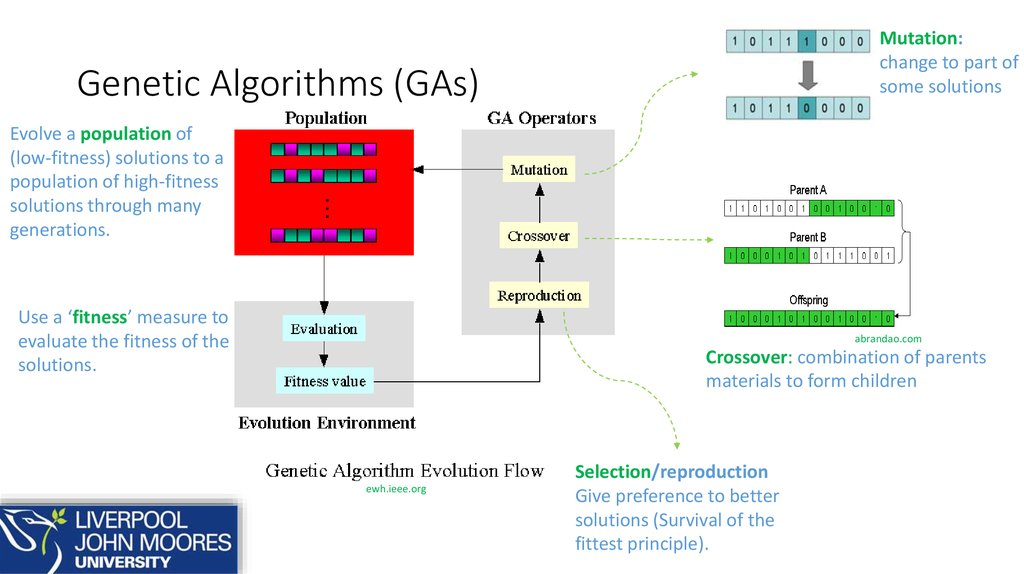

41. Genetic Algorithms (GAs)

Mutation: change to part of some solutions

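The four ingredients on slides 38 to 41 (fitness, selection, crossover and mutation) can be put together as a minimal genetic algorithm. As a toy stand-in problem, this sketch maximises the number of 1 bits in a bit string, often called "OneMax". The tournament selection, single-point crossover, bit-flip mutation, elitism and all parameter values are illustrative assumptions, not the method used in the cited driving work.

```python
import random
from typing import List, Tuple

Genome = List[int]

def fitness(genome: Genome) -> int:
    """Hypothetical fitness ('OneMax'): the number of 1 bits; the optimum is all ones."""
    return sum(genome)

def tournament(pop: List[Genome], rng: random.Random, k: int = 3) -> Genome:
    """Selection: pick k random individuals and keep the fittest."""
    return max(rng.sample(pop, k), key=fitness)

def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """Single-point crossover: combine the parents' material to form a child."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def mutate(genome: Genome, rng: random.Random, rate: float) -> Genome:
    """Mutation: flip each bit with a small probability."""
    return [1 - g if rng.random() < rate else g for g in genome]

def run_ga(length: int = 20, pop_size: int = 30, generations: int = 60,
           mutation_rate: float = 0.02, seed: int = 0) -> Tuple[Genome, List[int]]:
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(length)] for _ in range(pop_size)]
    history = []  # best fitness in each generation
    for _ in range(generations):
        best = max(pop, key=fitness)
        history.append(fitness(best))
        # Elitism: carry the best solution over unchanged, breed the rest
        children = [best]
        while len(children) < pop_size:
            child = crossover(tournament(pop, rng), tournament(pop, rng), rng)
            children.append(mutate(child, rng, mutation_rate))
        pop = children
    best = max(pop, key=fitness)
    history.append(fitness(best))
    return best, history

best, history = run_ga()
print("best fitness: first generation", history[0], "-> last", history[-1])
```

Elitism guarantees that the best fitness never decreases. A small mutation rate keeps some diversity, which helps against the premature convergence listed as a key challenge on slide 43.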

42. Genetic Algorithms for tactical driving decision making

zipcar.com

GA selects a ‘tactical’ driving decision in terms of change of lane, change of speed, change of acceleration, etc.

Tawfik, H. and Liatsis, P. (2008). An intelligent systems framework for prototyping tactical driving decisions. Intelligent Systems Technologies and Applications.

Tawfik, H. and Liatsis, P. (2006). Modelling tactical driving manoeuvres with GA-INTACT. Computational Science.

43. Genetic Algorithms

Key strengths:

• Near-optimum solutions

• Evolution and adaptability

• Distributed computing

Key challenge:

• Premature convergence to ‘sub-optimal’ solutions

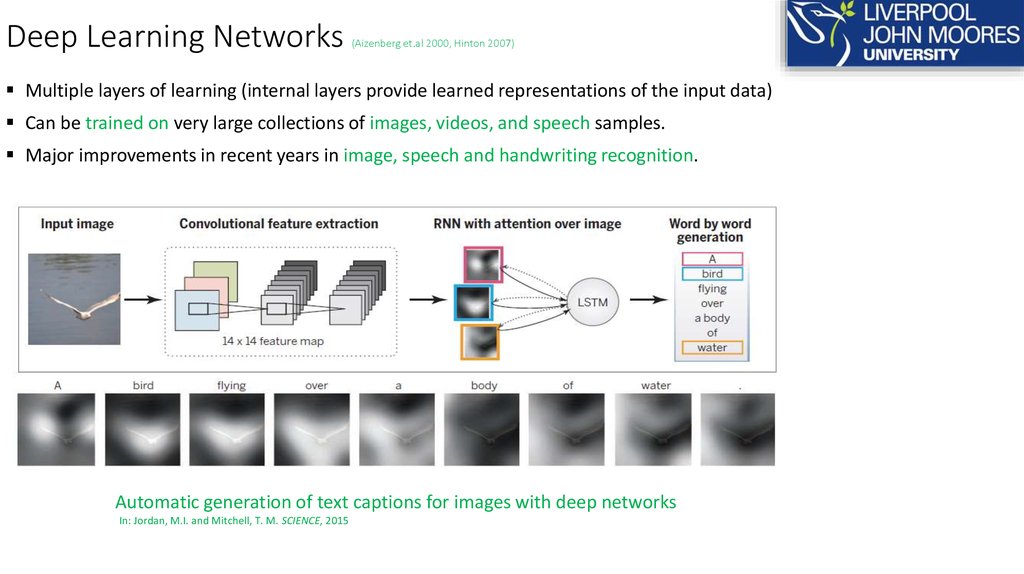

44. Deep Learning Networks (Aizenberg et al. 2000, Hinton 2007)

• Multiple layers of learning (internal layers provide learned representations of the input data)

• Can be trained on very large collections of images, videos and speech samples

• Major improvements in recent years in image, speech and handwriting recognition

Automatic generation of text captions for images with deep networks. In: Jordan, M. I. and Mitchell, T. M., Science, 2015
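Why do extra layers matter? A single threshold neuron cannot compute XOR, because XOR is not linearly separable. One hidden layer whose units build an internal representation of the input solves it. In the sketch below the weights are hand-picked for illustration, not learned: the first hidden unit acts roughly as OR and the second as AND. A real deep network would learn such representations from data.

```python
from typing import List, Sequence

def step(z: float) -> int:
    """Threshold activation."""
    return 1 if z > 0 else 0

def layer(inputs: Sequence[float], weights: List[List[float]],
          biases: List[float]) -> List[int]:
    """One fully connected layer of threshold units."""
    return [step(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hand-picked (not learned) weights: hidden unit 1 ~ OR, hidden unit 2 ~ AND
HIDDEN_W, HIDDEN_B = [[1, 1], [1, 1]], [-0.5, -1.5]
# Output fires for "OR but not AND", which is XOR
OUTPUT_W, OUTPUT_B = [[1, -1]], [-0.5]

def two_layer_xor(x: Sequence[int]) -> int:
    hidden = layer(x, HIDDEN_W, HIDDEN_B)  # internal representation of the input
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, two_layer_xor(x))
```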

45.

Big Data and Data Science

46. What is Big Data?

• Isn’t all data big?

• Big Data: information that can’t be processed or analysed using traditional processes or tools

• Organisations produce vast amounts of data each and every day

• Some of this is unstructured or, at best, semi-structured

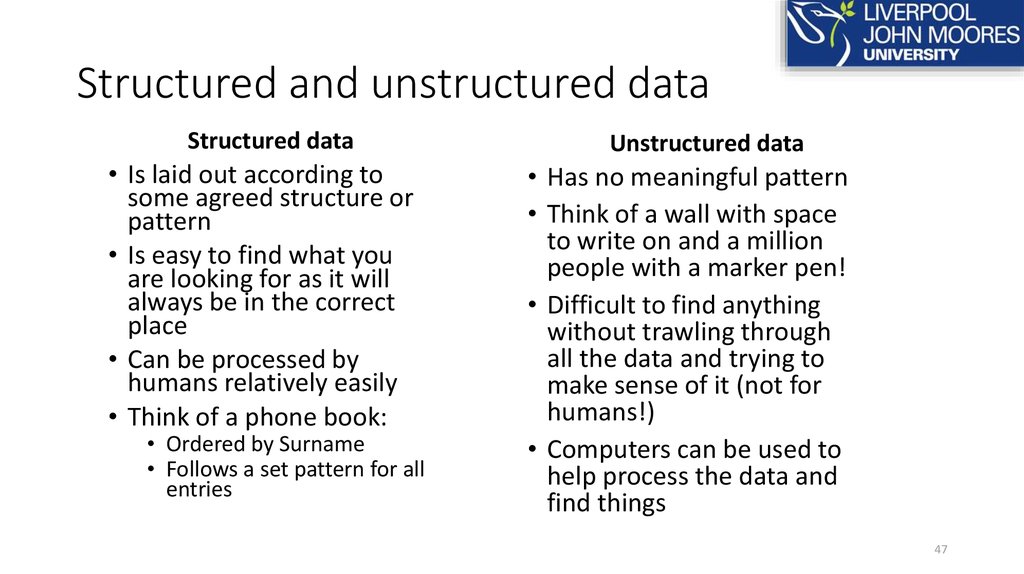

47. Structured and unstructured data

Structured data

• Is laid out according to some agreed structure or pattern

• Makes it easy to find what you are looking for, as it will always be in the correct place

• Can be processed by humans relatively easily

• Think of a phone book:

  • Ordered by surname

  • Follows a set pattern for all entries

Unstructured data

• Has no meaningful pattern

• Think of a wall with space to write on and a million people with a marker pen!

• Difficult to find anything without trawling through all the data and trying to make sense of it (not for humans!)

• Computers can be used to help process the data and find things

48. Characteristics of Big Data

IBM characterises Big Data by the four Vs:

• Variety – the many different forms that data can take

• Velocity – the speed at which the data is produced or needs to be processed

• Volume – the amount of data produced or consumed

• Veracity – the truthfulness (or uncertainty) of data

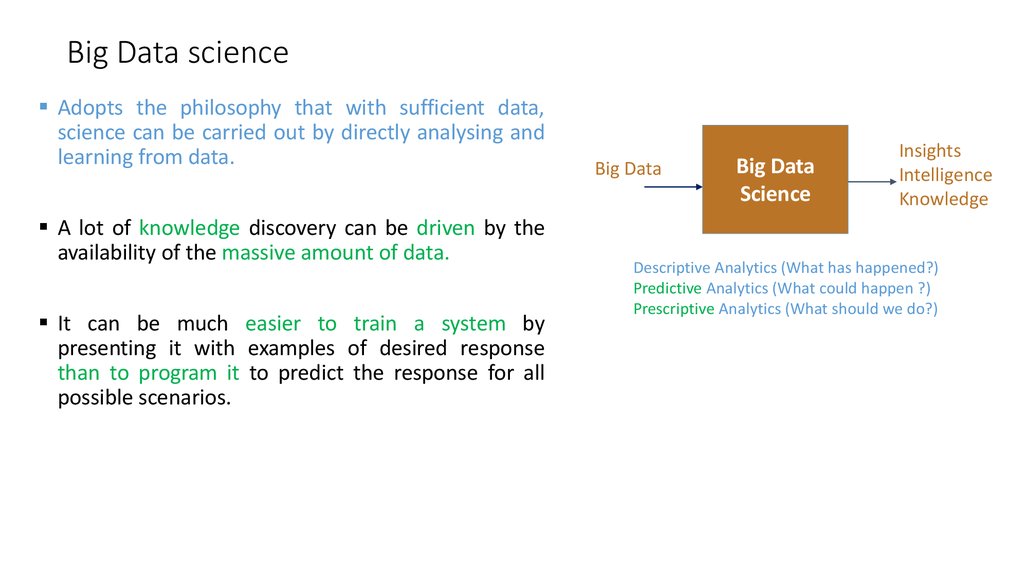

49. Big Data science

Big Data science adopts the philosophy that, with sufficient data, science can be carried out by directly analysing and learning from data.

A lot of knowledge discovery can be driven by the availability of massive amounts of data.

It can be much easier to train a system by presenting it with examples of the desired response than to program it to predict the response for all possible scenarios.

[Figure: Big Data → Big Data Science → insights, intelligence, knowledge]

• Descriptive analytics (What has happened?)

• Predictive analytics (What could happen?)

• Prescriptive analytics (What should we do?)

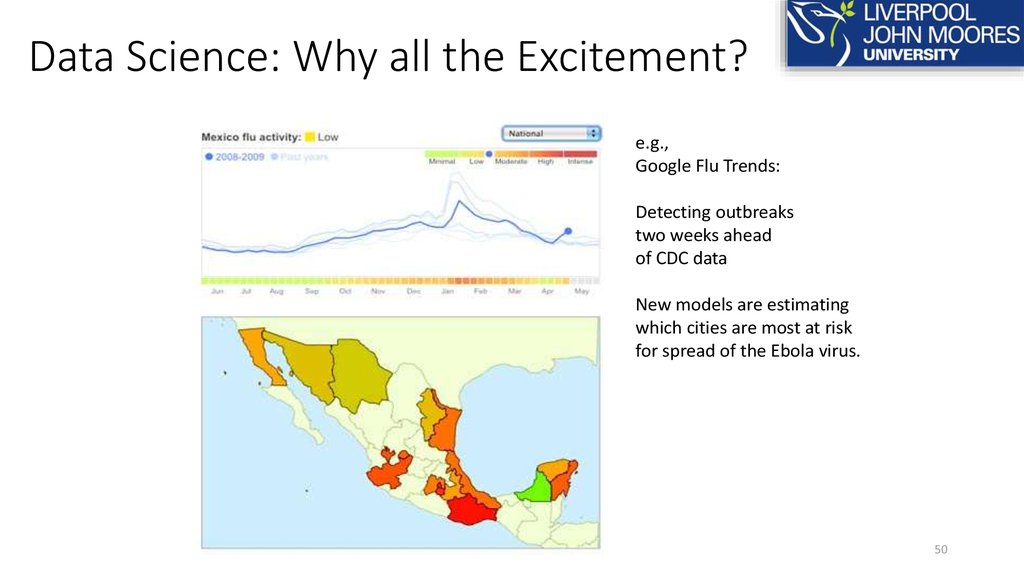
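The difference between the first two kinds of analytics can be shown on a tiny series. In the sketch below, descriptive analytics summarises what has happened (mean, minimum, maximum). Predictive analytics fits a least-squares straight line and extrapolates one step ahead. The weekly counts are hypothetical, and a linear trend is only one of many possible predictive models. Prescriptive analytics, which would weigh possible actions against such forecasts, is not shown.

```python
from statistics import mean
from typing import Dict, Sequence, Tuple

def describe(values: Sequence[float]) -> Dict[str, float]:
    """Descriptive analytics: summarise what has happened."""
    return {"mean": mean(values), "min": min(values), "max": max(values)}

def fit_trend(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Least-squares straight line y = slope * x + intercept."""
    mx, my = mean(xs), mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx

# Hypothetical weekly counts for weeks 1..6
weeks = [1, 2, 3, 4, 5, 6]
counts = [10, 12, 13, 15, 16, 18]
print(describe(counts))                 # {'mean': 14, 'min': 10, 'max': 18}
slope, intercept = fit_trend(weeks, counts)
print(round(slope * 7 + intercept, 2))  # predictive: forecast for week 7 -> 19.4
```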

50. Data Science: Why all the Excitement?

e.g. Google Flu Trends: detecting outbreaks two weeks ahead of CDC data.

New models are estimating which cities are most at risk for spread of the Ebola virus.

51. Data Science – One Definition

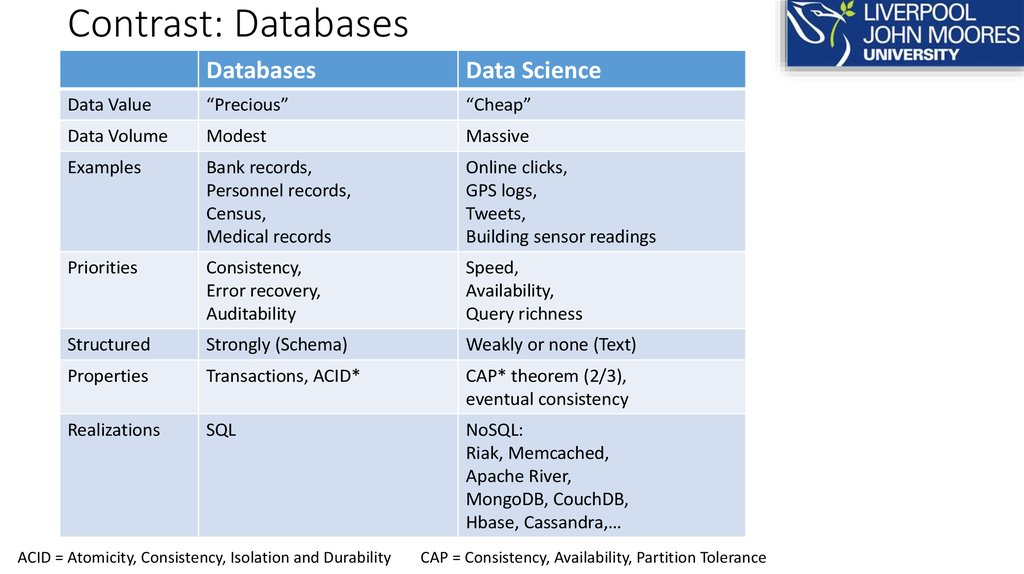

52. Contrast: Databases

| | Databases | Data Science |
|---|---|---|
| Data value | “Precious” | “Cheap” |
| Data volume | Modest | Massive |
| Examples | Bank records, personnel records, census, medical records | Online clicks, GPS logs, tweets, building sensor readings |
| Priorities | Consistency, error recovery, auditability | Speed, availability, query richness |
| Structured | Strongly (schema) | Weakly or none (text) |
| Properties | Transactions, ACID* | CAP* theorem (2/3), eventual consistency |
| Realizations | SQL | NoSQL: Riak, Memcached, Apache River, MongoDB, CouchDB, HBase, Cassandra, … |

ACID = Atomicity, Consistency, Isolation and Durability

CAP = Consistency, Availability, Partition Tolerance
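The "Transactions, ACID" entry for databases can be made concrete with Python's built-in `sqlite3` module, an SQL database. Used as a context manager, a connection commits the transaction when the block succeeds and rolls it back if an exception escapes. A failed transfer therefore leaves no partial update behind, which is the atomicity in ACID. The accounts table and the `transfer` helper are hypothetical.

```python
import sqlite3

def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> None:
    """Move `amount` between two accounts atomically; raise if funds are insufficient."""
    with conn:  # commit on success, roll back if an exception escapes
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE name = ?",
                     (amount, src))
        (balance,) = conn.execute("SELECT balance FROM accounts WHERE name = ?",
                                  (src,)).fetchone()
        if balance < 0:
            raise ValueError("insufficient funds")  # undoes the debit above
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE name = ?",
                     (amount, dst))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER)")
with conn:
    conn.executemany("INSERT INTO accounts VALUES (?, ?)",
                     [("alice", 100), ("bob", 50)])

transfer(conn, "alice", "bob", 30)       # succeeds and is committed
try:
    transfer(conn, "alice", "bob", 500)  # fails and is rolled back as a whole
except ValueError:
    pass

balances = dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))
print(balances)  # {'alice': 70, 'bob': 80}
```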

53. Contrast: Databases

| Databases | Data Science |
|---|---|
| Querying the past | Querying the future |

Business intelligence (BI) is the transformation of raw data into meaningful and useful information for business analysis purposes. BI can handle enormous amounts of unstructured data to help identify, develop and otherwise create new strategic business opportunities. (Wikipedia)

54. Contrast: Scientific Computing

[Figure: image → general-purpose classifier → “Supernova” / “Not” (Nugent group / C3 LBL)]

| Scientific Modeling | Data-Driven Approach |
|---|---|
| Physics-based models | General inference engine replaces model |
| Problem-structured | Structure not related to problem |
| Mostly deterministic, precise | Statistical models handle true randomness and unmodeled complexity |
| Run on supercomputer or high-end computing cluster | Run on cheaper computer clusters (EC2) |

55. Contrast: Computational Science

CASP: a worldwide, biennial protein folding contest

Brain mapping: Allen Institute, White House, Berkeley

Computational science (e.g. Quark): rich, complex energy models; faithful, physical simulation

Data science (e.g. Raptor-X): data-intensive, general ML models; feature-based inference

Techniques (massive ML): Principal Component Analysis, Conditional Neural Fields, Independent Component Analysis, Sparse Coding, Spatial (Image) Filtering

56. Contrast: Machine Learning

| Machine Learning | Data Science |
|---|---|
| Develop new (individual) models | Explore many models, build and tune hybrids |
| Prove mathematical properties of models | Understand empirical properties of models |
| Improve/validate on a few, relatively clean, small datasets | Develop/use tools that can handle massive datasets |
| Publish a paper | Take action! |

57.

Application of Data Science in Healthcare and Medicine

(Bio-inspired Computing for Big Data Science)

58. Bio-inspired Computing for Big Data Science

Big data approaches need to exploit computing paradigms that are powerful, fault tolerant and capable of adapting to the challenging nature of the data.

Scientists and engineers are turning to biologically and nature-inspired computing, and other AI techniques, to obtain useful insights from big data.

59. Medical and Health Real World Applications

• In terms of the research proposed in the previous slides, the role of applied computing becomes apparent in the end product.

• The research involved within our area is predominantly theory-based; the applications of the derived theory can then be used to provide applied computing opportunities.

• Machine Learning (ML) techniques and statistical methods are used to classify and produce predictive outcomes. The resulting algorithms and results can be used within structures such as Decision Support Systems (DSS), which can aid health professionals in making decisions about a patient’s condition and their treatment options, or in outlining the risk factors associated with a patient’s condition given their biological make-up or environment.

60. Machine Learning (ML) and its Applications

WHAT IS ML?

• The concept of machine learning refers to a computer program that is able to learn and gain knowledge from past experiences and/or by identifying the important features of a given dataset, in order to make predictions about other data that were not part of the original training set.

MACHINE LEARNING WITHIN CLINICAL DECISION SUPPORT SYSTEMS (CDSS)

• ML is considered to be the backbone of the majority of sophisticated CDSS.

• It is one of the principal components of the information architecture of CDSS.

• It is an essential part of CDSS and enables such systems to learn over time.

• It can handle more complicated decisions that might require specialist knowledge, as well as evaluating the consequences of the suggested solution.

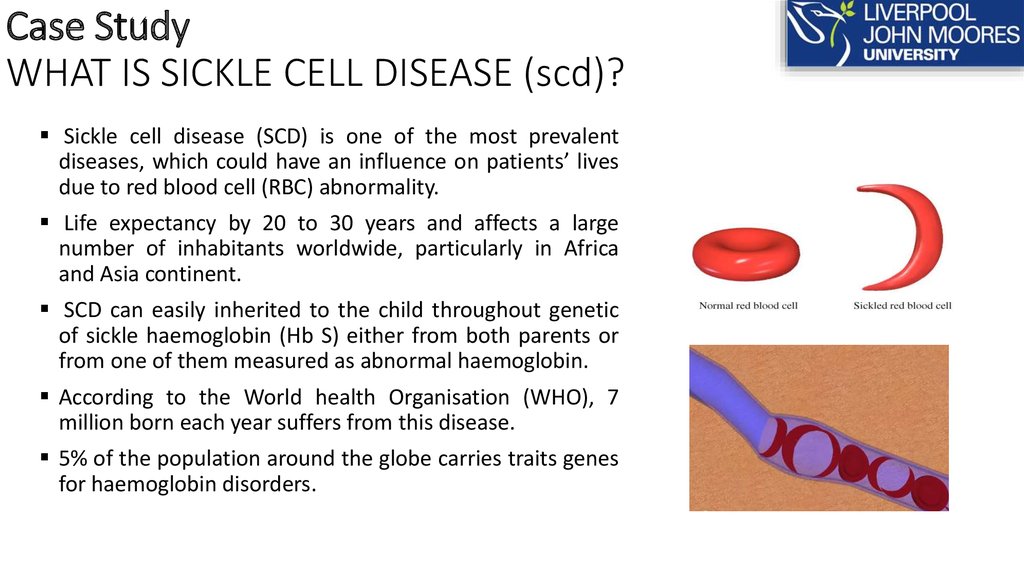
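The definition above describes learning from a training set and then predicting labels for data that were not part of it. One of the simplest classifiers that does this is k-nearest neighbours. It is used here only as an illustrative stand-in, not as the technique used in this research. The two-feature cases and the "low risk"/"high risk" labels are made-up numbers, not clinical data.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Labelled = Tuple[Sequence[float], str]

def knn_predict(training: List[Labelled], query: Sequence[float], k: int = 3) -> str:
    """Label an unseen case by majority vote among its k nearest training cases."""
    nearest = sorted(training, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical, made-up training cases (two numeric measurements each)
TRAINING = [
    ((1.0, 1.2), "low risk"), ((1.5, 1.8), "low risk"), ((2.0, 1.0), "low risk"),
    ((6.0, 6.5), "high risk"), ((7.0, 5.5), "high risk"), ((6.5, 7.0), "high risk"),
]
print(knn_predict(TRAINING, (1.8, 1.5)))  # low risk
print(knn_predict(TRAINING, (6.2, 6.0)))  # high risk
```

A real CDSS would add feature scaling, validation on held-out data and clinical oversight. The sketch only shows the train-then-predict shape of the idea.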

61. Case Study: What Is Sickle Cell Disease (SCD)?

Sickle cell disease (SCD) is one of the most prevalent inherited diseases and can have a serious influence on patients’ lives due to red blood cell (RBC) abnormality.

It reduces life expectancy by 20 to 30 years and affects a large number of people worldwide, particularly in Africa and Asia.

SCD is inherited through the gene for sickle haemoglobin (Hb S), either from both parents or from one parent with Hb S and the other with another abnormal haemoglobin.

According to the World Health Organization (WHO), 7 million people born each year suffer from this disease.

5% of the world’s population carries trait genes for haemoglobin disorders.

62.

TREATMENT CHALLENGES

• Continuous self-care monitoring of chronic diseases and medicine intake are vital for patients to mitigate the severity of the disease by taking the appropriate medicine at the proper time.

• Within this context, there is a significant need to construct a cooperative care environment that improves the quality of care and increases caregivers’ efficiency, with the purpose of providing regular information for medical experts and patients.

• To achieve this, this research focuses on how to develop an intelligent system to provide short-term interpretation of overall goals in Sickle Cell Disease (SCD).

63. The Current Situation in the Healthcare Environment

• Currently, all hospitals and healthcare sectors are using a manual approach that depends completely on patient input, which is slow to analyse, time-consuming and stressful.

• The most challenging aspect facing healthcare these days is that there is still insufficient communication between SCD patients and their associated healthcare providers.

• There is still a need to develop an intelligent SCD diagnosis system that is able to provide a specific treatment plan, inspired by expert systems.

• There are still a number of barriers to obtaining excellent communication in the patient–medical expert relationship, in terms of workload, patients’ fear and anxiety, and fear of verbal or physical abuse.

64. Genetics

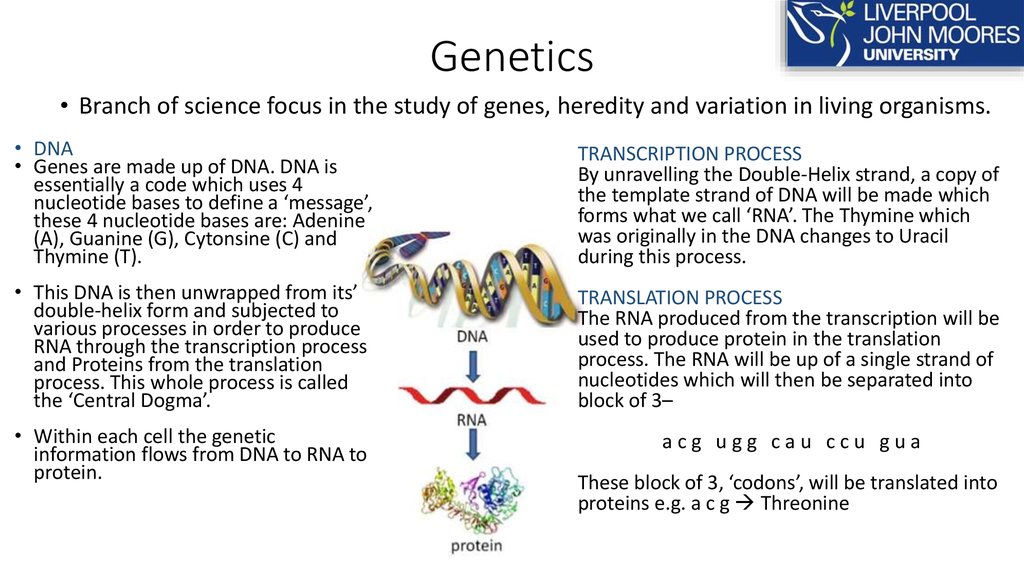

• Genetics is the branch of science focusing on the study of genes, heredity and variation in living organisms.

• DNA: genes are made up of DNA. DNA is essentially a code which uses 4 nucleotide bases to define a ‘message’; these 4 nucleotide bases are Adenine (A), Guanine (G), Cytosine (C) and Thymine (T).

• This DNA is unwound from its double-helix form and subjected to various processes in order to produce RNA through the transcription process and proteins through the translation process. This whole flow of information is called the ‘Central Dogma’.

• Within each cell the genetic information flows from DNA to RNA to protein.

TRANSCRIPTION PROCESS

By unravelling the double helix, a complementary copy of the template strand of DNA is made, which forms what we call ‘RNA’. The Thymine which was originally in the DNA is replaced by Uracil during this process.

TRANSLATION PROCESS

The RNA produced from transcription is used to produce protein in the translation process. The RNA is made up of a single strand of nucleotides, which is read in blocks of 3:

acg ugg cau ccu gua

These blocks of 3, ‘codons’, are translated into the amino acids that make up proteins, e.g. a c g → Threonine.
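Transcription and translation can be followed step by step for the codons on the slide. The sketch takes a shortcut. It starts from the DNA coding strand, whose sequence matches the mRNA apart from T being replaced by U; that same mRNA is complementary to the template strand described above. The codon table is deliberately partial: it covers only the five codons in the example, using the standard genetic code. Marking unknown codons with "?" is a hypothetical choice.

```python
from typing import List

# Partial codon table: only the codons in the slide's example (standard genetic code)
CODON_TABLE = {
    "ACG": "Threonine",
    "UGG": "Tryptophan",
    "CAU": "Histidine",
    "CCU": "Proline",
    "GUA": "Valine",
}

def transcribe(coding_strand: str) -> str:
    """DNA coding strand -> mRNA: same sequence, with thymine (T) replaced by uracil (U)."""
    return coding_strand.upper().replace("T", "U")

def translate(mrna: str) -> List[str]:
    """Split mRNA into codons (blocks of 3) and look each one up."""
    usable = len(mrna) - len(mrna) % 3  # ignore any incomplete trailing codon
    return [CODON_TABLE.get(mrna[i:i + 3], "?") for i in range(0, usable, 3)]

mrna = transcribe("acgtggcatcctgta")
print(mrna)             # ACGUGGCAUCCUGUA
print(translate(mrna))  # ['Threonine', 'Tryptophan', 'Histidine', 'Proline', 'Valine']
```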

65. Genomics

• WHAT IS IT?

• Genomics is the study of a person’s complete DNA sequence, including genes (exons) and “noncoding” (intron) DNA segments in the chromosomes, and how those genes interact with each other as well as with the internal and external environments they are exposed to.

• WHAT HAS BEEN FOUND?

• Overall, it has been estimated that there are only approximately 24,000 genes in the human genome (previously thought to be 100,000 genes!)

• WHAT HAS BEEN DONE IN THE AREA?

• The Human Genome Project is an example of a considerable achievement in the area of genomics. It was an international research effort to determine the sequence of the human genome and identify the genes that it contains.

The aim is to find out at what stage problems start in the genome for each disease, disorder and illness and, if this can be found, whether a treatment or prevention can be produced.

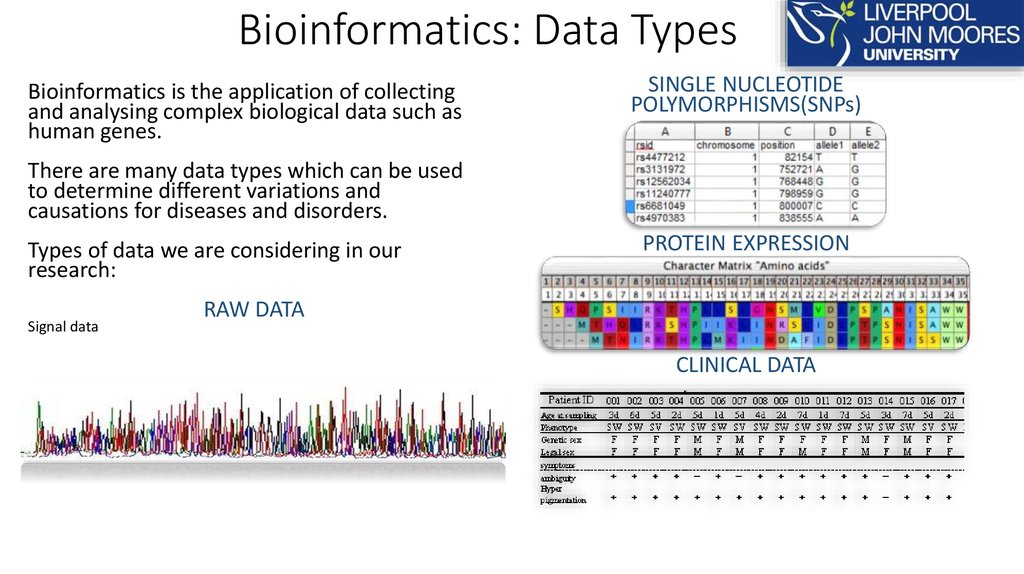

66. Bioinformatics: Data Types

Bioinformatics is the collection and analysis of complex biological data such as human genes.

There are many data types which can be used to determine different variations and causations for diseases and disorders. Types of data we are considering in our research:

• Single Nucleotide Polymorphisms (SNPs)

• Protein expression

• Raw data (signal data)

• Clinical data

[Figure: raw signal data and translated signal data, e.g. acgtaatgctattgctccagt]

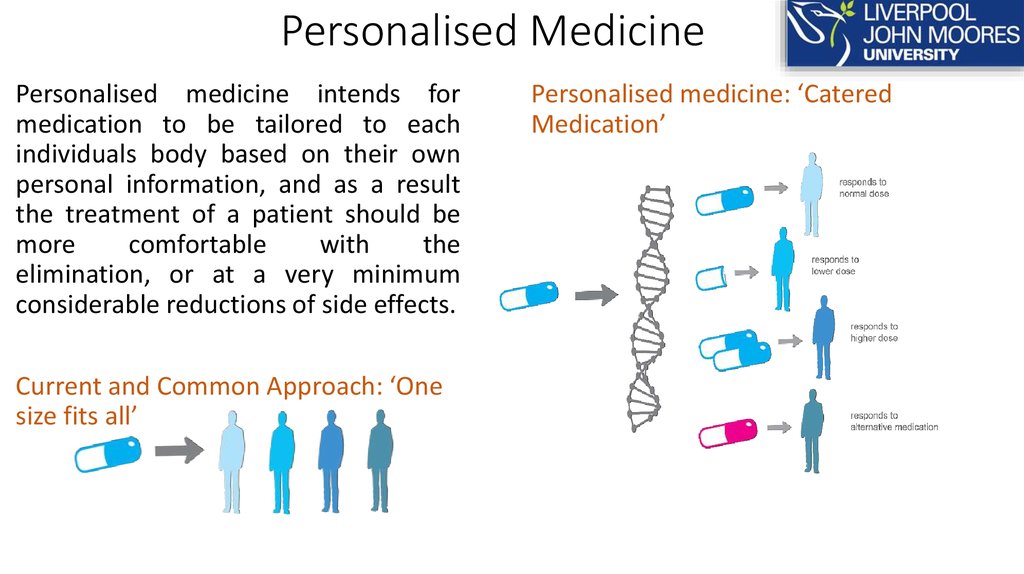
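At its simplest, a single nucleotide polymorphism shows up as one position where a sample differs from a reference sequence. The sketch compares two sequences that are assumed to be already aligned and of equal length. The reference is the sequence shown on the slide; the sample is a hypothetical variant with one substitution. A real pipeline would also need read alignment, quality filtering and population-frequency data before calling a difference a SNP.

```python
from typing import List, Tuple

def find_snps(reference: str, sample: str) -> List[Tuple[int, str, str]]:
    """Return (1-based position, reference base, sample base) wherever aligned sequences differ."""
    if len(reference) != len(sample):
        raise ValueError("this sketch assumes equal-length, pre-aligned sequences")
    return [(i + 1, r, s)
            for i, (r, s) in enumerate(zip(reference.upper(), sample.upper()))
            if r != s]

reference = "acgtaatgctattgctccagt"  # sequence shown on the slide
sample = "acgtaatgctgttgctccagt"     # hypothetical sample with one substitution
print(find_snps(reference, sample))  # [(11, 'A', 'G')]
```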

67. Personalised Medicine

Personalised medicine intends for medication to be tailored to each individual’s body based on their own personal information. As a result, treatment should be more comfortable for the patient, with the elimination, or at the very minimum a considerable reduction, of side effects.

Current and common approach: ‘One size fits all’

Personalised medicine: ‘Catered medication’

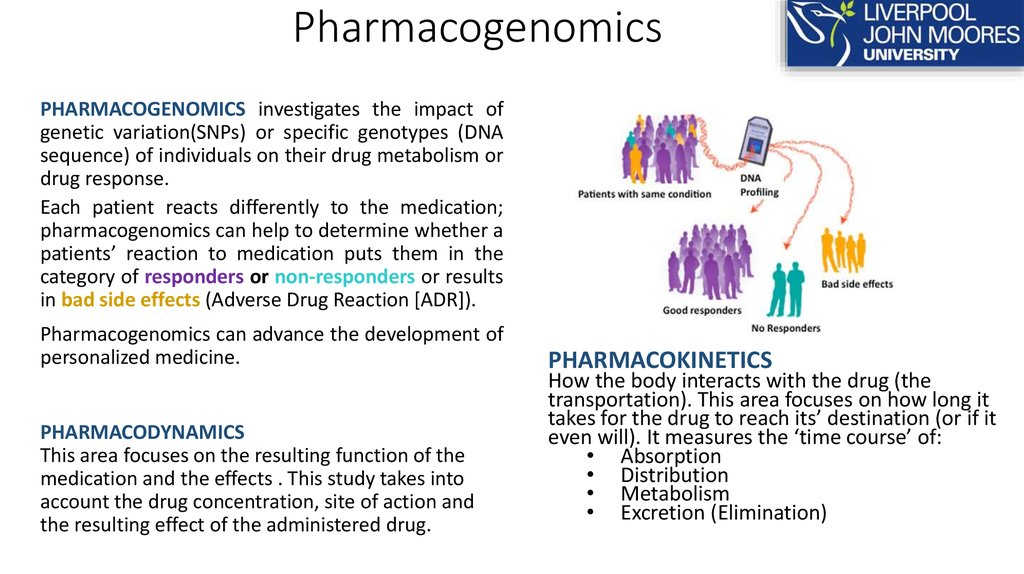

68. Pharmacogenomics

PHARMACOGENOMICS investigates the impact of genetic variation (SNPs) or specific genotypes (DNA sequence) of individuals on their drug metabolism or drug response.

Each patient reacts differently to medication; pharmacogenomics can help to determine whether a patient’s reaction to a medication puts them in the category of responders or non-responders, or results in bad side effects (Adverse Drug Reaction [ADR]).

Pharmacogenomics can advance the development of personalised medicine.

PHARMACODYNAMICS

This area focuses on the resulting function and effects of the medication. It takes into account the drug concentration, the site of action and the resulting effect of the administered drug.

PHARMACOKINETICS

How the body interacts with the drug (the transportation). This area focuses on how long it takes for the drug to reach its destination (or whether it will at all). It measures the ‘time course’ of:

• Absorption

• Distribution

• Metabolism

• Excretion (Elimination)
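A standard textbook way to describe part of this time course is the one-compartment model. It assumes first-order elimination after an intravenous bolus: absorption is treated as instantaneous, and distribution is lumped into a single volume V. In that model, C(t) = (dose / V) · e^(-kt), with k = ln 2 / t½. This sketch uses that swapped-in model, not a method from the slide, and the dose, volume and half-life are hypothetical.

```python
import math

def concentration(dose_mg: float, volume_l: float, half_life_h: float, t_h: float) -> float:
    """Plasma concentration (mg/L) at time t after an IV bolus, in a one-compartment
    model with first-order elimination: C(t) = (dose / V) * exp(-k * t), k = ln 2 / t_half."""
    k = math.log(2) / half_life_h  # elimination rate constant (per hour)
    return (dose_mg / volume_l) * math.exp(-k * t_h)

# Hypothetical drug: 500 mg dose, 50 L volume of distribution, 4 h half-life
for t in (0, 4, 8, 12):
    print(t, "h:", round(concentration(500, 50, 4, t), 3), "mg/L")
```

Each half-life halves the concentration (10, 5, 2.5 and 1.25 mg/L here). Pharmacogenomic differences in metabolism would show up in such a model as a different elimination rate constant.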

69. Conclusion

The ability of biologically inspired computing systems to adapt and learn/evolve, and their tolerance to faults and uncertainties, make them a valuable data science tool in the big data era.

Novel developments/areas of research include:

• More sophisticated bio-inspired systems

• Collaborative and team-based learning

• Crowd computing

• Quantum computing
