
Methods for Resolving (Semantic) Ambiguity

1. Methods for Resolving (Semantic) Ambiguity

An overview of NLP, IR, and data mining methods (methods of information and knowledge extraction)

2. -1. Some Remarks on NLP, IR, and Data Mining Methods

Standard mathematical methods and models + linguistic know-how

Mathematical foundations:

Standard probability estimates + conditional probability

Maximum likelihood estimation + the naive Bayes model

Standard hypothesis tests (chi-squared, t-statistic, etc.)

Feature space + quantitative estimation of weights + a metric

Clustering and classification

Self-learning algorithms (pattern recognition methods):

Language models (hidden Markov models)

Entropy-based models

Classifiers (maximum entropy, naive Bayes classification, hidden Markov models, decision trees, bootstrapping, neural networks, genetic algorithms, etc.)

3. Preliminary Problem Statement

Problem 1: homonymy and polysemy in automatic text processing

Problem 2: grouping the vocabulary and automatically building the required lexicographic resources, for example thesauri.

Semantic ambiguity (technical term: semantic polysemy):

Bank vs. bank (see the previous lecture)

Он нашел возможность ("He found an opportunity") vs. Он нашел квартиру ("He found an apartment")

Язык: естественный язык ("natural language") vs. говяжий язык ("beef tongue")

4.

Used in:

(a) semantic annotation of corpora

(b) applied information retrieval tasks: simple search by user query, automatic text classification

(c) machine translation systems

(d) question answering systems

(e) knowledge extraction from texts (ambiguity of named entities, NER), etc.

WSD: word sense disambiguation

5.

Questions that must be answered in order to solve the task

What can we rely on to distinguish the different senses of a lexeme in a text?

6. Linguistic Foundations

Senses: dictionaries, thesauri, WordNet

Sources:

(a) a sense in a dictionary

(b) a thesaurus class

(c) a synonym set in a dictionary of synonyms

(d) a synset in WordNet

(e) Wikipedia

Class of context words ((a)–(d) are sources for identifying the class of context words)

Task: for each of the "senses", determine its "equivalence class"

7. Self-Learning Algorithms

Task: find a set of context features that identify a given word sense as precisely as possible (so that sense X is maximally probable in context C (c1 … cm))

The features can be assigned in a lexicographic source

They can be extracted during learning:

(a) from an annotated corpus (supervised learning)

(b) from an unannotated corpus (unsupervised learning)

Classifier

Clustering of contexts

Feature selection (anything that is available)

Context words

Context POS tags

Position of the context word

8. Learning Tasks

Given: a "bag" of features

Output: a model that is as "close" to reality as possible (model parameters that maximize the probability that the model generates what we observe): parameter weights, classification rules, etc.

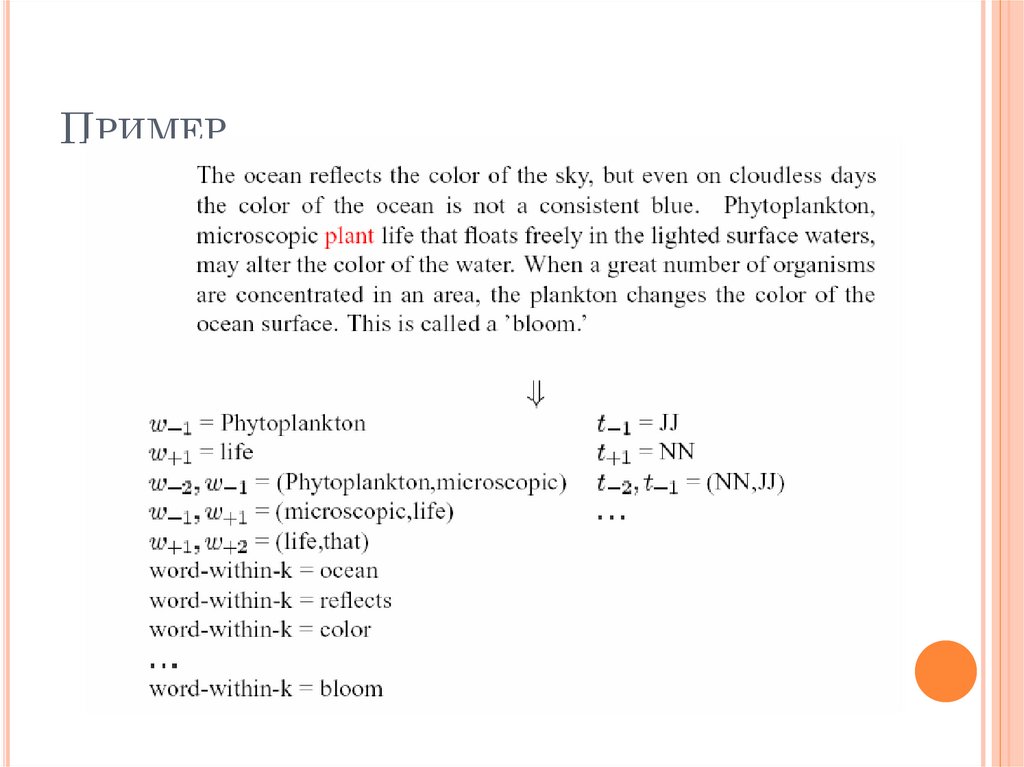

9. Example

10. 1. Knowledge-Based Methods. 1.1. Context Overlap Methods

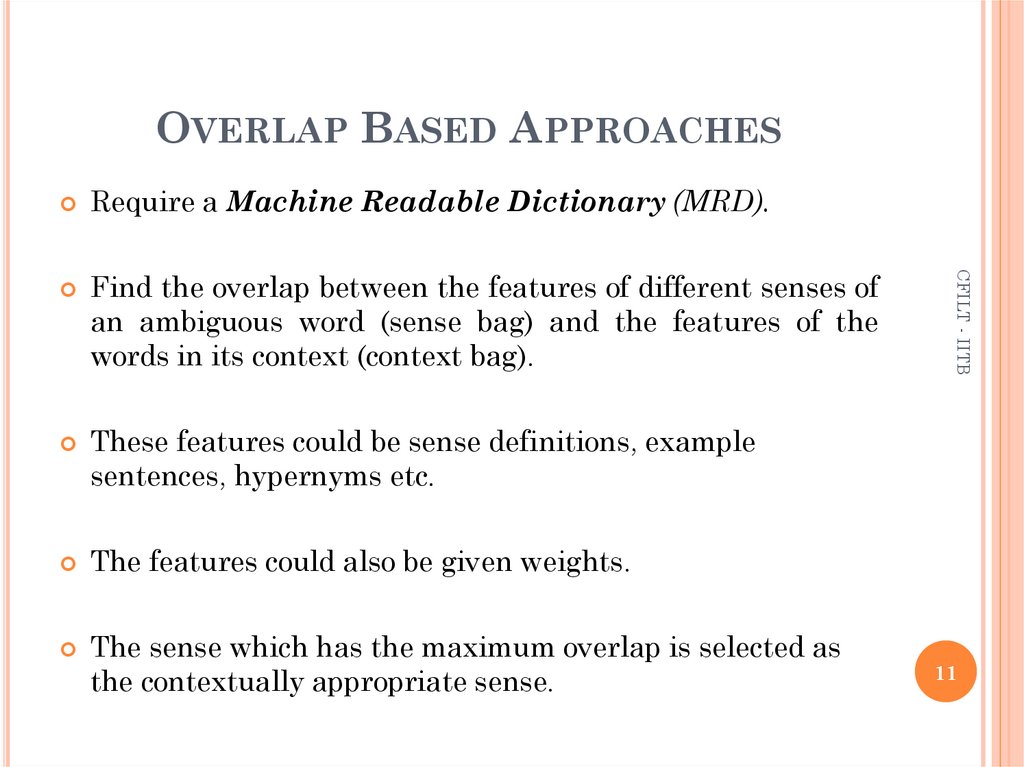

11. OVERLAP BASED APPROACHES

Require a Machine Readable Dictionary (MRD).

Find the overlap between the features of different senses of an ambiguous word (sense bag) and the features of the words in its context (context bag).

These features could be sense definitions, example sentences, hypernyms, etc.

The features could also be given weights.

The sense which has the maximum overlap is selected as the contextually appropriate sense.

CFILT - IITB

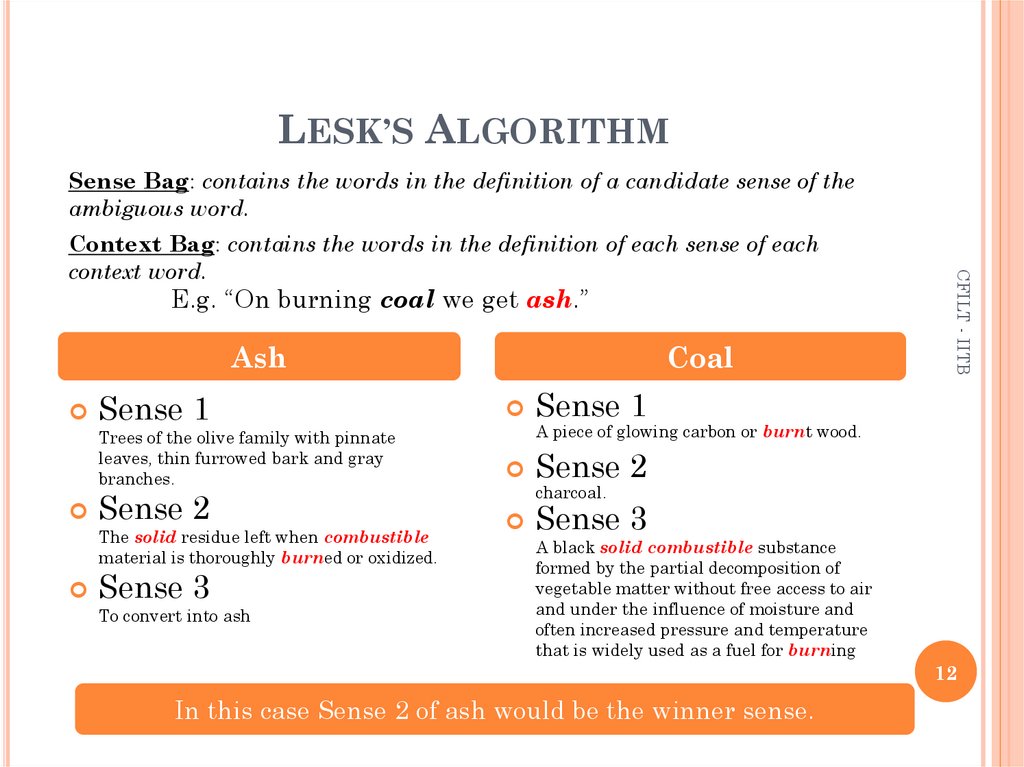

12. LESK’S ALGORITHM

Sense Bag: contains the words in the definition of a candidate sense of the ambiguous word.

Context Bag: contains the words in the definition of each sense of each context word.

E.g. “On burning coal we get ash.”

Ash

Sense 1: Trees of the olive family with pinnate leaves, thin furrowed bark and gray branches.

Sense 2: The solid residue left when combustible material is thoroughly burned or oxidized.

Sense 3: To convert into ash.

Coal

Sense 1: A piece of glowing carbon or burnt wood.

Sense 2: Charcoal.

Sense 3: A black solid combustible substance formed by the partial decomposition of vegetable matter without free access to air and under the influence of moisture and often increased pressure and temperature that is widely used as a fuel for burning.

In this case Sense 2 of ash would be the winner sense.
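The overlap count for the ash/coal example can be sketched in a few lines of Python. This is a minimal, simplified Lesk, not a reference implementation: the stopword list, the tokenizer and the function names `bag` and `lesk` are assumptions made for illustration, and words are matched by exact form only (so "burned" and "burning" do not match).

```python
import re

# Small illustrative stopword list (an assumption, not part of the slide).
STOPWORDS = {"a", "and", "as", "by", "for", "into", "is", "of", "or",
             "that", "the", "to", "when", "with"}

ASH_SENSES = {
    1: "Trees of the olive family with pinnate leaves, thin furrowed bark "
       "and gray branches.",
    2: "The solid residue left when combustible material is thoroughly "
       "burned or oxidized.",
    3: "To convert into ash.",
}
COAL_SENSES = [
    "A piece of glowing carbon or burnt wood.",
    "Charcoal.",
    "A black solid combustible substance formed by the partial decomposition "
    "of vegetable matter without free access to air and under the influence "
    "of moisture and often increased pressure and temperature that is widely "
    "used as a fuel for burning.",
]

def bag(text):
    """Lowercased content words of a definition."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}

def lesk(target_senses, context_definitions):
    """Return the sense whose definition overlaps most with the context bag."""
    context_bag = set().union(*(bag(d) for d in context_definitions))
    scores = {sense: len(bag(d) & context_bag)
              for sense, d in target_senses.items()}
    return max(scores, key=scores.get), scores

best_sense, overlap = lesk(ASH_SENSES, COAL_SENSES)
print(best_sense, overlap)
```

With these definitions only Sense 2 shares content words with the coal definitions ("solid" and "combustible"), which agrees with the winner named on the slide.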

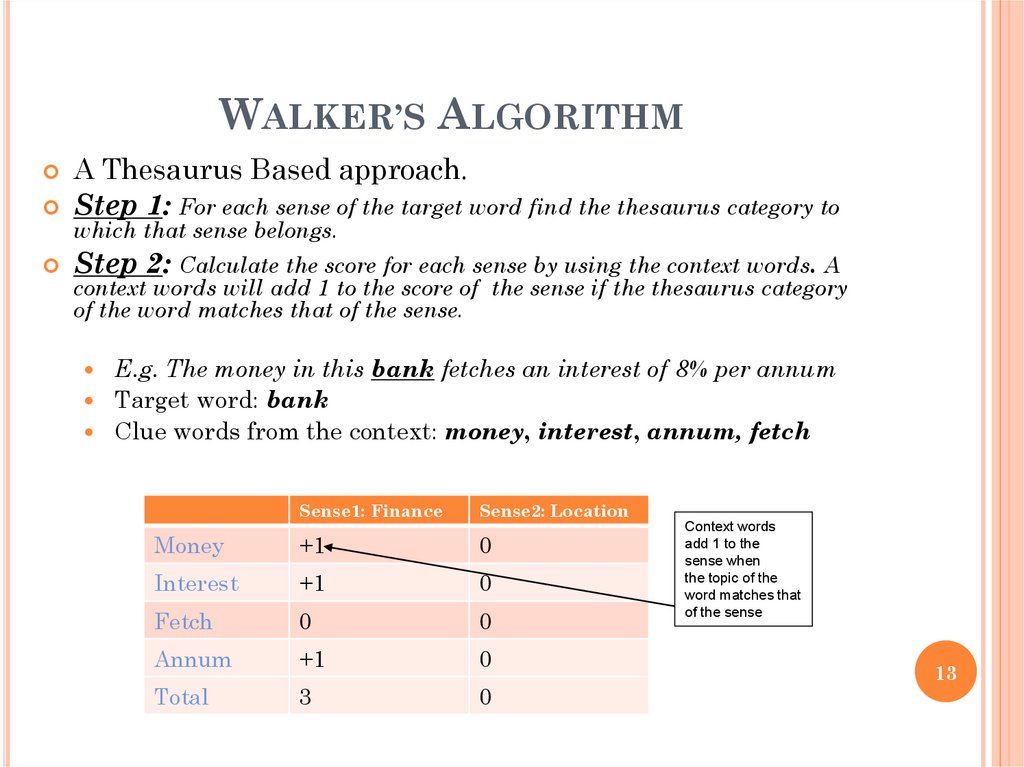

13. WALKER’S ALGORITHM

A thesaurus-based approach.

Step 1: For each sense of the target word, find the thesaurus category to which that sense belongs.

Step 2: Calculate the score for each sense by using the context words. A context word adds 1 to the score of a sense if the thesaurus category of the word matches that of the sense.

E.g. The money in this bank fetches an interest of 8% per annum.

Target word: bank

Clue words from the context: money, interest, annum, fetch

| Context word | Sense 1: Finance | Sense 2: Location |
|---|---|---|
| Money | +1 | 0 |
| Interest | +1 | 0 |
| Fetch | 0 | 0 |
| Annum | +1 | 0 |
| Total | 3 | 0 |
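The scoring in Step 2 can be sketched as follows. The thesaurus lookup is a hypothetical hand-written dictionary that mirrors the table for the "bank" example (the category "Motion" for "fetch" is invented so that it matches neither sense); a real system would query an actual thesaurus.

```python
# Hypothetical thesaurus: word -> category (mirrors the "bank" example).
THESAURUS = {
    "money": "Finance",
    "interest": "Finance",
    "annum": "Finance",
    "fetch": "Motion",  # assumed category; matches neither sense of "bank"
}
SENSE_CATEGORIES = {"Sense 1": "Finance", "Sense 2": "Location"}

def walker_scores(sense_categories, context_words, thesaurus):
    """Each context word adds 1 to every sense whose category it shares."""
    scores = {sense: 0 for sense in sense_categories}
    for word in context_words:
        category = thesaurus.get(word.lower())
        for sense, sense_category in sense_categories.items():
            if category == sense_category:
                scores[sense] += 1
    return scores

scores = walker_scores(SENSE_CATEGORIES,
                       ["money", "interest", "annum", "fetch"], THESAURUS)
winner = max(scores, key=scores.get)
print(scores, winner)
```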

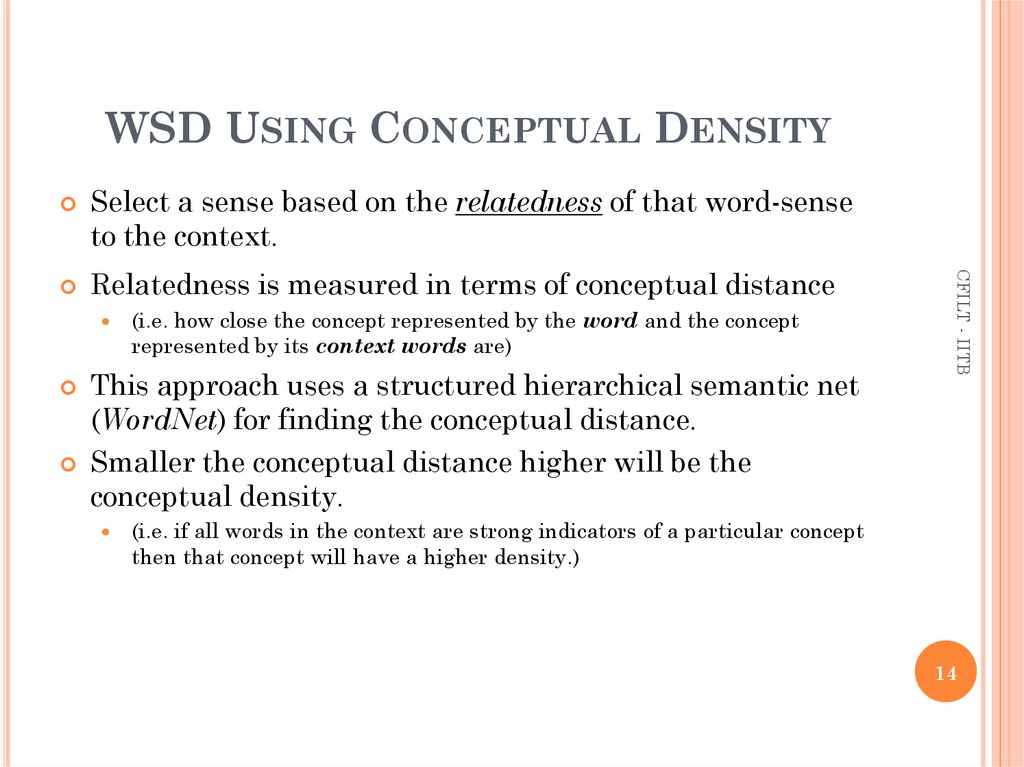

14. WSD USING CONCEPTUAL DENSITY

Select a sense based on the relatedness of that word sense to the context.

Relatedness is measured in terms of conceptual distance (i.e. how close the concept represented by the word and the concept represented by its context words are).

This approach uses a structured hierarchical semantic net (WordNet) for finding the conceptual distance.

The smaller the conceptual distance, the higher the conceptual density (i.e. if all words in the context are strong indicators of a particular concept, then that concept will have a higher density).

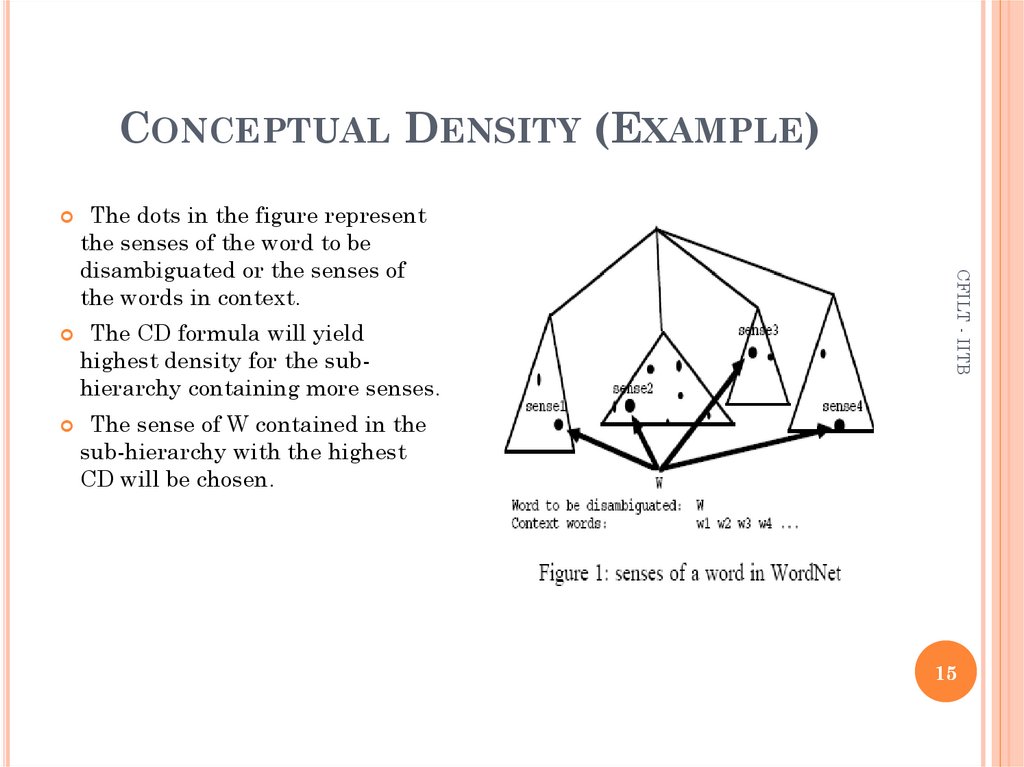

15. CONCEPTUAL DENSITY (EXAMPLE)

The dots in the figure represent the senses of the word to be disambiguated or the senses of the words in context.

The CD formula will yield the highest density for the sub-hierarchy containing more senses.

The sense of W contained in the sub-hierarchy with the highest CD will be chosen.

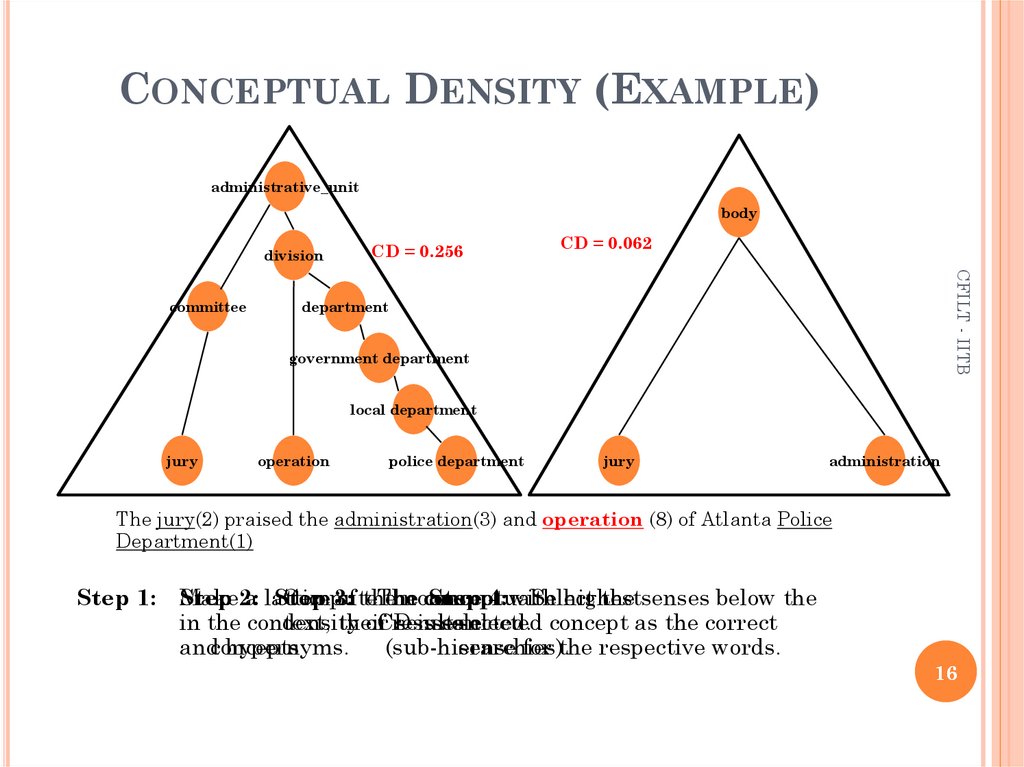

16. CONCEPTUAL DENSITY (EXAMPLE)

The jury(2) praised the administration(3) and operation(8) of Atlanta Police Department(1)

Figure: WordNet sub-hierarchies for the nouns in the sentence, with nodes administrative_unit, body, division, committee, department, government department, local department, police department, jury, administration and operation. Two sub-hierarchies are annotated with CD = 0.256 and CD = 0.062.

Step 1: Make a lattice of the nouns in the context, their senses and hypernyms.

Step 2: Compute the conceptual density of the resultant concepts (sub-hierarchies).

Step 3: The concept with the highest CD is selected.

Step 4: Select the senses below the selected concept as the correct sense for the respective words.

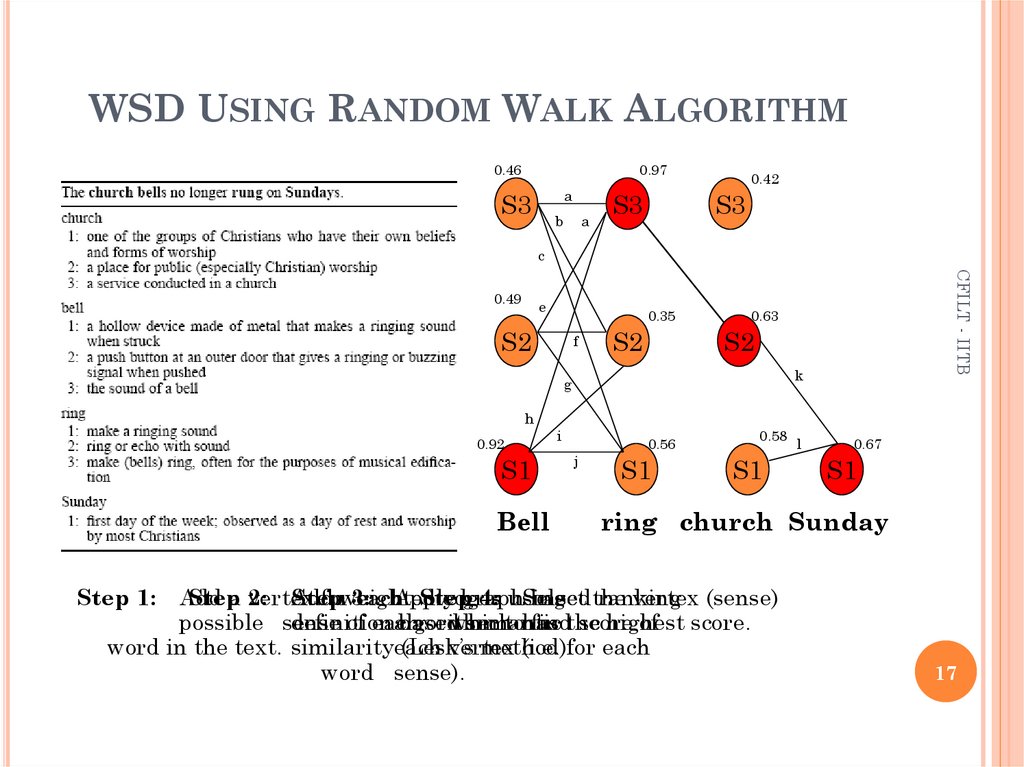

17. WSD USING RANDOM WALK ALGORITHM

Figure: sense graph for the text “Bell ring church Sunday”. Each word has candidate sense vertices (S1, S2, S3); the numeric values shown in the figure are 0.46, 0.97, 0.42, 0.35, 0.49, 0.63, 0.92, 0.56, 0.58 and 0.67.

Step 1: Add a vertex for each possible sense of each word in the text.

Step 2: Add weighted edges using definition based semantic similarity (Lesk’s method).

Step 3: Apply a graph based ranking algorithm to find the score of each vertex (i.e. for each word sense).

Step 4: Select the vertex (sense) which has the highest score.
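The four steps can be sketched with a weighted PageRank-style iteration. The slide does not say which ranking algorithm is used, so the update rule, the damping factor 0.85 and the toy graph below are assumptions made for illustration: the first senses of "bell", "ring" and "church" are linked by invented similarity weights, while the second senses have no edges at all.

```python
def rank_senses(vertices, edges, damping=0.85, iterations=50):
    """Weighted PageRank-style scores on an undirected sense graph."""
    neighbours = {v: {} for v in vertices}
    for (u, v), weight in edges.items():
        neighbours[u][v] = weight
        neighbours[v][u] = weight
    # Total edge weight attached to each vertex.
    strength = {v: sum(nb.values()) for v, nb in neighbours.items()}
    scores = {v: 1.0 for v in vertices}
    for _ in range(iterations):
        scores = {
            v: (1 - damping) + damping * sum(
                w / strength[u] * scores[u]
                for u, w in neighbours[v].items())
            for v in vertices
        }
    return scores

def pick_senses(scores):
    """For every word keep the sense vertex with the highest score."""
    best = {}
    for (word, sense), score in scores.items():
        if word not in best or score > scores[(word, best[word])]:
            best[word] = sense
    return best

# Hypothetical graph: vertices are (word, sense number) pairs.
VERTICES = [("bell", 1), ("bell", 2), ("ring", 1), ("ring", 2),
            ("church", 1), ("church", 2)]
EDGES = {
    (("bell", 1), ("ring", 1)): 0.9,
    (("bell", 1), ("church", 1)): 0.8,
    (("ring", 1), ("church", 1)): 0.7,
}

scores = rank_senses(VERTICES, EDGES)
chosen = pick_senses(scores)
print(chosen)
```

A vertex with no edges never receives score from a neighbour and stays at the base value `1 - damping`, so in this toy graph the connected first senses are selected for all three words.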

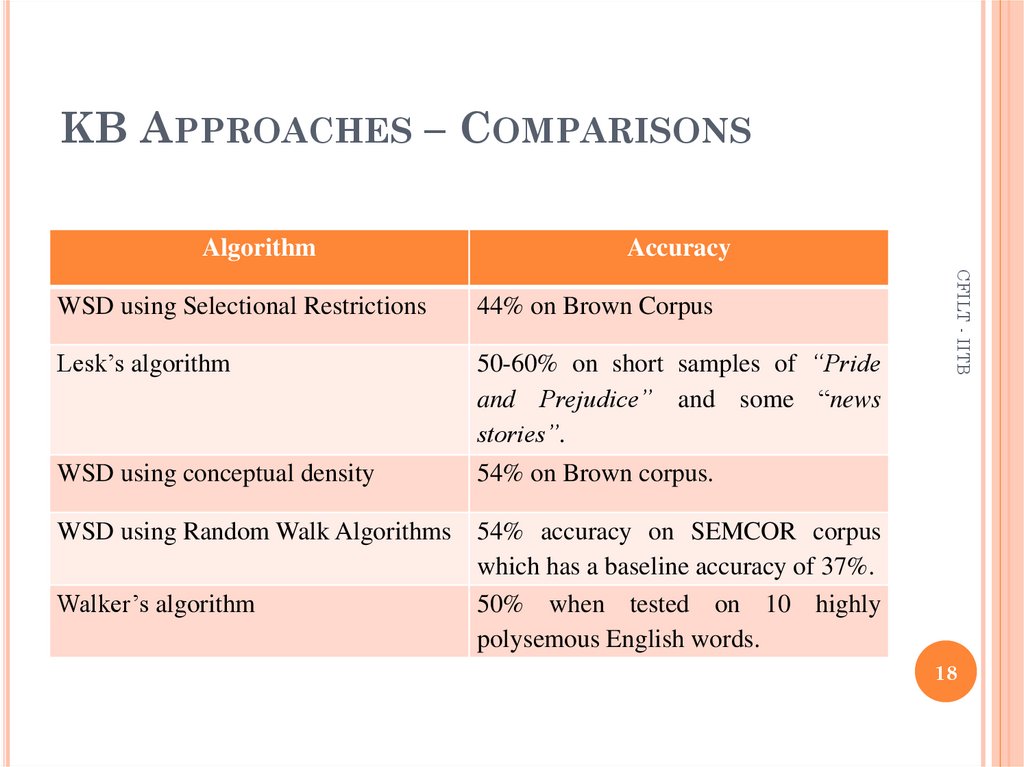

18. KB Approaches – Comparisons

| Algorithm | Accuracy |
|---|---|
| WSD using Selectional Restrictions | 44% on Brown Corpus |
| Lesk’s algorithm | 50-60% on short samples of “Pride and Prejudice” and some “news stories” |
| WSD using conceptual density | 54% on Brown corpus |
| WSD using Random Walk Algorithms | 54% accuracy on SEMCOR corpus, which has a baseline accuracy of 37% |
| Walker’s algorithm | 50% when tested on 10 highly polysemous English words |

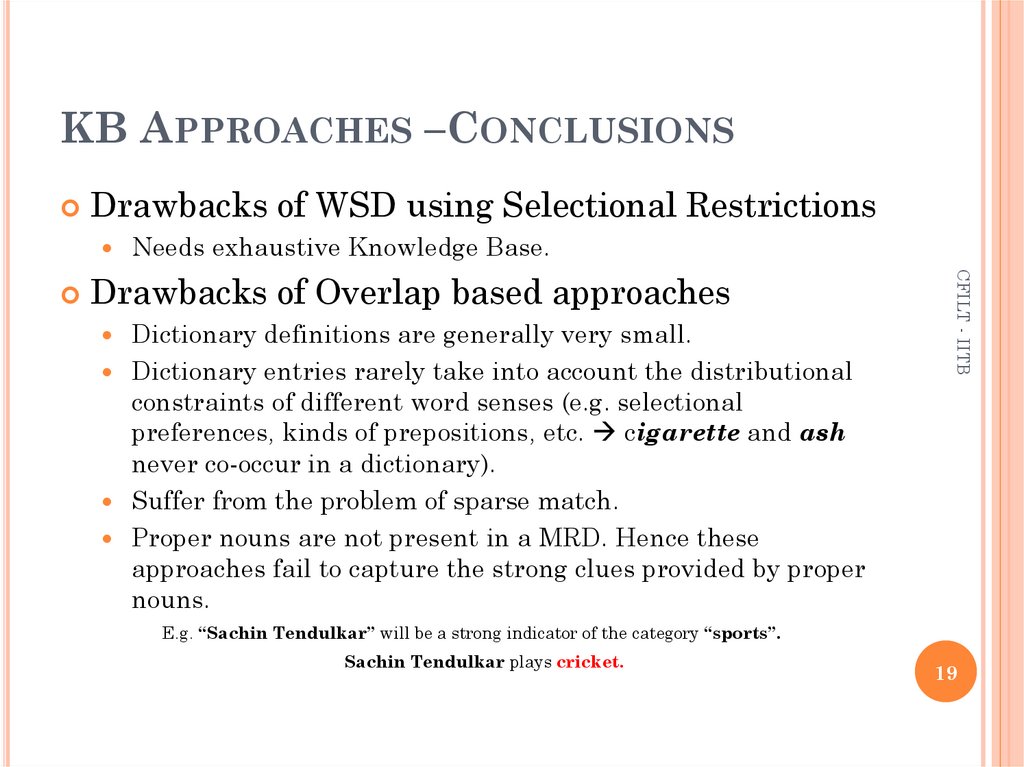

19. KB Approaches – Conclusions

Drawbacks of WSD using Selectional Restrictions

Needs exhaustive Knowledge Base.

Drawbacks of Overlap based approaches

Dictionary definitions are generally very small.

Dictionary entries rarely take into account the distributional constraints of different word senses (e.g. selectional preferences, kinds of prepositions, etc.; cigarette and ash never co-occur in a dictionary).

Suffer from the problem of sparse match.

Proper nouns are not present in a MRD. Hence these approaches fail to capture the strong clues provided by proper nouns.

E.g. “Sachin Tendulkar” will be a strong indicator of the category “sports”.

Sachin Tendulkar plays cricket.

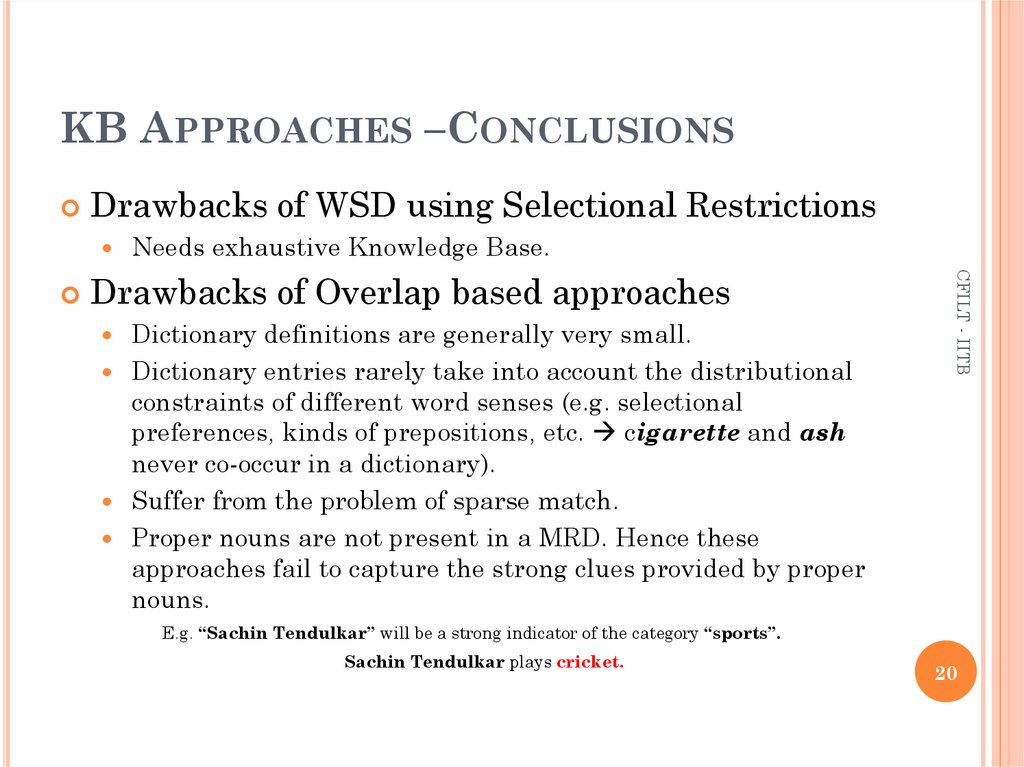


21. Machine Learning Methods

Automatic classification and clustering

22. Supervised Machine Learning Methods

The task of resolving semantic ambiguity reduces to a classification task:

Given: wj ~ {si}, the set of senses for the word wj

{fi}, the set of features (for this task: the set of context words, the set of morphological tags, etc.)

Task: assign a word occurrence observed in a particular context to one of the classes (si), based on the values of the features {fi} for that context

We need to build a model that predicts si as accurately as possible from the combination of feature values

23. Supervised Learning Methods: A Trainable Classifier

Training corpus: a corpus of texts manually annotated with si

Feature set: context words and POS tags, with weights

Find feature values whose combination is "optimal" for a given word sense (for example, so that the probability of assigning the given sense to the word form in the given context is maximal, or the entropy is maximal)

$\hat{s} = \arg\max_{s \in \text{senses}} \Pr(s \mid V_w)$

Training: extracting from the corpus information about the co-occurrence of sense si and feature vw

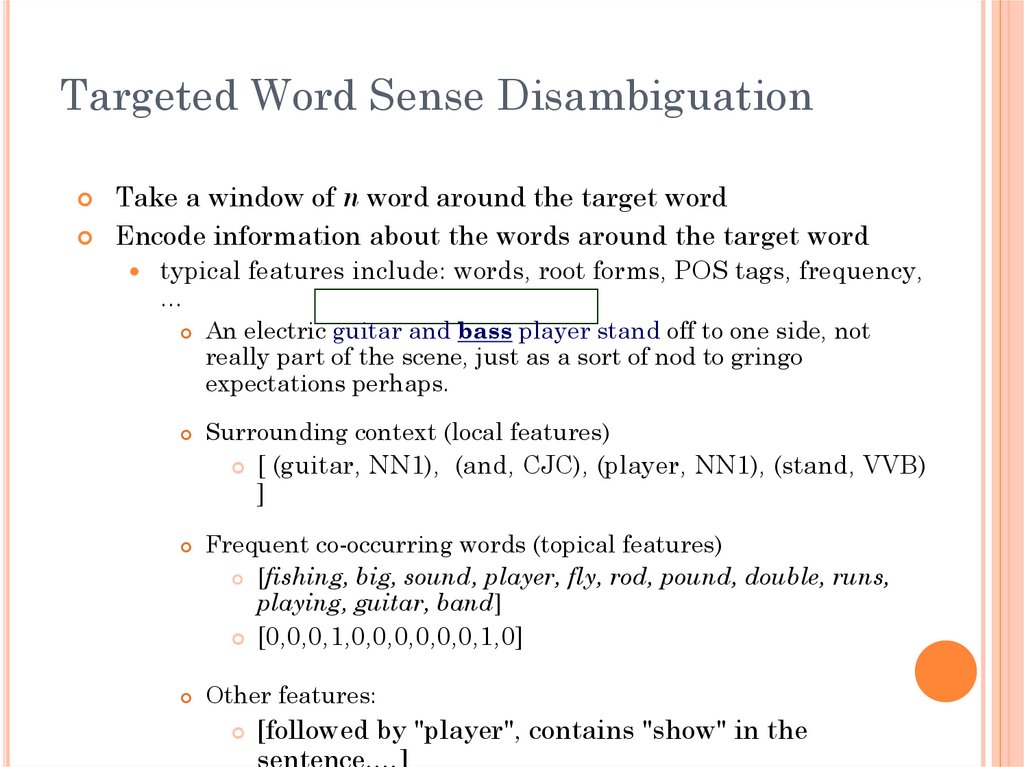

24. Targeted Word Sense Disambiguation

Disambiguate one target word

“Take a seat on this chair”

“The chair of the Math Department”

WSD is viewed as a typical classification problem: use machine learning techniques to train a system

Training:

Corpus of occurrences of the target word, each occurrence annotated with the appropriate sense

Build feature vectors: a vector of relevant linguistic features that represents the context (ex: a window of words around the target word)

Disambiguation:

Disambiguate the target word in new unseen text

25. Targeted Word Sense Disambiguation

Take a window of n words around the target word

Encode information about the words around the target word

Typical features include: words, root forms, POS tags, frequency, …

An electric guitar and bass player stand off to one side, not really part of the scene, just as a sort of nod to gringo expectations perhaps.

Surrounding context (local features)

[ (guitar, NN1), (and, CJC), (player, NN1), (stand, VVB) ]

Frequent co-occurring words (topical features)

[fishing, big, sound, player, fly, rod, pound, double, runs, playing, guitar, band]

[0,0,0,1,0,0,0,0,0,0,1,0]

Other features:

[followed by "player", contains "show" in the sentence, …]
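The local and topical features for the "bass" example can be reproduced with a short sketch. The tokenizer and the helper names `local_window` and `topical_vector` are assumptions; POS tags are left out because they would require a tagger, which the slide does not specify.

```python
import re

SENTENCE = ("An electric guitar and bass player stand off to one side, not "
            "really part of the scene, just as a sort of nod to gringo "
            "expectations perhaps.")
TOPICAL_WORDS = ["fishing", "big", "sound", "player", "fly", "rod", "pound",
                 "double", "runs", "playing", "guitar", "band"]

def tokenize(text):
    """Lowercase the text and keep alphabetic tokens only."""
    return re.findall(r"[a-z]+", text.lower())

def local_window(tokens, target, n=2):
    """The n words before and the n words after the first occurrence of target."""
    i = tokens.index(target)
    return tokens[max(0, i - n):i] + tokens[i + 1:i + 1 + n]

def topical_vector(tokens, topical_words):
    """Binary vector: 1 if the topical word occurs anywhere in the sentence."""
    present = set(tokens)
    return [1 if word in present else 0 for word in topical_words]

tokens = tokenize(SENTENCE)
window = local_window(tokens, "bass")
vector = topical_vector(tokens, TOPICAL_WORDS)
print(window)
print(vector)
```

The window holds the same four words that appear in the local feature list, and the binary vector has ones exactly at the positions of "player" and "guitar".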

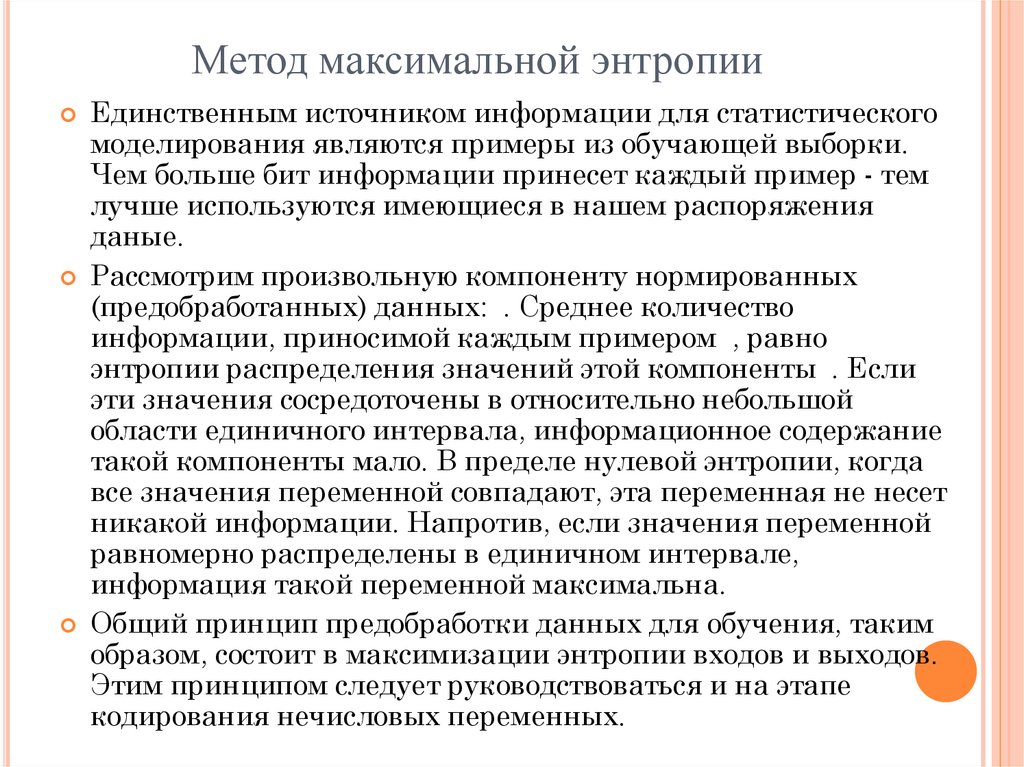

26. The Maximum Entropy Method

The only source of information for statistical modeling is the examples in the training set. The more bits of information each example carries, the better we use the data at our disposal.

Consider an arbitrary component of the normalized (preprocessed) data. The average amount of information carried by each example equals the entropy of the distribution of this component's values. If these values are concentrated in a relatively small region of the unit interval, the information content of the component is low. In the limit of zero entropy, when all values of the variable coincide, the variable carries no information at all. Conversely, if the values of the variable are uniformly distributed over the unit interval, the information carried by the variable is maximal.

The general principle of data preprocessing for learning is therefore to maximize the entropy of the inputs and outputs. This principle should also guide the encoding of non-numeric variables.
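The two limiting cases can be checked numerically. The sketch below estimates the Shannon entropy (in bits) of one data component from a histogram over the unit interval; the choice of four bins and the function name `entropy_bits` are assumptions made for illustration.

```python
import math
from collections import Counter

def entropy_bits(values, bins=4):
    """Shannon entropy of values in [0, 1], estimated from a histogram."""
    counts = Counter(min(int(v * bins), bins - 1) for v in values)
    total = len(values)
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())

constant = [0.5] * 8                  # all values coincide
spread = [0.1, 0.3, 0.6, 0.9] * 2     # one value in every bin, equally often

print(entropy_bits(constant))  # no information
print(entropy_bits(spread))    # maximal for 4 bins: log2(4) bits
```

A component whose values all coincide has zero entropy, while values spread evenly over the four bins reach the maximum of log2(4) = 2 bits.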

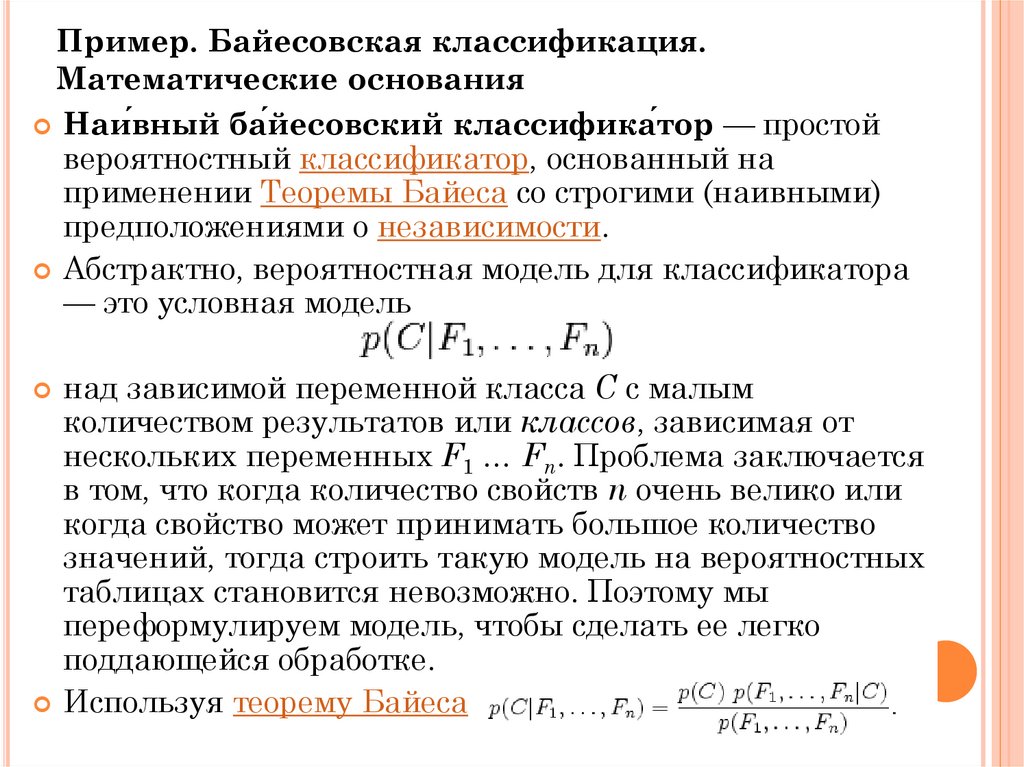

27. Example. Bayesian Classification. Mathematical Foundations

A naive Bayes classifier is a simple probabilistic classifier based on applying Bayes' theorem with strong (naive) independence assumptions.

Abstractly, the probability model for a classifier is a conditional model $p(C \mid F_1, \dots, F_n)$ over a dependent class variable C with a small number of outcomes or classes, conditional on several feature variables F1 … Fn. The problem is that when the number of features n is very large, or when a feature can take a large number of values, building such a model from probability tables becomes infeasible. We therefore reformulate the model to make it tractable.

Using Bayes' theorem

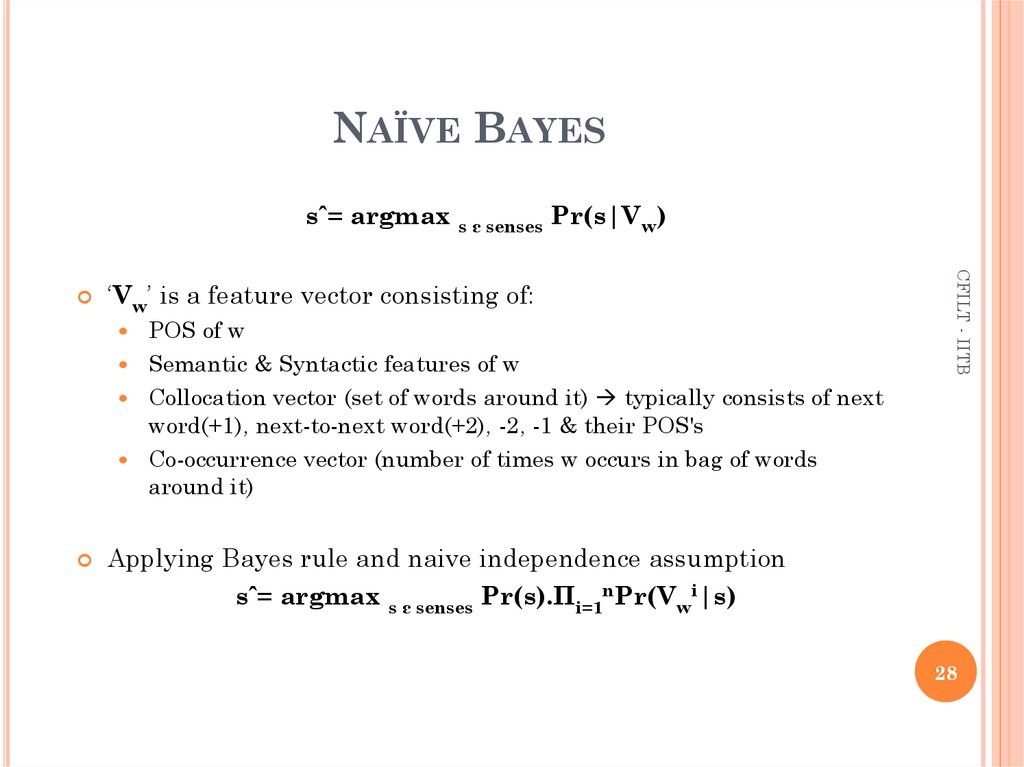

28. NAÏVE BAYES

$\hat{s} = \arg\max_{s \in \text{senses}} \Pr(s \mid V_w)$

‘Vw’ is a feature vector consisting of:

POS of w

Semantic & syntactic features of w

Collocation vector (set of words around it): typically consists of the next word (+1), next-to-next word (+2), -2, -1 and their POS's

Co-occurrence vector (number of times w occurs in the bag of words around it)

Applying Bayes rule and the naive independence assumption:

$\hat{s} = \arg\max_{s \in \text{senses}} \Pr(s) \cdot \prod_{i=1}^{n} \Pr(V_w^i \mid s)$
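The decision rule above can be sketched as a small bag-of-words classifier. The training examples for "chair" are invented, the add-one smoothing is an assumption (the slide does not say how zero counts are handled), and the sum of log-probabilities stands in for the product to avoid numeric underflow.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(examples):
    """examples: list of (sense, list of context features)."""
    sense_counts = Counter()
    feature_counts = defaultdict(Counter)
    vocabulary = set()
    for sense, features in examples:
        sense_counts[sense] += 1
        feature_counts[sense].update(features)
        vocabulary.update(features)
    return sense_counts, feature_counts, vocabulary

def classify(model, features):
    """argmax over senses of log Pr(s) + sum_i log Pr(feature_i | s)."""
    sense_counts, feature_counts, vocabulary = model
    total = sum(sense_counts.values())
    best_sense, best_score = None, -math.inf
    for sense, count in sense_counts.items():
        score = math.log(count / total)
        # Add-one smoothing (an assumption) keeps unseen features non-zero.
        denominator = sum(feature_counts[sense].values()) + len(vocabulary)
        for feature in features:
            score += math.log((feature_counts[sense][feature] + 1) / denominator)
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense

# Hypothetical sense-tagged contexts of the word "chair".
TRAINING = [
    ("furniture", ["seat", "take", "sit"]),
    ("furniture", ["wooden", "seat", "table"]),
    ("person", ["department", "math", "professor"]),
    ("person", ["committee", "department", "elected"]),
]

model = train_naive_bayes(TRAINING)
print(classify(model, ["department", "of", "math"]))
print(classify(model, ["take", "seat"]))
```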

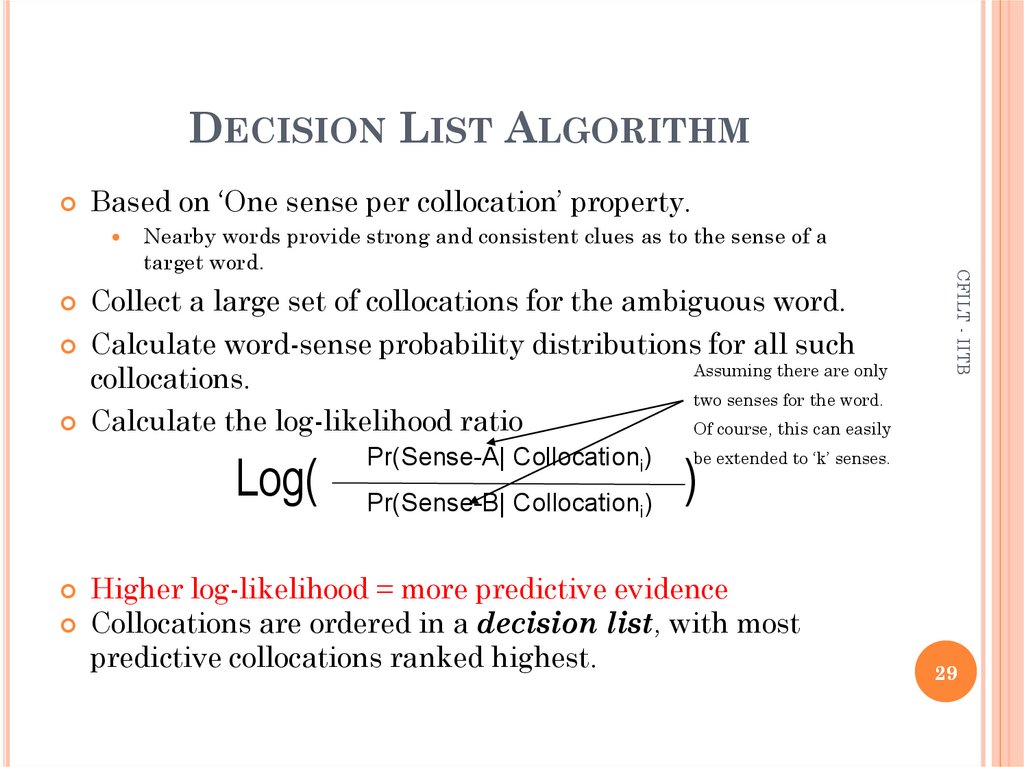

29. DECISION LIST ALGORITHM

Based on the ‘One sense per collocation’ property: nearby words provide strong and consistent clues as to the sense of a target word.

Collect a large set of collocations for the ambiguous word.

Calculate word-sense probability distributions for all such collocations.

Calculate the log-likelihood ratio

$\log\left(\frac{\Pr(\text{Sense-A} \mid \text{Collocation}_i)}{\Pr(\text{Sense-B} \mid \text{Collocation}_i)}\right)$

assuming there are only two senses for the word. Of course, this can easily be extended to ‘k’ senses.

Higher log-likelihood = more predictive evidence.

Collocations are ordered in a decision list, with the most predictive collocations ranked highest.
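A two-sense decision list can be sketched as below. The collocation counts are invented, and the small smoothing constant `alpha` is an assumption that keeps the ratio finite when one sense was never seen with a collocation. Because both conditional probabilities share the same denominator, the ratio of counts is used directly.

```python
import math

def build_decision_list(counts, alpha=0.1):
    """counts: collocation -> {"A": n, "B": m}. Returns rules, strongest first."""
    rules = []
    for collocation, c in counts.items():
        llr = math.log((c.get("A", 0) + alpha) / (c.get("B", 0) + alpha))
        rules.append((abs(llr), collocation, "A" if llr > 0 else "B"))
    rules.sort(reverse=True)
    return rules

def classify(rules, context_words, default=None):
    """Apply the first (most predictive) rule whose collocation is present."""
    for _, collocation, sense in rules:
        if collocation in context_words:
            return sense
    return default

# Hypothetical counts for an ambiguous word with senses A and B.
COUNTS = {
    "life": {"A": 10, "B": 1},
    "manufacturing": {"A": 1, "B": 20},
    "growth": {"A": 5, "B": 4},
}

rules = build_decision_list(COUNTS)
print([collocation for _, collocation, _ in rules])
print(classify(rules, {"growth", "manufacturing"}))
```

Only the single highest-ranked matching rule decides, so the weak "growth" evidence cannot outvote "manufacturing".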

30. Supervised Approaches – Conclusions

General Comments

Use corpus evidence instead of relying on dictionary defined senses.

Can capture important clues provided by proper nouns because proper nouns do appear in a corpus.

Naïve Bayes

Suffers from data sparseness.

Since the scores are a product of probabilities, some weak features might pull down the overall score for a sense.

A large number of parameters need to be trained.

Decision Lists

A word-specific classifier. A separate classifier needs to be trained for each word.

Uses the single most predictive feature, which eliminates the drawback of Naïve Bayes.

31. Supervised Approaches – Conclusions

SVM

A word-sense specific classifier.

Gives the highest improvement over the baseline accuracy.

Uses a diverse set of features.

HMM

Significant in view of the fact that a fine distinction between the various senses of a word is not needed in tasks like MT.

A broad coverage classifier, as the same knowledge sources can be used for all words belonging to a super sense.

Even though the polysemy was reduced significantly, there was not a comparable significant improvement in the performance.

Exemplar Based K-NN

A word-specific classifier.

Will not work for unknown words which do not appear in the corpus.

Uses a diverse set of features (including morphological and noun-subject-verb pairs).

32. ROADMAP

Knowledge Based Approaches: WSD using Selectional Preferences (or restrictions); Overlap Based Approaches

Machine Learning Based Approaches: Supervised Approaches; Semi-supervised Algorithms; Unsupervised Algorithms

Hybrid Approaches

Reducing Knowledge Acquisition Bottleneck

WSD and MT

Summary

Future Work

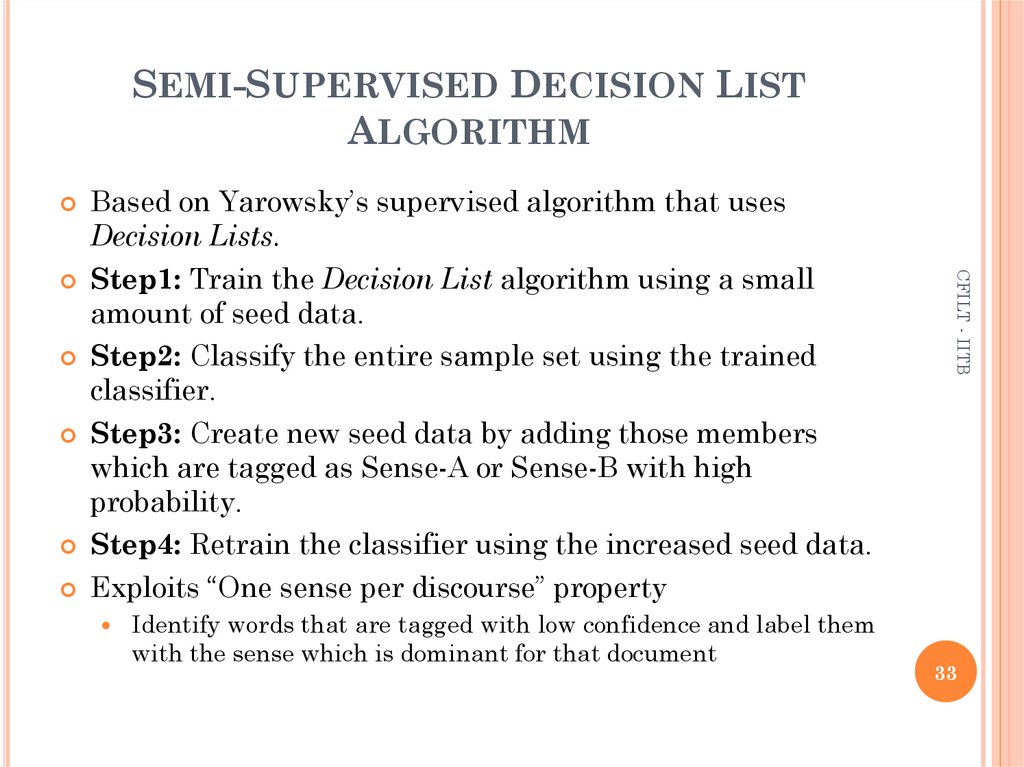

33. SEMI-SUPERVISED DECISION LIST ALGORITHM

Based on Yarowsky’s supervised algorithm that uses Decision Lists.

Step 1: Train the Decision List algorithm using a small amount of seed data.

Step 2: Classify the entire sample set using the trained classifier.

Step 3: Create new seed data by adding those members which are tagged as Sense-A or Sense-B with high probability.

Step 4: Retrain the classifier using the increased seed data.

Exploits the “One sense per discourse” property: identify words that are tagged with low confidence and label them with the sense which is dominant for that document.
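The bootstrapping loop in Steps 1 to 4 can be sketched as follows. This is a simplified illustration rather than Yarowsky's full algorithm: contexts are plain sets of words, the classifier uses a smoothed log-likelihood ratio per word, the confidence threshold is an invented parameter, and the "one sense per discourse" correction is left out. The toy contexts are hypothetical.

```python
import math
from collections import Counter

def train(contexts, labels, alpha=0.1):
    """Log-likelihood ratio log(count_A / count_B) for every labelled word."""
    counts = {"A": Counter(), "B": Counter()}
    for index, sense in labels.items():
        counts[sense].update(contexts[index])
    words = set(counts["A"]) | set(counts["B"])
    return {w: math.log((counts["A"][w] + alpha) / (counts["B"][w] + alpha))
            for w in words}

def bootstrap(contexts, seeds, threshold=1.0):
    """Grow the labelled set until no new context can be tagged confidently."""
    labels = dict(seeds)
    while True:
        llr = train(contexts, labels)                      # Steps 1 and 4
        newly_tagged = {}
        for index, context in enumerate(contexts):         # Step 2
            if index in labels:
                continue
            evidence = [llr[w] for w in context if w in llr]
            if not evidence:
                continue
            strongest = max(evidence, key=abs)
            if abs(strongest) >= threshold:                # Step 3
                newly_tagged[index] = "A" if strongest > 0 else "B"
        if not newly_tagged:   # the residual set has stabilized
            return labels
        labels.update(newly_tagged)

# Hypothetical contexts of an ambiguous word; two of them are seeds.
CONTEXTS = [
    {"life", "animal"}, {"manufacturing", "car"}, {"animal", "species"},
    {"car", "equipment"}, {"species", "flower"}, {"equipment", "factory"},
    {"unrelated"},
]
SEEDS = {0: "A", 1: "B"}

labels = bootstrap(CONTEXTS, SEEDS)
print(labels)
```

Labels spread outward from the two seeds through shared context words, and the last context stays in the residual set because it shares no word with any labelled example.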

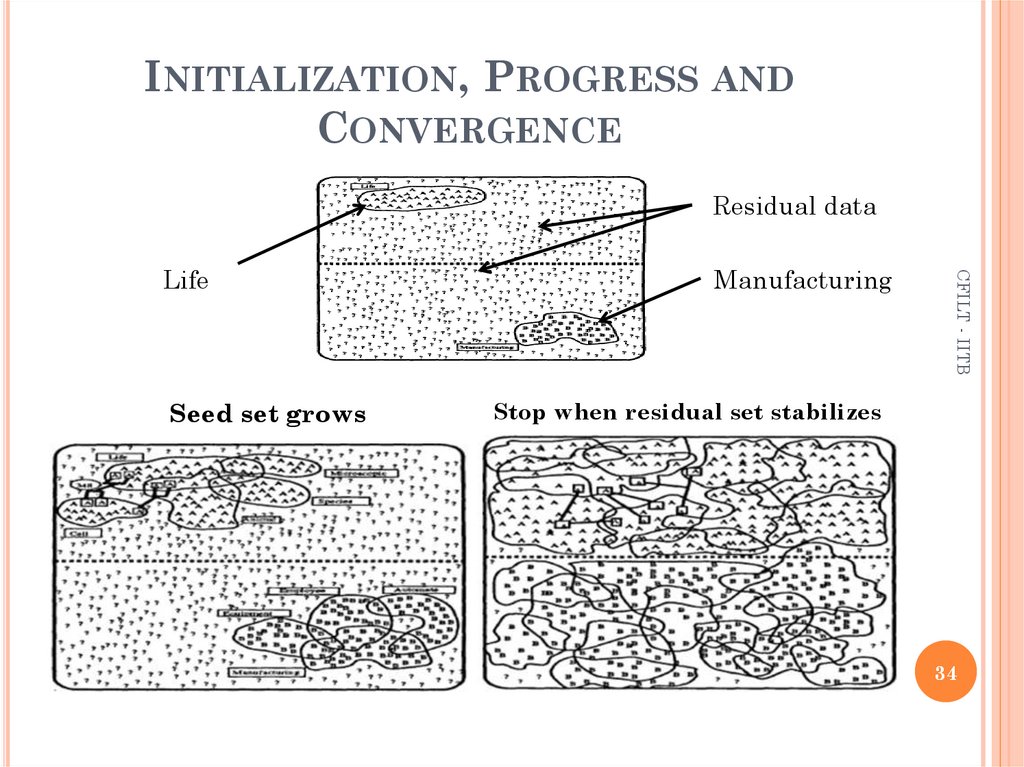

34. Initialization, Progress and Convergence

Figure: the seed sets for the two senses (labelled “Life” and “Manufacturing”) grow while the residual data shrinks.

Stop when residual set stabilizes

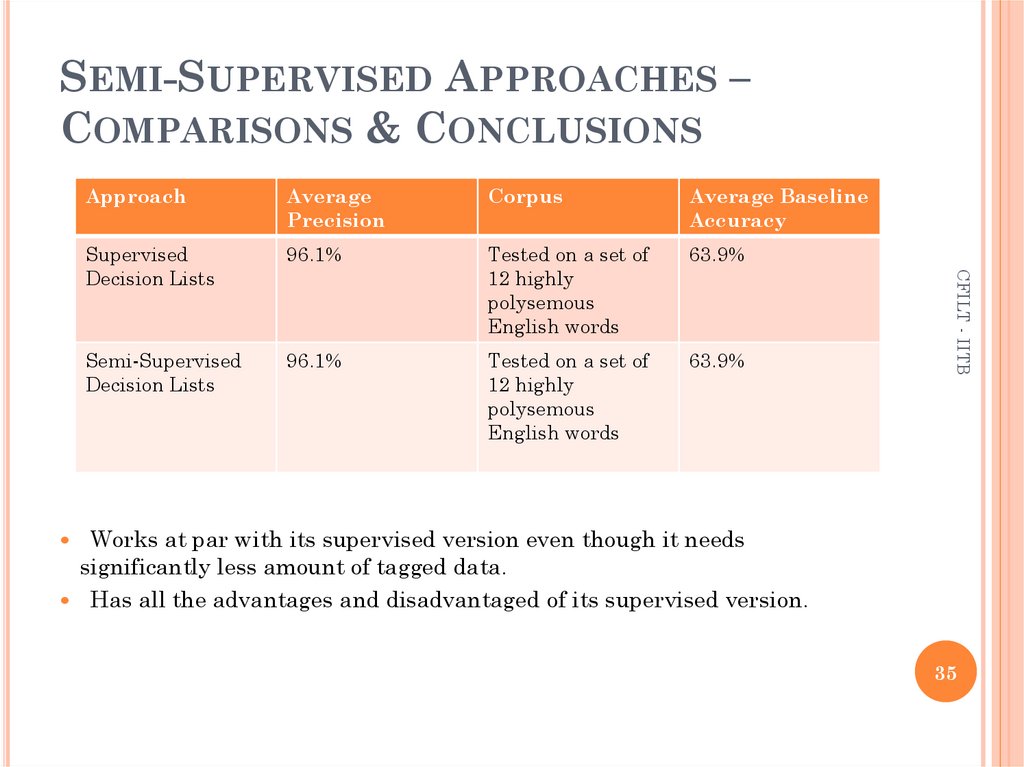

35. Semi-Supervised Approaches – Comparisons & Conclusions

| Approach | Average Precision | Corpus | Average Baseline Accuracy |
|---|---|---|---|
| Supervised Decision Lists | 96.1% | Tested on a set of 12 highly polysemous English words | 63.9% |
| Semi-Supervised Decision Lists | 96.1% | Tested on a set of 12 highly polysemous English words | 63.9% |

Works on par with its supervised version even though it needs significantly less tagged data.

Has all the advantages and disadvantages of its supervised version.


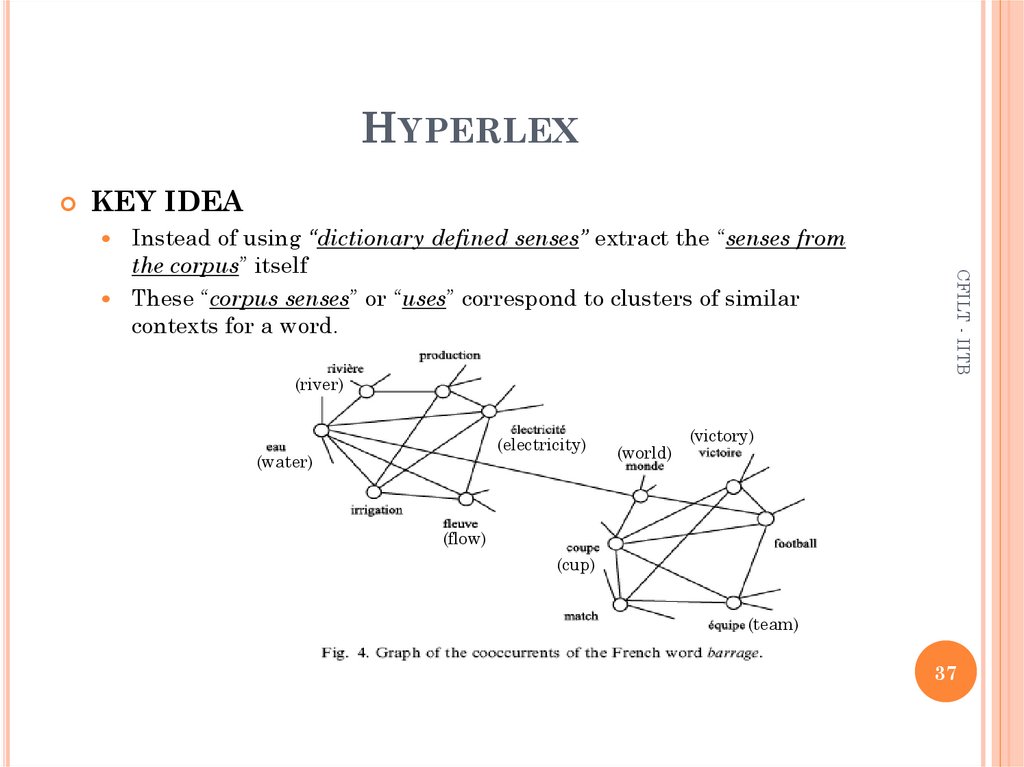

37. HYPERLEX

KEY IDEA

Instead of using “dictionary defined senses”, extract the “senses from the corpus” itself.

These “corpus senses” or “uses” correspond to clusters of similar contexts for a word.

Figure: clusters of co-occurring words, with nodes labelled (electricity), (water), (river), (flow), (world), (victory), (cup), (team).

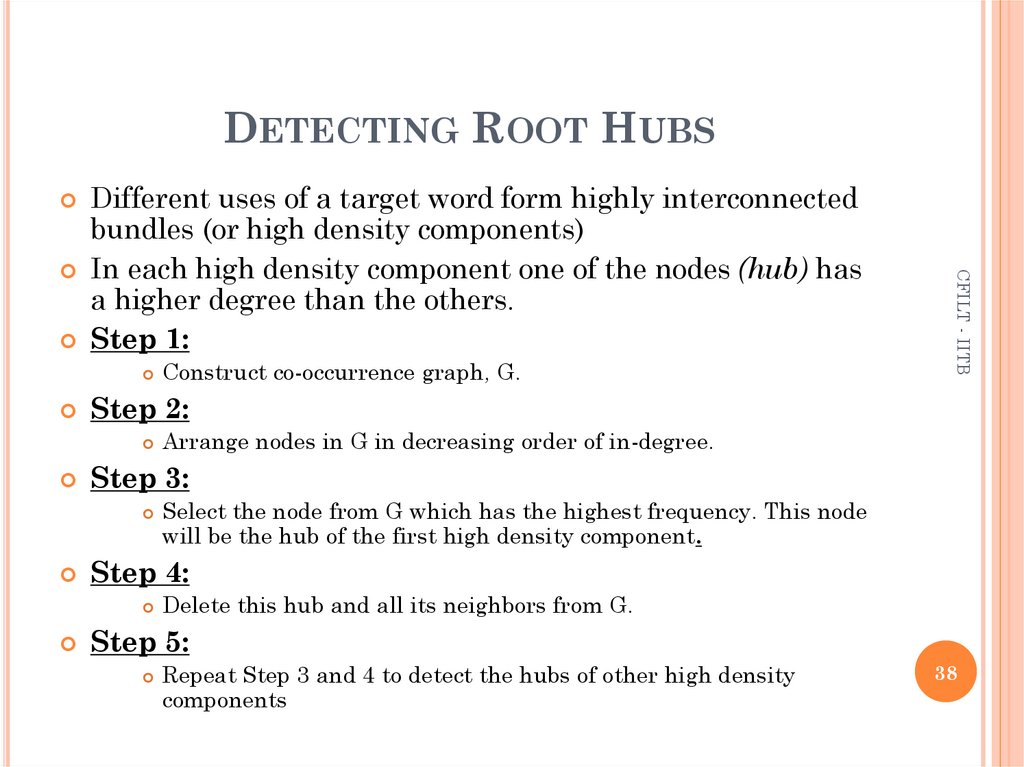

38. DETECTING ROOT HUBS

Different uses of a target word form highly interconnected bundles (or high density components).

In each high density component one of the nodes (hub) has a higher degree than the others.

Step 1: Construct co-occurrence graph, G.

Step 2: Arrange nodes in G in decreasing order of in-degree.

Step 3: Select the node from G which has the highest frequency. This node will be the hub of the first high density component.

Step 4: Delete this hub and all its neighbors from G.

Step 5: Repeat Steps 3 and 4 to detect the hubs of the other high density components.
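The hub detection loop can be sketched on a toy co-occurrence graph. The slide ranks nodes by in-degree in one step and by frequency in another; this sketch uses the degree in the remaining graph as the ranking criterion, which is an assumption, as are the `min_degree` stopping threshold, the alphabetical tie-break and the invented edges.

```python
def build_graph(edges):
    """Undirected co-occurrence graph as node -> set of neighbours."""
    graph = {}
    for u, v in edges:
        graph.setdefault(u, set()).add(v)
        graph.setdefault(v, set()).add(u)
    return graph

def detect_root_hubs(graph, min_degree=2):
    """Repeatedly take the best-connected node, then drop it and its neighbours."""
    remaining = {node: set(nbrs) for node, nbrs in graph.items()}
    hubs = []
    while remaining:
        # Highest degree first; ties are broken alphabetically.
        hub = min(remaining, key=lambda n: (-len(remaining[n]), n))
        if len(remaining[hub]) < min_degree:
            break
        hubs.append(hub)
        removed = remaining[hub] | {hub}
        remaining = {node: nbrs - removed
                     for node, nbrs in remaining.items()
                     if node not in removed}
    return hubs

# Hypothetical co-occurrences around the French word "barrage".
EDGES = [("eau", "pluie"), ("eau", "riviere"), ("eau", "lac"),
         ("pluie", "riviere"),
         ("match", "but"), ("match", "equipe"), ("match", "coupe")]

hubs = detect_root_hubs(build_graph(EDGES))
print(hubs)
```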

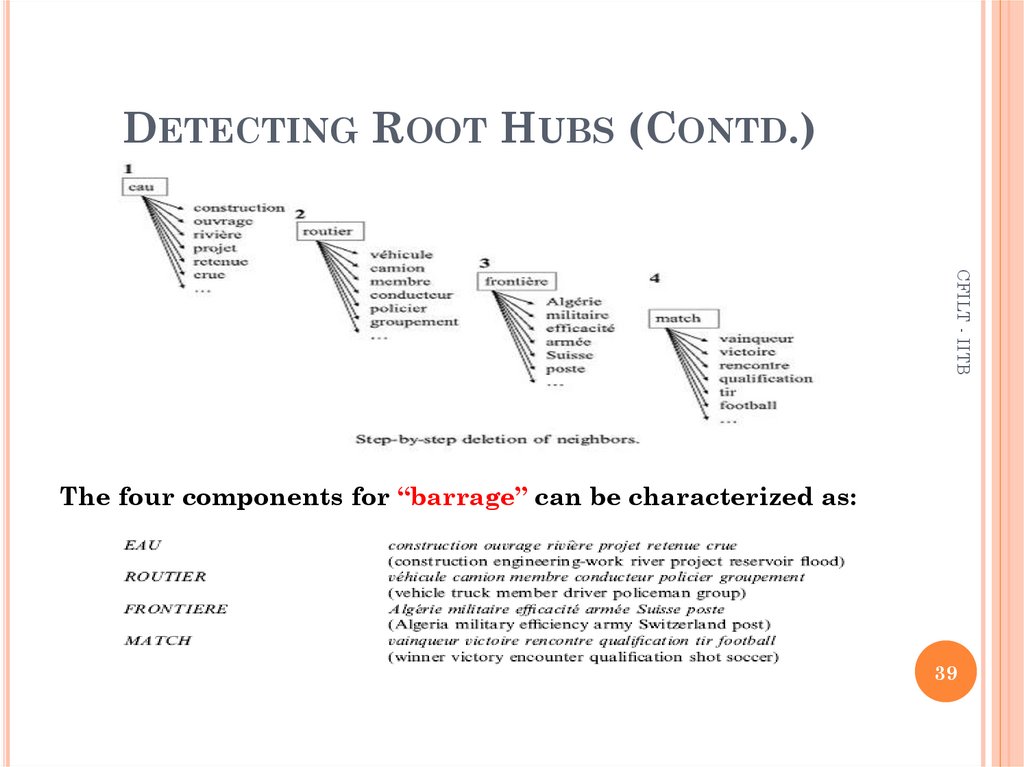

39. DETECTING ROOT HUBS (CONTD.)

The four components for “barrage” can be characterized as:

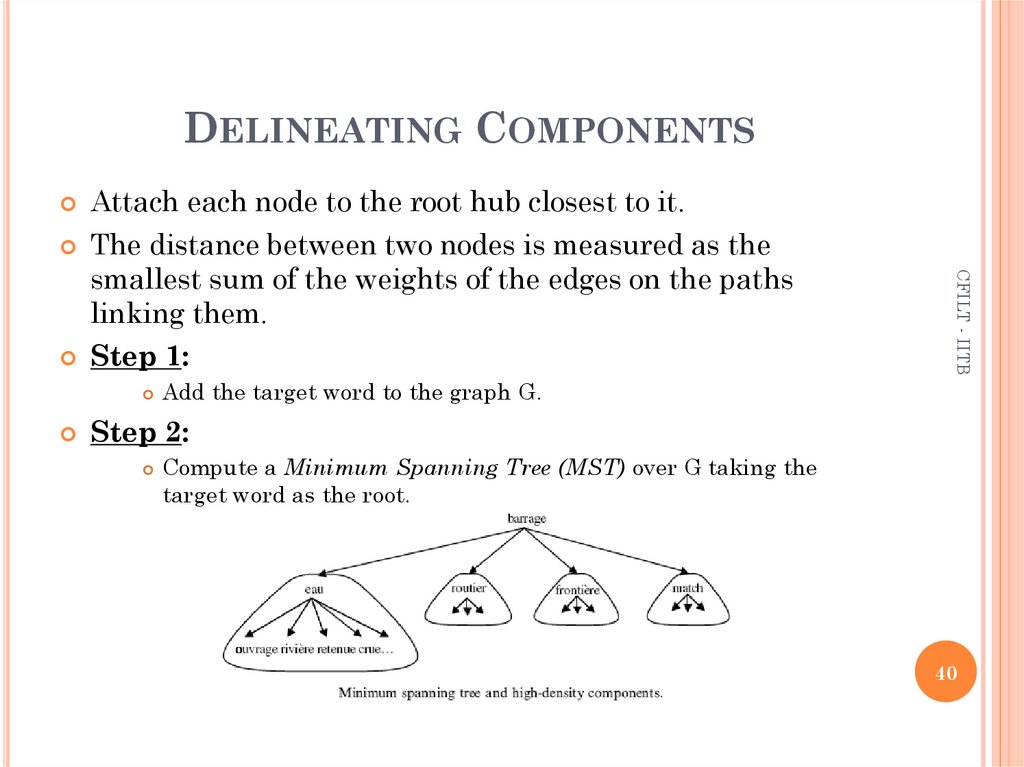

40. DELINEATING COMPONENTS

Attach each node to the root hub closest to it.

The distance between two nodes is measured as the smallest sum of the weights of the edges on the paths linking them.

Step 1: Add the target word to the graph G.

Step 2: Compute a Minimum Spanning Tree (MST) over G taking the target word as the root.
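The distance definition (smallest sum of edge weights along a path) can be illustrated with Dijkstra's shortest-path algorithm. Note that this sketch attaches nodes to their closest hub directly from shortest-path distances; it does not build the MST described in Step 2. The edge weights are invented.

```python
import heapq

def build_weighted_graph(edges):
    """Undirected weighted graph as node -> {neighbour: weight}."""
    graph = {}
    for u, v, weight in edges:
        graph.setdefault(u, {})[v] = weight
        graph.setdefault(v, {})[u] = weight
    return graph

def shortest_distances(graph, source):
    """Dijkstra: smallest sum of edge weights from source to every node."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue  # stale queue entry
        for neighbour, weight in graph[node].items():
            candidate = d + weight
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                heapq.heappush(heap, (candidate, neighbour))
    return dist

def attach_to_hubs(graph, hubs):
    """Map every non-hub node to the hub with the smallest distance."""
    dist = {hub: shortest_distances(graph, hub) for hub in hubs}
    return {node: min(hubs, key=lambda h: dist[h].get(node, float("inf")))
            for node in graph if node not in hubs}

# Hypothetical weights (smaller weight = stronger association).
EDGES = [("eau", "pluie", 0.5), ("pluie", "saison", 0.25),
         ("match", "coupe", 0.5), ("coupe", "saison", 1.0)]

attachment = attach_to_hubs(build_weighted_graph(EDGES), ["eau", "match"])
print(attachment)
```

Here "saison" is reachable from both hubs, but the path through "pluie" (0.75) is shorter than the path through "coupe" (1.5), so it joins the EAU component.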

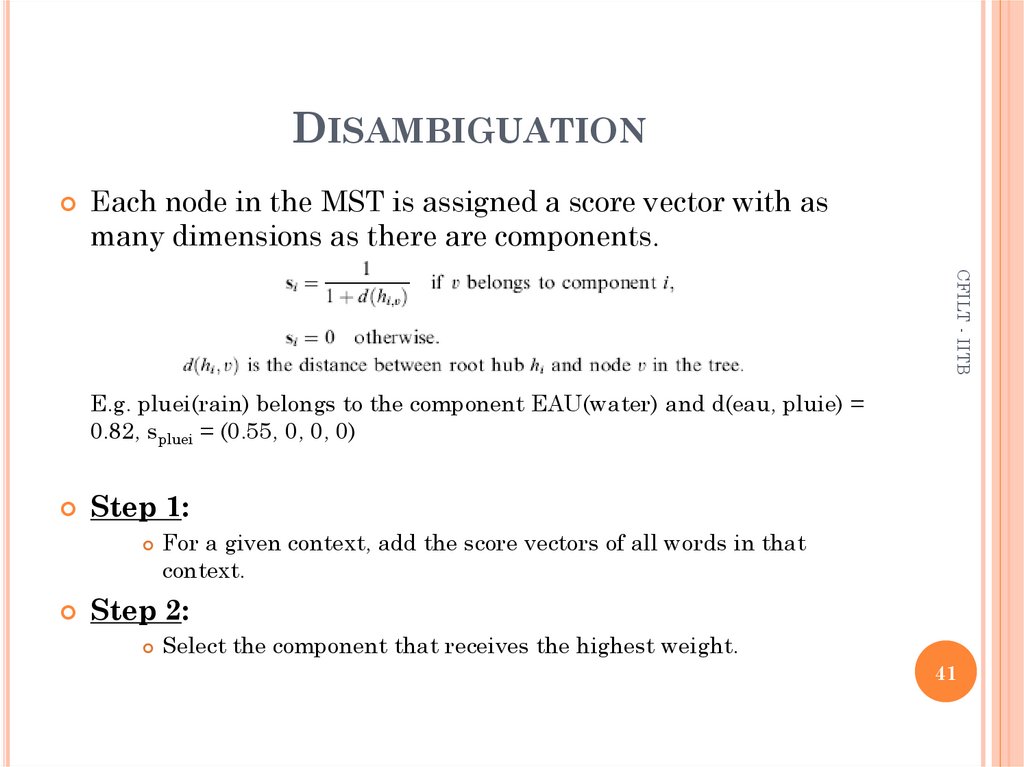

41. DISAMBIGUATION

Each node in the MST is assigned a score vector with as many dimensions as there are components.

E.g. pluie (rain) belongs to the component EAU (water) and d(eau, pluie) = 0.82, so s_pluie = (0.55, 0, 0, 0)

Step 1: For a given context, add the score vectors of all words in that context.

Step 2: Select the component that receives the highest weight.

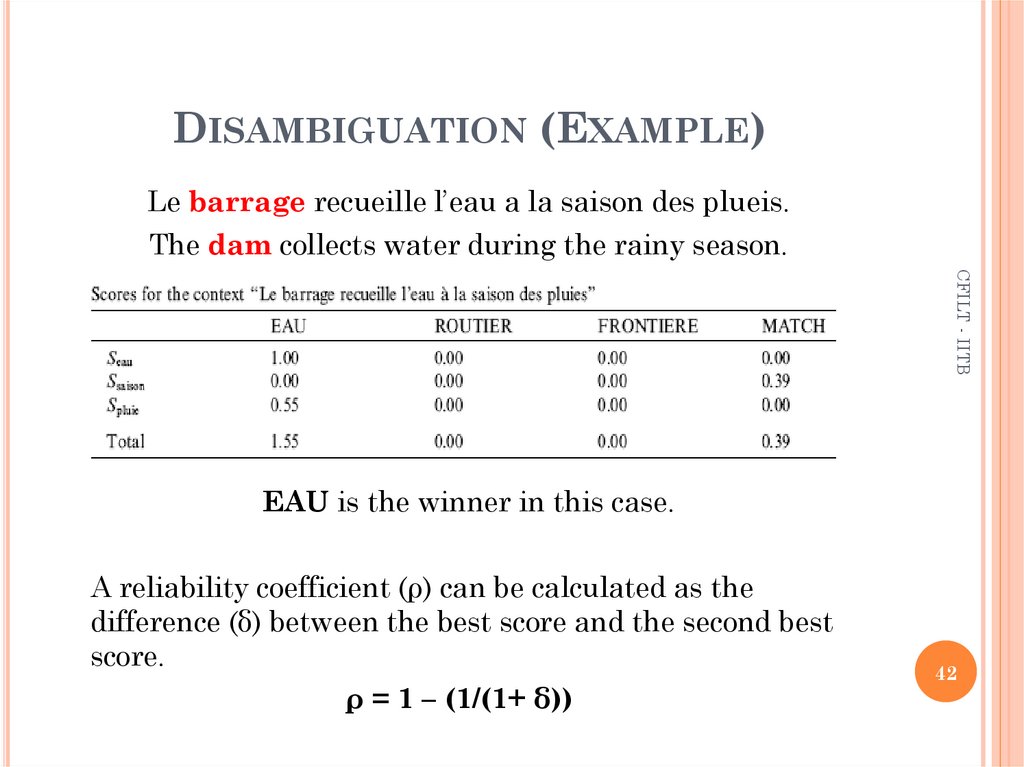

42. DISAMBIGUATION (EXAMPLE)

Le barrage recueille l’eau à la saison des pluies.

The dam collects water during the rainy season.

EAU is the winner in this case.

A reliability coefficient (ρ) can be calculated from the difference (δ) between the best score and the second best score:

$\rho = 1 - \frac{1}{1 + \delta}$
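The disambiguation step and the reliability coefficient can be sketched together. The score formula is not legible in the slides; the sketch assumes s = 1/(1 + d), which is consistent with the example d(eau, pluie) = 0.82 giving 0.55. The component names C2 to C4 are placeholders, and the entry for "recueille" is invented to give a second component some weight.

```python
COMPONENTS = ["EAU", "C2", "C3", "C4"]   # C2..C4 are placeholder names

# word -> (component it belongs to, distance to that component's hub)
NODE_INFO = {
    "eau": ("EAU", 0.0),
    "pluie": ("EAU", 0.82),      # distance taken from the slide
    "recueille": ("C2", 1.0),    # hypothetical entry
}

def score_vector(word):
    """One dimension per component; non-zero only for the word's own component."""
    vector = [0.0] * len(COMPONENTS)
    if word in NODE_INFO:
        component, distance = NODE_INFO[word]
        # Assumed formula: 1 / (1 + d).
        vector[COMPONENTS.index(component)] = 1.0 / (1.0 + distance)
    return vector

def disambiguate(context_words):
    """Sum the score vectors, pick the best component, compute reliability."""
    totals = [0.0] * len(COMPONENTS)
    for word in context_words:
        for i, score in enumerate(score_vector(word)):
            totals[i] += score
    ranked = sorted(totals, reverse=True)
    delta = ranked[0] - ranked[1]
    reliability = 1.0 - 1.0 / (1.0 + delta)
    return COMPONENTS[totals.index(ranked[0])], reliability

winner, rho = disambiguate(["barrage", "recueille", "eau", "saison", "pluie"])
print(winner, round(rho, 3))
```

Words that are missing from the graph contribute a zero vector, so only "eau", "pluie" and "recueille" influence the result in this toy context.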

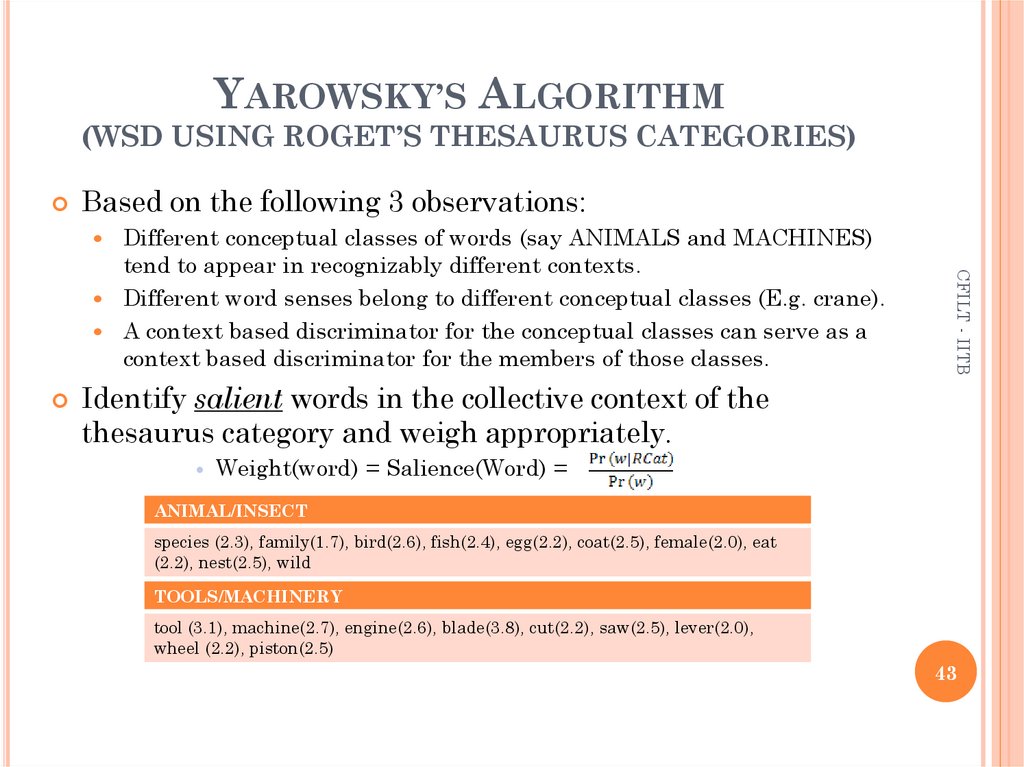

43. YAROWSKY’S ALGORITHM (WSD USING ROGET’S THESAURUS CATEGORIES)

Based on the following 3 observations:

Different conceptual classes of words (say ANIMALS and MACHINES) tend to appear in recognizably different contexts.

Different word senses belong to different conceptual classes (e.g. crane).

A context based discriminator for the conceptual classes can serve as a context based discriminator for the members of those classes.

Identify salient words in the collective context of the thesaurus category and weigh appropriately.

Weight(word) = Salience(Word) =

ANIMAL/INSECT: species (2.3), family (1.7), bird (2.6), fish (2.4), egg (2.2), coat (2.5), female (2.0), eat (2.2), nest (2.5), wild

TOOLS/MACHINERY: tool (3.1), machine (2.7), engine (2.6), blade (3.8), cut (2.2), saw (2.5), lever (2.0), wheel (2.2), piston (2.5)

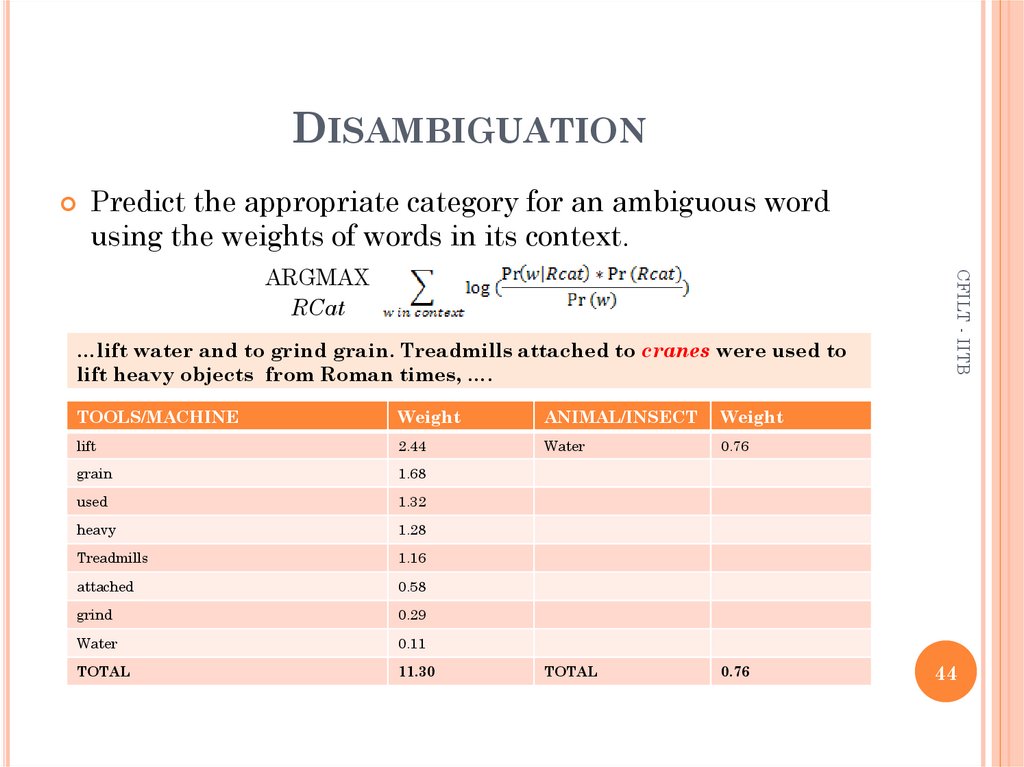

44. DISAMBIGUATION

Predict the appropriate category for an ambiguous word using the weights of words in its context.

…lift water and to grind grain. Treadmills attached to cranes were used to lift heavy objects from Roman times, ….

| TOOLS/MACHINE | Weight | ANIMAL/INSECT | Weight |
|---|---|---|---|
| lift | 2.44 | Water | 0.76 |
| grain | 1.68 | | |
| used | 1.32 | | |
| heavy | 1.28 | | |
| Treadmills | 1.16 | | |
| attached | 0.58 | | |
| grind | 0.29 | | |
| Water | 0.11 | | |
| TOTAL | 11.30 | TOTAL | 0.76 |

The predicted category is the ARGMAX over RCat of the total weight.
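The totals in the example can be reproduced by summing the category weights over every token of the context. The weights are copied from the slide; the tokenizer and the function name `category_scores` are assumptions. The TOOLS/MACHINE total of 11.30 is reached only when "lift", which occurs twice in the context, is counted twice.

```python
import re

WEIGHTS = {
    "TOOLS/MACHINE": {"lift": 2.44, "grain": 1.68, "used": 1.32,
                      "heavy": 1.28, "treadmills": 1.16, "attached": 0.58,
                      "grind": 0.29, "water": 0.11},
    "ANIMAL/INSECT": {"water": 0.76},
}
CONTEXT = ("lift water and to grind grain. Treadmills attached to cranes "
           "were used to lift heavy objects from Roman times")

def category_scores(context, weights):
    """Sum the weight of every context token, separately for each category."""
    tokens = re.findall(r"[a-z]+", context.lower())
    return {category: sum(table.get(token, 0.0) for token in tokens)
            for category, table in weights.items()}

scores = category_scores(CONTEXT, WEIGHTS)
best_category = max(scores, key=scores.get)
print({c: round(s, 2) for c, s in scores.items()}, best_category)
```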

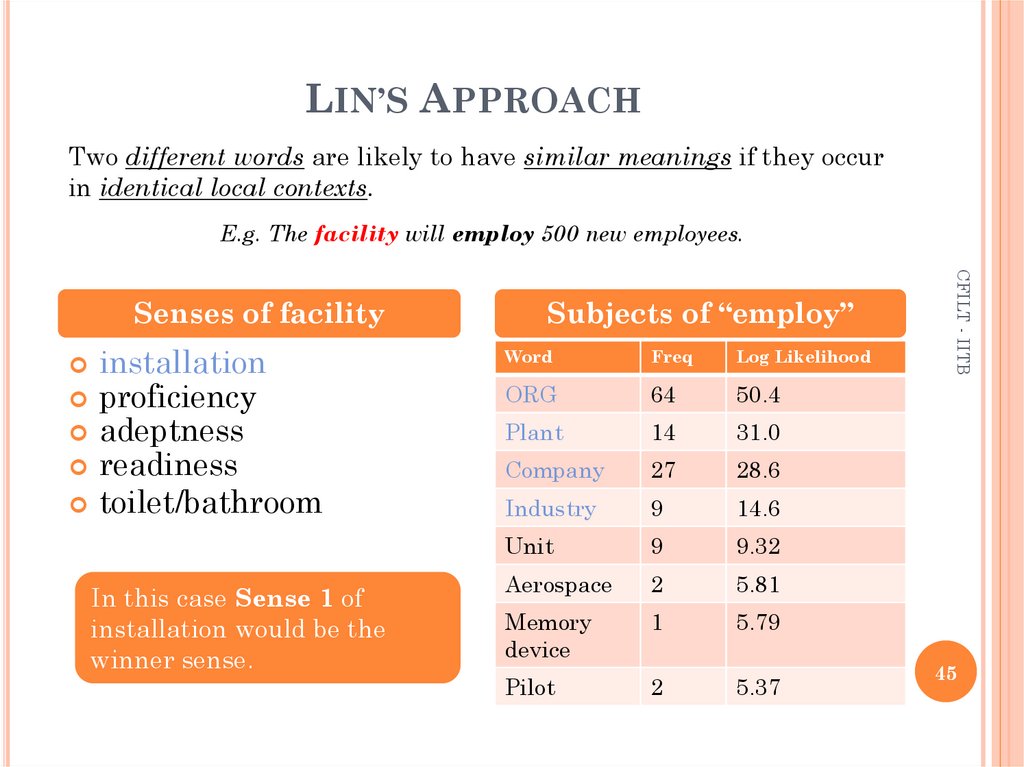

45. LIN’S APPROACH

Two different words are likely to have similar meanings if they occur in identical local contexts.

E.g. The facility will employ 500 new employees.

Senses of facility: installation, proficiency, adeptness, readiness, toilet/bathroom

Subjects of “employ”

| Word | Freq | Log Likelihood |
|---|---|---|
| ORG | 64 | 50.4 |
| Plant | 14 | 31.0 |
| Company | 27 | 28.6 |
| Industry | 9 | 14.6 |
| Unit | 9 | 9.32 |
| Aerospace | 2 | 5.81 |
| Memory device | 1 | 5.79 |
| Pilot | 2 | 5.37 |

In this case Sense 1 of installation would be the winner sense.
